OpenAI’s Product Lead Reveals the New Playbook for Product-Market Fit in AI Startups

Why the old frameworks are failing in 2025, and how to build AI products that scale (PRD template inside)

By Miqdad Jaffer, OpenAI’s Product Lead.

Product-Market Fit used to be straightforward. Build something people want, validate demand, scale up. But in the age of AI, everything has changed.

The speed of iteration, the complexity of user expectations, and the sheer pace of technological advancement have rendered traditional PMF frameworks obsolete.

I’ve spent the last three years watching many AI startups attempt to achieve PMF.

The ones that succeed aren’t just building better technology. They’re following an entirely new playbook, one that acknowledges a fundamental truth: AI doesn’t just change how we build products; it changes what Product-Market Fit means.

The AI PMF Paradox

Here’s what most founders don’t realize: achieving PMF in the AI era is both easier and harder than ever before.

It’s easier because AI can help you iterate faster, understand users better, and build more personalized solutions. You can prototype in days, not months, and analyze user behavior patterns that once would have taken armies of analysts to uncover.

It’s harder because user expectations have skyrocketed. Users now expect AI products to be intelligent, predictive, and almost magical in their capabilities. They compare every AI product to ChatGPT, regardless of the use case. The bar for “good enough” has never been higher.

“The biggest mistake I see AI founders make is treating PMF like a checkbox,” I told the most recent cohort of our AI Product Management Certification. “In the AI world, PMF is a moving target. Your users’ definition of ‘intelligent enough’ changes every month as they interact with better AI systems elsewhere.”

This creates what I call the AI PMF Paradox: you need to achieve a fit with a market that’s constantly evolving its expectations of what AI should do.

The Traditional PMF Framework Is Broken for AI

Most PMF frameworks assume a relatively stable problem-solution relationship. You identify a pain point, build a solution, validate with users, and scale. But AI products break this linear progression in three critical ways:

1. The Problem Evolves as Users Learn: Traditional products solve known problems. AI products often solve problems users didn’t know they had, or create entirely new workflows they never imagined possible. Your initial problem hypothesis might be completely wrong, not because you misunderstood the market, but because AI unlocked a more valuable use case.

2. The Solution Space Is Infinite: With traditional software, you’re constrained by development resources and technical complexity. With AI, the constraints are different: training data, model capabilities, and prompt engineering. This means your MVP might be incredibly powerful in some areas and surprisingly limited in others, creating unpredictable user experiences.

3. User Expectations Compound Exponentially: Once users experience AI that works well in one context, they expect it everywhere. If ChatGPT can understand nuanced requests, why can’t your industry-specific AI tool? This creates a constantly rising bar for what constitutes PMF.

The New AI PMF Framework: 4 Phases to Systematic Success

After studying successful AI products and reviewing many AI capstone projects from our AI Product Management Certification, I’ve identified a new framework that actually works in the AI era. It’s built around the reality that AI PMF is iterative, data-driven, and requires constant recalibration.

Phase 1: Opportunity Spotting - Finding AI-Native Pain Points

One of the most common mistakes AI founders make is taking an existing workflow and adding AI on top. That’s not innovation; it’s feature augmentation. True AI PMF starts with identifying pain points that only AI’s unique capabilities can solve.

Common Blindspot: The best AI opportunities often look like problems that shouldn’t need solving. Users have developed complex workarounds for limitations that AI can eliminate entirely.

I call these “invisible pain points”: friction so embedded in current workflows that users no longer recognize it as a problem. At one startup, I noticed that most developers were spending 40% of their time on routine coding tasks, but they didn’t see this as a problem; to them it was “just part of the job.”

How to Spot AI-Native Opportunities:

The foundation of AI PMF is rigorous pain point analysis. Use these five questions, each viewed through an AI lens, to rank which pains are worth solving:

Magnitude: How many people have this pain? AI consideration: Does this pain exist across industries where AI could be applied horizontally?

Frequency: How often do they experience this pain? AI consideration: Is this pain frequent enough to generate the data needed for AI to learn and improve?

Severity: How bad is this pain? AI consideration: Does this pain involve cognitive load, pattern recognition, or decision-making that AI excels at?

Competition: Who else is solving this pain? AI consideration: Are current solutions limited by human constraints that AI could transcend?

Contrast: Do users have a major complaint about how competitors solve this pain? AI consideration: Do users complain about a lack of personalization, speed, or intelligence in existing solutions?

This methodical approach ensures you’re not just finding any pain point—you’re identifying pain points that become dramatically easier to solve once you have AI in the loop.
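To make the ranking step concrete, here is a minimal Python sketch that scores candidate pain points on the five questions and sorts them. It assumes a 1 to 5 scale per question, where 5 is most favorable for an AI-native opportunity (so a high Competition score means existing solutions are weak or limited by human constraints), and equal weights by default. The `PainPoint` type, the `rank_pain_points` helper, and the example scores are all hypothetical illustrations, not part of any published template.

```python
from __future__ import annotations

from dataclasses import dataclass

# The five pain point questions. Each is scored 1..5, where 5 is most
# favorable for an AI-native opportunity (assumption: for "competition",
# 5 means existing solutions are weak or human-constrained).
CRITERIA = ("magnitude", "frequency", "severity", "competition", "contrast")


@dataclass
class PainPoint:
    name: str
    scores: dict[str, int]  # criterion -> score in 1..5


def total_score(pain: PainPoint, weights: dict[str, float] | None = None) -> float:
    """Weighted sum of the five criterion scores (equal weights by default)."""
    weights = weights or {c: 1.0 for c in CRITERIA}
    for c in CRITERIA:
        if not 1 <= pain.scores[c] <= 5:
            raise ValueError(f"{pain.name}: {c} score must be in 1..5")
    return sum(weights[c] * pain.scores[c] for c in CRITERIA)


def rank_pain_points(pains, weights=None):
    """Return pain points sorted from most to least worth solving."""
    return sorted(pains, key=lambda p: total_score(p, weights), reverse=True)


# Hypothetical candidates with illustrative (made-up) scores.
candidates = [
    PainPoint("quarterly report formatting",
              {"magnitude": 3, "frequency": 2, "severity": 2, "competition": 4, "contrast": 2}),
    PainPoint("routine coding tasks",
              {"magnitude": 5, "frequency": 5, "severity": 3, "competition": 3, "contrast": 4}),
]

for p in rank_pain_points(candidates):
    print(p.name, total_score(p))
# routine coding tasks 20.0
# quarterly report formatting 13.0
```

Passing custom weights lets you emphasize a question such as Frequency when your AI depends on a steady stream of interaction data to improve.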

Real Example from the Market: Look at Klarna’s AI assistant launch. They didn’t start by trying to “make customer service better with AI.” They spotted an invisible pain point: customers were spending 11 minutes on average resolving simple payment issues that required no human creativity, just access to account information and standard procedures. With the AI assistant, customers now resolve these issues in under 2 minutes, and in its first month the assistant handled 2.3 million conversations, the equivalent workload of 700 full-time agents. That’s AI-native opportunity spotting: finding workflows that only seem complex because they lack intelligent automation.

Phase 2: Build MVP using an AI Product Requirements Document (PRD)

Once you’ve identified a truly AI-native opportunity, traditional product requirements documents fall apart. AI products require a fundamentally different approach to specification, testing, and iteration.

This is where most teams stumble. They try to apply waterfall thinking to systems that are inherently probabilistic. You can’t specify exactly how an AI will behave in every scenario—but you can create frameworks for consistent, valuable outputs.

The AI PRD: Your North Star for Intelligent Products

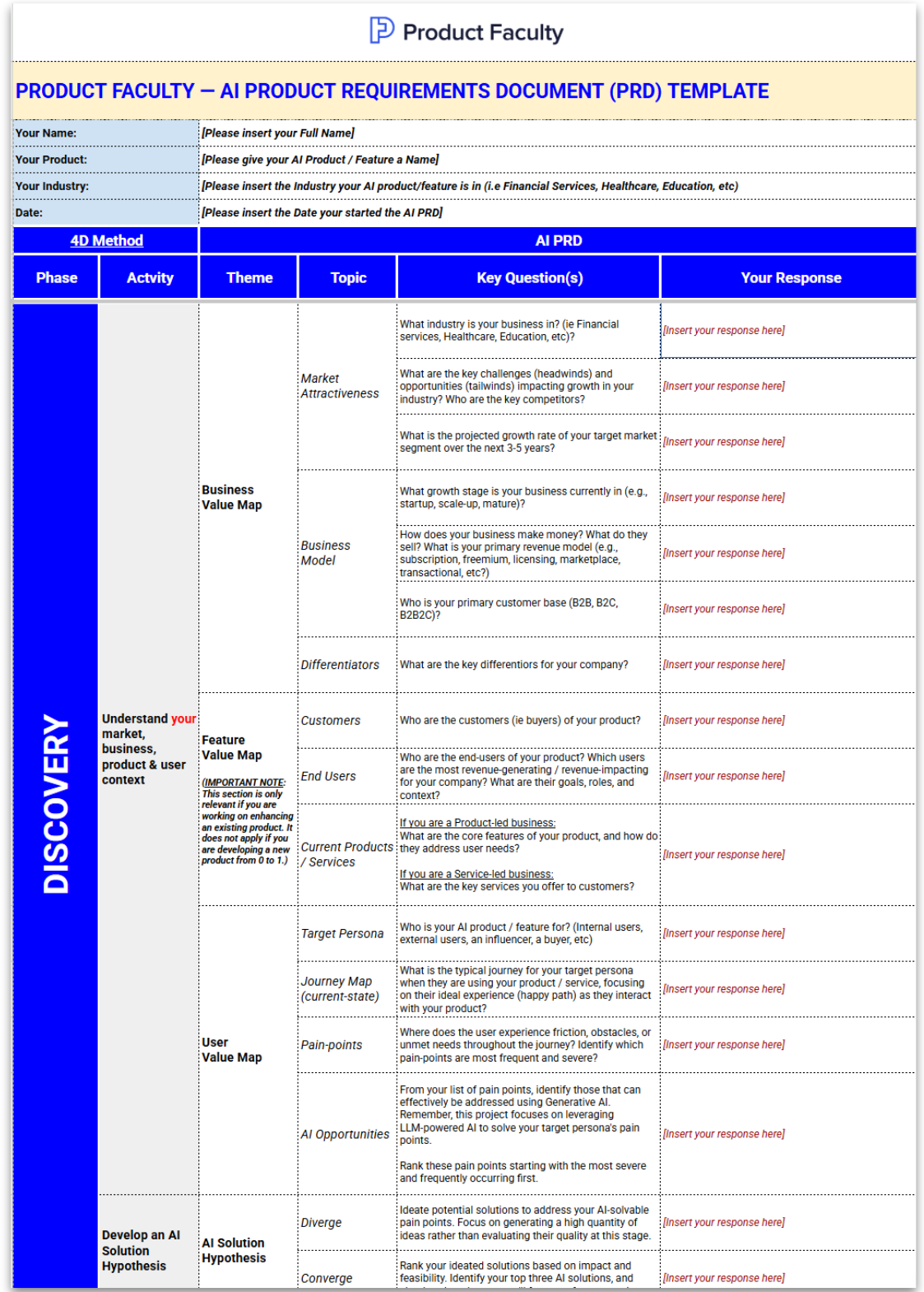

Having collaborated with many AI product teams, I’ve developed the 4D Method for Building AI Products. Its core principles are captured in an AI Product Requirements Document (PRD), the foundational blueprint of any AI development effort, which I created with Product Faculty. The PRD highlights the critical decisions across the four phases of the AI product development lifecycle: Discover, Design, Develop, and Deploy.

Here’s a summary of each section of the AI PRD, which you can use to develop your MVP:

1. Discover Phase: Understanding market, business, product, and user context to develop your AI Solution Hypothesis

Map the business value your AI will create

Identify your target persona and their current journey

Spot the specific pain points that AI can uniquely address

Develop a hypothesis for how AI changes the user experience

2. Design Phase: Defining the target state workflow and user experience

Design the future-state workflow with AI integrated

Create wireframes that show AI interactions clearly

Build prototypes that demonstrate AI capabilities

Develop initial prompts and interaction patterns

3. Develop Phase: Building and refining the AI capabilities

Select the right AI model for your use case

Define input specifications and output quality criteria

Iterate on prompt design and system instructions

Prepare data for training or retrieval-augmented generation

Create evaluation sets for testing AI performance

4. Deploy Phase: Launching and scaling your AI product

Finalize launch and rollout strategies

Establish success metrics for both user and AI performance

Set up monitoring and feedback loops

Plan for continuous improvement and iteration

“The AI PRD isn’t just documentation, it’s a forcing function for thinking through all the ways AI can fail,” I explain to product teams. “Traditional PRDs assume deterministic behavior. AI PRDs assume probabilistic behavior and plan accordingly.”

The key insight is that AI products require dual success metrics: traditional user metrics (engagement, retention, conversion) and AI-specific metrics (accuracy, hallucination rates, response quality). You need both to achieve true PMF.
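As an illustration of dual success metrics, the sketch below computes two AI metrics (accuracy and hallucination rate over a labeled evaluation set) and two user metrics (week-4 retention and trial conversion), then checks both groups against thresholds. The record types, metric definitions, threshold values, and sample numbers are hypothetical assumptions; substitute whatever your own PRD defines.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    correct: bool       # output judged correct against the eval set's reference
    hallucinated: bool  # output asserted something unsupported by the source data


@dataclass
class UserStats:
    active_week_1: int  # users active in their first week
    active_week_4: int  # of those, users still active in week 4
    trials: int
    conversions: int


def ai_metrics(results):
    """AI-specific metrics over a labeled evaluation set."""
    n = len(results)
    return {
        "accuracy": sum(r.correct for r in results) / n,
        "hallucination_rate": sum(r.hallucinated for r in results) / n,
    }


def user_metrics(stats):
    """Traditional product metrics."""
    return {
        "week4_retention": stats.active_week_4 / stats.active_week_1,
        "conversion": stats.conversions / stats.trials,
    }


# Hypothetical thresholds; set your own in the PRD's success-metrics section.
THRESHOLDS = {"accuracy": 0.90, "hallucination_rate": 0.02,
              "week4_retention": 0.40, "conversion": 0.05}


def meets_dual_bar(ai, user):
    """True only if BOTH the AI metrics and the user metrics clear their bars."""
    return (ai["accuracy"] >= THRESHOLDS["accuracy"]
            and ai["hallucination_rate"] <= THRESHOLDS["hallucination_rate"]
            and user["week4_retention"] >= THRESHOLDS["week4_retention"]
            and user["conversion"] >= THRESHOLDS["conversion"])


# Illustrative data: 19 correct answers, 1 hallucinated answer.
results = [EvalResult(correct=True, hallucinated=False)] * 19 + [
    EvalResult(correct=False, hallucinated=True)]
stats = UserStats(active_week_1=1000, active_week_4=450, trials=500, conversions=40)

ai = ai_metrics(results)
user = user_metrics(stats)
print(meets_dual_bar(ai, user))  # False: hallucination rate 0.05 exceeds 0.02
```

The example is deliberately lopsided: retention and conversion clear the bar, but the hallucination rate does not, so the product fails the combined check.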

Phase 3: Scale with Strategic Frameworks

Most AI startups hit a wall when they try to scale. Their MVP works beautifully for early adopters, but broader market adoption stalls. This happens because they haven’t thought strategically about their launch readiness across all dimensions.

Scaling an AI product isn’t just about handling more users—it’s about maintaining AI performance at scale, managing data quality across diverse use cases, and ensuring consistent experiences as your model encounters edge cases.

The Launch Strategy Canvas for AI Products

Before scaling any AI product, you need to assess your readiness across four critical dimensions, all reflected in the AI Launch Strategy Canvas template we cover in our AI Product Management Certification class:

Customer Readiness:

Segment size and growth rate in your target market

Customer retention and organic usage frequency

Magnitude of pain you’re solving and user willingness to pay

Product Readiness:

Strength of your unfair advantage (data, model, or market access)

Product’s reach and viral potential

Uniqueness of your AI capabilities vs. competition

Company Readiness:

Technical feasibility of scaling your AI infrastructure

Go-to-market viability and sales process validation

Team’s ability to handle rapid growth and AI complexities

Competition Readiness:

Number and strength of competitors in your space

Barriers to entry for new AI-powered competitors

Supplier power (dependence on model providers like OpenAI)

Each dimension gets scored on a green-yellow-red scale. You only scale when all four are green. This prevents the premature scaling that kills so many AI startups.
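The canvas scoring rule can be sketched in a few lines of Python. The green-yellow-red ratings per dimension and the “all four green” rule come from the canvas itself; how individual factor ratings roll up into a dimension rating is not specified, so this sketch assumes a dimension is only as strong as its weakest factor. The factor names and ratings in the example are hypothetical.

```python
from enum import IntEnum


class Rating(IntEnum):
    RED = 0
    YELLOW = 1
    GREEN = 2


def dimension_rating(factor_ratings):
    """Assumption: a dimension is rated by its weakest factor."""
    return min(factor_ratings.values())


def ready_to_scale(canvas):
    """Scale only when all four dimensions are green; also report blockers."""
    ratings = {dim: dimension_rating(factors) for dim, factors in canvas.items()}
    blockers = [dim for dim, r in ratings.items() if r != Rating.GREEN]
    return not blockers, ratings, blockers


G, Y = Rating.GREEN, Rating.YELLOW
# Hypothetical assessment: heavy dependence on a single model provider
# leaves supplier power at yellow.
canvas = {
    "customer": {"segment_size_growth": G, "retention_usage": G, "pain_and_wtp": G},
    "product": {"unfair_advantage": G, "reach_virality": G, "ai_uniqueness": G},
    "company": {"infra_feasibility": G, "gtm_viability": G, "team_readiness": G},
    "competition": {"competitor_strength": G, "barriers_to_entry": G, "supplier_power": Y},
}

ok, ratings, blockers = ready_to_scale(canvas)
print(ok, blockers)  # False ['competition']
```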

Common Blindspot: The biggest scaling challenge for AI products isn’t technical—it’s maintaining quality as you encounter more diverse use cases. Your AI might work perfectly for your initial users but fail spectacularly when new users bring different contexts, vocabularies, or expectations.
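One way to catch this early is to track quality per user segment rather than as a single global number. This minimal sketch assumes you have labeled samples of production outputs, each tagged with a segment (for example, industry or use case), and flags segments whose accuracy falls more than a margin below the baseline set by your initial users. The segment names, `margin`, and `min_samples` values are hypothetical.

```python
from collections import defaultdict


def segment_report(records):
    """records: iterable of (segment, correct) pairs from labeled samples.

    Returns {segment: (accuracy, sample_count)}.
    """
    counts = defaultdict(lambda: [0, 0])  # segment -> [correct, total]
    for segment, correct in records:
        counts[segment][0] += int(correct)
        counts[segment][1] += 1
    return {seg: (c / n, n) for seg, (c, n) in counts.items()}


def flag_segments(report, baseline, margin=0.05, min_samples=30):
    """Segments with enough samples whose accuracy is below baseline - margin."""
    return sorted(seg for seg, (acc, n) in report.items()
                  if n >= min_samples and acc < baseline - margin)


# Illustrative labeled samples (made-up numbers).
records = ([("early_adopters", True)] * 92 + [("early_adopters", False)] * 8
           + [("legal_teams", True)] * 30 + [("legal_teams", False)] * 20
           + [("retail", True)] * 5 + [("retail", False)] * 5)

report = segment_report(records)
baseline = report["early_adopters"][0]  # accuracy for the initial users: 0.92
print(flag_segments(report, baseline))  # ['legal_teams']
```

The `min_samples` guard keeps small segments (here, `retail`, with only 10 samples) from being flagged on noise alone.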

Phase 4: Optimize for Sustainable Growth

The final phase is where truly successful AI products separate themselves from the pack. This isn’t about growth hacking—it’s about building sustainable growth loops that make your AI better over time.

Traditional products optimize for conversion funnels and user engagement. AI products must also optimize for model performance, data quality, and user trust. This creates a unique opportunity: AI products can actually get better for existing users as they acquire new users.

The AI Growth Framework:

Data Network Effects: Every user interaction makes your AI smarter for all users

Implement feedback loops that improve model performance

Use user corrections to fine-tune responses

Build systems that learn from successful user outcomes

Intelligence Moats: Your AI’s performance becomes your competitive advantage

Develop proprietary datasets that competitors can’t replicate

Create AI workflows that are uniquely valuable in your domain

Build user interfaces that make your AI’s capabilities more accessible

Trust Compounding: User confidence in your AI drives organic growth

Maintain consistent quality standards as you scale

Provide clear explanations for AI decisions

Handle edge cases gracefully and transparently
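To illustrate the data network effects loop (turning user corrections and successful outcomes into training data), here is a hypothetical Python sketch. It assumes each interaction is logged with the prompt, the model’s output, an optional user correction, and a review flag, and it emits chat-style records (`system`/`user`/`assistant` messages) of the kind commonly used for fine-tuning. The `Feedback` type and helper names are illustrative; check your model provider’s documentation for its exact required format before uploading anything.

```python
from __future__ import annotations

import json
from dataclasses import dataclass


@dataclass
class Feedback:
    prompt: str
    model_output: str
    user_correction: str | None  # None when the user accepted the output as-is
    approved: bool               # passed human review or an automated quality check


def to_training_examples(feedback, system_prompt):
    """Turn approved corrections and accepted outputs into chat-style examples."""
    seen = set()
    examples = []
    for fb in feedback:
        if not fb.approved:
            continue  # never train on unreviewed or rejected feedback
        target = fb.user_correction or fb.model_output
        key = (fb.prompt, target)
        if key in seen:
            continue  # drop exact duplicates
        seen.add(key)
        examples.append({"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": fb.prompt},
            {"role": "assistant", "content": target},
        ]})
    return examples


def write_jsonl(examples, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for ex in examples:
            f.write(json.dumps(ex) + "\n")


# Illustrative log entries.
feedback = [
    Feedback("Summarize invoice #1", "Total: $40", "Total: $45 (incl. tax)", True),
    Feedback("Summarize invoice #2", "Total: $12", None, True),
    Feedback("Summarize invoice #3", "Total: $99", "asdf", False),
]
examples = to_training_examples(feedback, "You summarize invoices.")
print(len(examples))  # 2
```

The review flag is the important design choice here: feeding raw user edits straight back into training can amplify mistakes as easily as it fixes them.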

“The most successful AI products I’ve seen don’t just solve problems—they get smarter at solving problems over time,” I often tell founders. “That’s your ultimate competitive moat.” AI products that achieve true PMF create compounding advantages that traditional software simply can’t match.

Every user interaction improves your model. Every edge case you handle makes your AI more robust. Every successful outcome strengthens user trust and drives organic growth. This is why AI PMF, when done right, can create nearly unassailable competitive positions.

“The companies that master AI PMF won’t just win their initial markets,” I predict. “They’ll expand into adjacent markets faster than any traditional software company ever could, because their AI gets smarter across domains.”

Concluding Thoughts

Achieving Product-Market Fit in the age of AI requires new frameworks, new metrics, and new ways of thinking about user value. The traditional playbooks aren’t just outdated—they’re counterproductive.

The founders who master these new approaches will build the defining companies of the next decade. Those who don’t will find themselves consistently outmaneuvered by competitors who understand how to harness AI’s unique properties for sustainable competitive advantage.

The frameworks I’ve outlined here, from AI-native opportunity spotting through systematic optimization, represent the distilled lessons from hundreds of AI product launches. They’re not theoretical; they’re battle-tested by teams building the AI products that are reshaping entire industries.

Every month, I watch another “AI-powered” startup fail because it applied yesterday’s PMF playbook to tomorrow’s technology. The winners aren’t the ones with the best models; they’re the ones who understand that AI PMF is a fundamentally different game with fundamentally different rules.

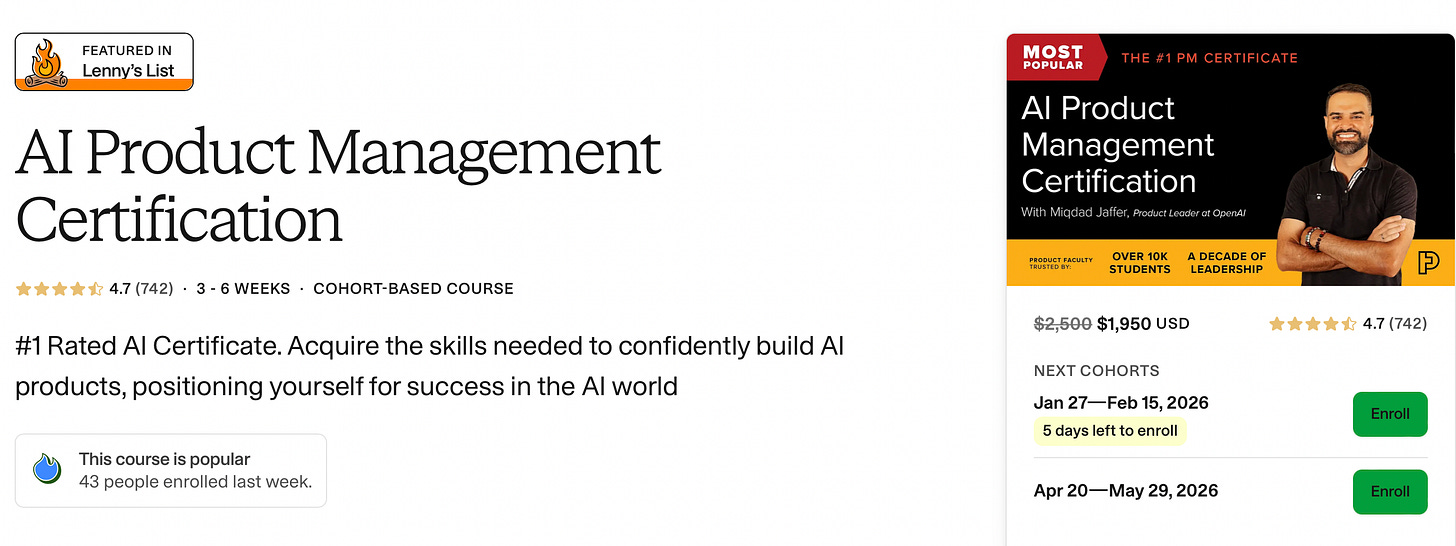

Thanks to Product Faculty’s #1 AI Product Management Certification for making this deep dive free for everyone!

Inside the cohort, you’ll:

1. Master the full AI product lifecycle: Discover → Design → Develop → Deploy

2. Learn RAG, fine-tuning, evals, and agentic AI

3. Build scalable, production-ready AI products, not just basic prototypes

4. Join 3,000+ AI PMs who have already graduated

5. Learn in a course with 740+ reviews, the highest on Maven

Click here to enroll and get $500 off.

If you missed the last newsletter, read it below:

The AI Product Builder’s Canon: The New Laws of Building AI Products — The 101 guide that explains the real machinery behind LLMs, diffusion models, embeddings, planning systems, autonomy, and the rise of a new kind of product management (and what you need to do).
