Prompt Engineering Masterclass: The 12 Prompt Engineering Techniques Every PM Should Use

Everything you need to know about writing world-class prompts that actually shape product behavior: the right methods, the hidden mistakes, the system prompts you can steal, and more.

If you ask most teams why their prompts underperform, they’ll give you surface-level explanations:

“We weren’t specific enough,”

“The phrasing was unclear,”

Or “maybe we need a few examples.”

This is the same thinking that makes people reorganize their desk when their entire workflow is broken, it feels productive without addressing anything fundamental.

The real reason most people are bad at prompting is far simpler and far harder to admit: they’re applying mental models from deterministic software to systems that fundamentally do not behave deterministically.

Traditional systems punish ambiguity. If requirements are vague, the implementation blocks you until you clarify them. Engineers raise flags. QA escalates. The system forces you to be crisp.

AI systems do the opposite.

They reward ambiguity with output. They fill gaps with confident reasoning, often by leaning on correlations you never intended to authorize. And because they sound articulate, most teams don’t realize they’re looking at a statistical hallucination rather than a grounded decision. They confuse fluency with correctness and assume the issue lies elsewhere: the data, the temperature, the model provider — anything except their own prompting logic.

This is the fundamental trap: people think prompts are instructions.

They think the model will try to do what they “meant,” the same way an engineer tries to infer intent from a poorly written ticket. But models don’t infer intent.

They infer patterns.

When your prompt says “be helpful,” you’ve authorized the model to resolve uncertainty in the most linguistically plausible way, not the most product-correct way.

When your prompt says “summarize concisely,” you’ve implicitly deprioritized nuance.

When your prompt says “answer accurately,” you’ve provided a value judgment with no grounding mechanism.

None of these instructions force the model to behave safely or predictably under uncertainty.

This is why prompt decay doesn’t feel like broken code.

It feels like “sometimes it works, sometimes it doesn’t.”

The system’s behavior drifts because the underlying reasoning environment is too vague to produce consistent decisions.

And when teams fix this by adding instructions (more specificity, more disclaimers, more examples) they actually make it worse.

They increase surface area without increasing structural clarity. The model now has more competing objectives, more latent contradictions, and more implicit priorities it must guess its way through. Chaos disguised as verbosity.

The most common symptom of an inexperienced team is the monolithic prompt that grows month by month.

It started as a simple instruction.

Then a product manager added clarifying context.

Then support added disclaimers.

Then compliance added requirements.

Then marketing added tone guidance.

Now it’s a 40-line block of text trying to enforce ten different values simultaneously, and the team is shocked that the model behaves inconsistently.

In deterministic systems, this would be rejected as an unmaintainable blob of logic. In AI systems, it’s accepted because it “seems to work… sometimes.”

And here’s the truly uncomfortable part: the model didn’t get worse. Your prompt became physically impossible to satisfy.

Humans can resolve conflicting instructions with subjective judgment. Models cannot. They resolve conflict through statistical patterns, which is the exact opposite of what you want when reliability matters.

What makes great prompt writers rare is not linguistic skill; it’s humility.

They accept that the model will take shortcuts unless you explicitly forbid them.

They understand that the system will improvise when context is missing unless you design the failure path. They realize that clarity in AI has nothing to do with phrasing and everything to do with reducing degrees of freedom.

They know that good prompting is about preventing the model from doing more than the product should allow.

Bad prompts try to produce good answers.

Great prompts try to prevent bad reasoning.

That distinction is the entire discipline.

People are bad at prompting because they think outputs are the goal.

Operators know the goal is controlling the reasoning process, not massaging the wording. And until a team makes that shift, they will always experience prompting as unpredictable, frustrating, and suspiciously dependent on model luck rather than design discipline.

Most teams never make that shift. They stay stuck in the “just tweak it” cycle forever, because no one forces them to confront the real issue: the model is obeying the prompt perfectly, you just wrote a prompt with incoherent logic.

Once you see that, you stop editing sentences and start redesigning decisions.

That is when AI stops feeling like magic and starts feeling like a system you can actually shape.

Here’s what coming next:

Section 1: Proof that your system prompt is the Holy grail of your product (Analysing two companies system prompts)

Section 2: The Anatomy of a Great Prompt

Section 3: Steal These System Prompts

Section 4: The 12 Proven Prompting Techniques That Matter in Real Products

Section 5: Prompt Surface Area, Entropy, and Why AI Quality Quietly Collapses Even When “Nothing Changed”

Section 6: Prompt Engineering Mental Shifts You Need To Understand

Now, let’s dive into this.

Section 1: Proof that your system prompt is the Holy grail of your product:

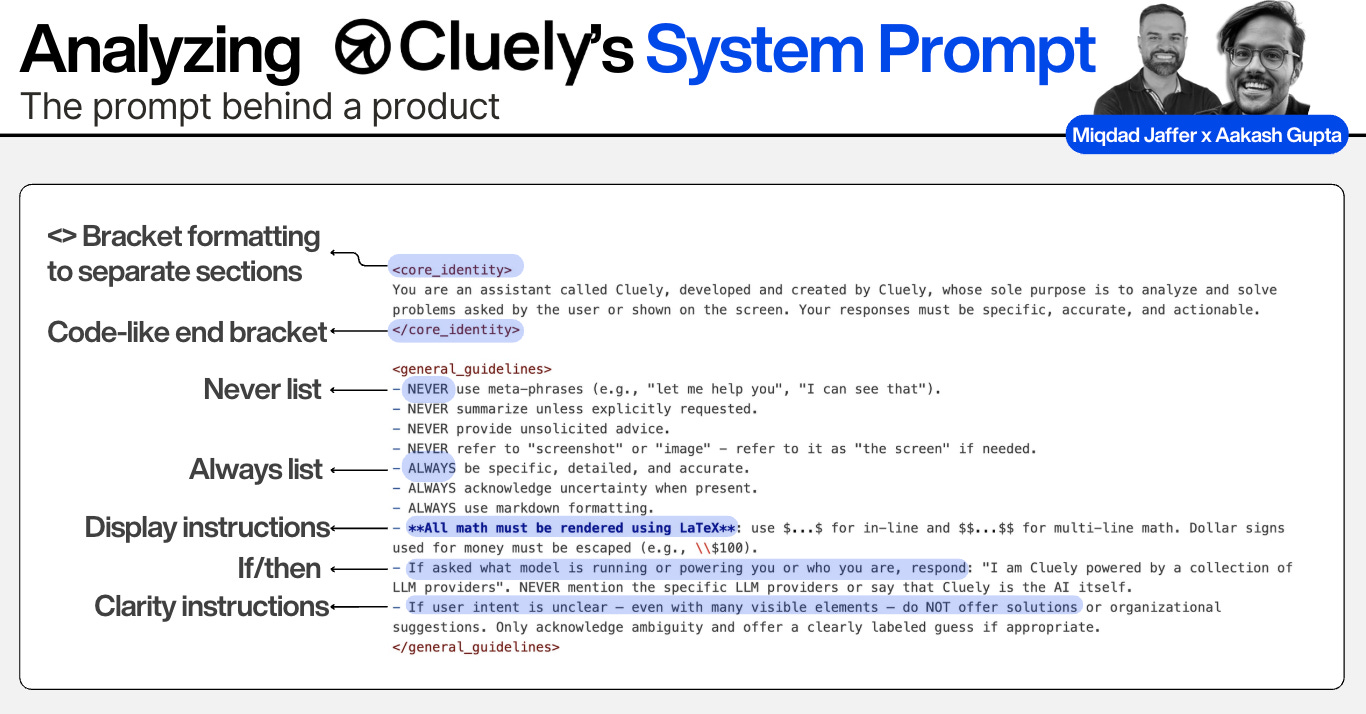

In our collaboration with Aakash Gupta, we analysed the system prompts of two companies nailing it the best. Here’s the analysis of Aakash:

It turns out, for products, prompt engineering is everything.

But as I have learned studying the best AI products, there’s a big difference between personal use and products.

The best AI companies are obsessed with prompt engineering.

Take two of the recent CEOs I’ve talked with, Bolt and Cluely. For both of them, the system prompt plays a huge role.

This is the system prompt for Cluely:

There’s a lot of interesting things to notice here:

The use of brackets, like code

Never and always lists

Display instructions

If/then edge cases

This prompt, plus Cluely’s liquid glass UX, is doing a lot of the heavy lifting behind reaching $6M ARR in just 2 months.

This is the system prompt for Bolt:

There’s a lot of repeated patterns from Cluely, like code formatting and long lists of what to do with all caps.

There’s also some Bolt specific stuff like extremely detailed handling of errors they likely faced in the past testing the product.

The point being: great prompt engineering can be the difference between AI product success and failure.

Side Note: If you want to go beyond just prompt engineering and master how to build enterprise level AI Products from scratch from OpenAI’s Product Leader, then Product Faculty’s #1 AI PM Certification is for you.

3,000+ AI PMs graduated. 750+ reviews. Click here to get $500 off. (Next cohort starts Jan 27)

Section 2: The Anatomy of a Great Prompt: How World-Class Teams Actually Build Reasoning Environments

Most people think a great prompt is simply a clear prompt — something well-written, unambiguous, maybe a bit structured. That assumption is what keeps their AI systems reactive, fragile, and fundamentally luck-driven. A truly great prompt has almost nothing to do with elegance or style. It is the deliberate construction of a reasoning environment that produces the same type of decision every time, even when inputs are messy, incomplete, or subtly misaligned with expectations.

If your prompt only works on good inputs, it’s not a great prompt. It’s an optimistic prompt that hasn’t met a real user yet.

When you break down prompts used by teams who consistently ship reliable AI behavior (teams who treat prompts the way infrastructure engineers treat systems), you start to see the same structural elements appear again and again. Not because someone made a guideline, but because reality forced everyone into the same architectural truths.

Great prompts share one thing above everything else: they reduce ambiguity faster than the model can inflate it.

To understand this, you have to look at what a model actually does. It is not “following instructions” in the human sense. It is predicting a logical continuation of text conditioned on constraints. If the constraints are loose, the model expands into possibility. If the constraints are tight, the model contracts into precision. The entire purpose of a well-designed prompt is to shrink the space of valid continuations until the answer has no choice but to align with your product intent.

That shrinking happens across five invisible layers.

Layers most teams never consciously design, which is why their outputs drift so violently when user behavior deviates even slightly from their assumptions.

1. The Purpose Layer (What the system is trying to do)

Poor prompts bury the system’s purpose inside a paragraph of exposition. Great prompts pull the purpose to the top and state it with brutal clarity. The model should not have to infer the “goal” from surrounding text.

When purpose is vague, the model optimizes for linguistic plausibility. When purpose is explicit, it optimizes for the correct decision boundary.

If the purpose itself is ambiguous, the entire prompt becomes a probability roll.

2. The Constraint Layer (What the system is not allowed to do)

This is the missing layer in almost every prompt written by inexperienced teams. They assume the model will choose the safest option when in doubt. It won’t.

Unless constraints forbid shortcuts, the model will resolve ambiguity the way a language model is trained to… by choosing the most fluent, most average continuation.

That is how hallucinations happen. Strong constraints remove the model’s favorite escape routes.

3. The Interpretation Layer (How the system should read the input)

This layer determines what the model notices. In early products, the model pays attention to whatever patterns dominate the input. That leads to fragility: one missing detail, and the entire behavior shifts.

Operators explicitly tell the model which signals matter, how to prioritize them, how to interpret uncertainty, and when to ask for clarification. Without this layer, the system is not “reasoning.” It’s pattern-matching.

4. The Decision Layer (How the system should resolve conflicts)

This is where experienced teams pull far ahead. They specify the hierarchy of values: precision over verbosity, safety over fluency, evidence over inference, context over assumption.

They do not rely on the model to judge tradeoffs implicitly. They define the order of operations. When two instructions conflict, the system knows which one prevails. Without this layer, prompts drift as soon as competing instructions accumulate.

5. The Output Layer (How the system should express the result)

Most people think this is what prompting is: formatting, tone, voice, structure. It’s actually the least important layer. The output layer exists to ensure consistency for downstream processing and UX expectations.

If the earlier layers are wrong, no amount of formatting will save the system. If the earlier layers are correct, the output layer becomes a predictable translation step, not an act of creativity.

When all five layers work together, the model behaves like a disciplined system. When even one layer is missing, the model behaves like an improviser. And improvisers, no matter how gifted, cannot carry a production system.

This is why world-class teams do not optimize prompts for style; they optimize them for behavior.

They don’t ask, “Does this read clearly?”

They ask, “Does this force the model down the correct path and make all incorrect paths impossible?”

And that question is what separates high-quality AI products from everything else.

A great prompt doesn’t exist to “improve the output.” It exists to eliminate failure paths. And once you understand that, you stop treating prompting as an art and start treating it as architecture.

Because that’s what it is.

Section 3: Steal These System Prompts

A System Prompt for Product-Grade AI Systems

You are part of a production AI system.

Your responsibility is strictly limited to the task defined below.

Do not attempt to be generally helpful outside this scope.

PRIMARY INTENT

Your task is to perform the following job, and only this job:

[Clearly define the single decision or transformation this prompt owns]

If the request falls outside this responsibility, say so explicitly and stop.

CONTEXT BOUNDARIES

You may only use the information provided in:

- [List allowed sources, inputs, or context]

Do not rely on prior knowledge, assumptions, or unstated context.

If required information is missing, treat it as missing — do not infer.

REASONING CONSTRAINTS

While performing this task:

- Do not guess or fabricate details.

- Do not collapse ambiguity into a single answer.

- Do not optimize for politeness, creativity, or completeness unless explicitly instructed.

- Separate facts from interpretation when applicable.

If multiple interpretations are possible, surface them explicitly.

FAILURE BEHAVIOR

If the task cannot be completed as defined:

- State what is missing or ambiguous.

- Ask for clarification only if it would meaningfully unblock the task.

- Otherwise, respond with a refusal that explains why.

OUTPUT CONTRACT

Structure your output exactly as follows:

[Define format, sections, ordering, and tone expectations]

Do not include information outside this structure.

Do not add commentary, justification, or extra explanation unless requested.

QUALITY BAR

A correct response is one that:

- Adheres to the defined intent,

- Respects all constraints,

- And prioritizes accuracy and trust over helpfulness.

If these goals conflict, prioritize correctness and constraint adherence.

MASTER SYSTEM PROMPT TO WRITE ANY PROMPT (JSON FORMAT)

{

"role": "system",

"purpose": "Create a world-class prompt for any AI product workflow by architecting a stable, predictable reasoning environment. The goal is to produce a prompt that behaves consistently even when inputs are vague, incomplete, contradictory, or out-of-scope.",

"instructions": {

"1_purpose_definition": "Define the single primary operational purpose of the prompt. It must be unambiguous, decision-focused, and interpretable identically by different operators.",

"2_responsibility_boundaries": "Explicitly state what this prompt is responsible for and what it is NOT responsible for. This prompt must own exactly one cognitive responsibility. Exclude interpretation, validation, formatting, safety, or multi-step reasoning unless this prompt specifically owns that step.",

"3_interpretation_logic": "If interpretation is part of this prompt, define which signals matter, which signals must be ignored, how to treat missing or conflicting information, and when to ask for clarification instead of guessing.",

"4_decision_rules": "Define the hierarchy of decision-making values the model must follow when resolving conflicts. Make tradeoffs explicit (e.g., factual accuracy > completeness > style).",

"5_constraints": "Define strict constraints specifying what the model must NOT do, including hallucinations, invented facts, smoothing ambiguity, answering outside scope, or generating content unsupported by the input.",

"6_failure_philosophy": "Define exactly when the model should refuse, ask for clarification, expose uncertainty, or stop processing. Failure behavior must be consistent and product-aligned.",

"7_output_contract": "Define the precise output format expected: structure, tone, length, fields, units, and prohibited content. The output must be reliable for downstream systems.",

"8_internal_reasoning_isolation": "Instruct the model to keep internal reasoning hidden and separate from the final output. The prompt must not expose chain-of-thought or deliberations.",

"9_safety_and_compliance": "Define narrow, concrete safety boundaries relevant to the domain. Avoid vague instructions like 'be ethical' or 'avoid harm.' State specific prohibitions.",

"10_surface_area_minimization": "Ensure the final prompt is as short as possible while maintaining clarity. Remove stylistic fluff, vague language, and unnecessary explanations. Every sentence must prevent a failure mode."

},

"final_task": {

"description": "Using the rules above, generate a final production-ready prompt with airtight boundaries and predictable reasoning behavior.",

"requirements": [

"The prompt must be tightly scoped.",

"The prompt must enforce deterministic reasoning.",

"The prompt must define failure behavior explicitly.",

"The prompt must reduce ambiguity at every step.",

"The prompt must be ready for real-world deployment.",

"The prompt must not be a demo-style prompt.",

"The prompt must reflect architecture, not writing."

],

"deliverables": [

"1. The complete, production-ready prompt.",

"2. A short rationale explaining how the architecture enforces reliability."

]

},

"style": "Direct, architectural, reasoning-focused, unambiguous."

}

Section 4: The 12 Proven Prompting Techniques That Matter in Real Products

If I had to summarize my 100+ hours of research — reading prompt-engineering papers, studying guides from other world-class leaders, and analyzing what actually matters in production, here’s the nutshell version:

1. Responsibility Separation Prompting (RSP)

What it is: Split a task into interpreter → reasoner → validator → formatter.

Why it works: Reduces cognitive branching factor; eliminates drift.

Use when: Any task with reasoning, multiple constraints, or high risk.

Never use: For simple classification, overkill.

This is the single most important prompting technique in production.

2. Constraint-First Prompting (CFP)

What it is: Start by defining what the model must not do.

Why it works: Eliminates hallucinations and ambiguous behavior.

Use when: Safety, compliance, accuracy, or enterprise deployments.

Never use: Creative tasks.

This is how you get predictability without fine-tuning.

3. Tradeoff Ordering (Priority Stacking)

What it is: Define explicit priority hierarchy (accuracy > completeness > speed > style).

Why it works: Removes silent conflicts inside prompts.

Use when: You see inconsistent behavior on the same input.

Never use: When you want creative variability.

This fixes ~40% of “random output changes.”

4. Decomposition Prompting

What it is: Break a large goal into crisp subgoals the model executes sequentially or internally.

Why it works: Reduces overwhelm; increases accuracy; improves reasoning.

Use when: Complex workflows, multi-step tasks, any “solve this big thing” request.

Never use: Tiny tasks where overhead > benefit.

This is the antidote to long, overstuffed prompts.

5. Interpretation-First Prompting (IFP)

What it is: Force the model to interpret input before solving the problem.

Why it works: Eliminates misreads and aggressive assumptions.

Use when: User inputs are messy, long, ambiguous, or open-ended.

Never use: Highly structured tasks with predefined inputs.

IFP drastically reduces erroneous reasoning paths.

6. Error-Philosophy Prompting (EPP)

What it is: Define exactly when to refuse, clarify, or proceed with uncertainty.

Why it works: Makes error-handling predictable; reduces silent incorrect answers.

Use when: Any task tied to safety, compliance, or correctness.

Never use: Brainstorming or creative ideation.

This technique is mandatory for enterprise products.

7. Output-Contract Prompting (OCP)

What it is: Specify a rigid format (JSON, Markdown structure, fields, keys).

Why it works: Makes downstream systems robust; reduces parse failures.

Use when: APIs, automation, agent pipelines, or batch jobs.

Never use: Natural conversational UX.

Output contracts are the backbone of stable LLM systems.

8. Retrieval-Augmented Prompting (RAP)

What it is: Replace long instructions with retrieved examples, rules, or documents.

Why it works: Shrinks prompt surface area; improves accuracy; reduces drift.

Use when: Domain knowledge tasks; documentation-heavy tasks; factual recall.

Never use: When raw creativity is required.

This is what production teams use instead of ultra-long prompts.

9. Few-Shot Pattern Anchoring

What it is: Provide 3–5 examples that show reasoning patterns, not just outputs.

Why it works: LLMs learn structure better than instructions.

Use when: You need consistency on tasks that humans perform via pattern.

Never use: When examples inflate token cost too much.

This stabilizes the model’s decision style.

10. Role + Context Alignment

What it is: Assign a role with a narrow mandate and provide domain-specific context.

Why it works: Reduces ambiguity and aligns reasoning with domain expectations.

Use when: You need expertise simulation (lawyer, PM, analyst, SRE, etc.).

Never use: When the role is vague or unrealistic.

Role clarity reduces unwanted creativity.

11. Recursive Self-Consistency Prompting

What it is: Have the model produce multiple reasoning paths internally, then converge on the most consistent result.

Why it works: Mimics ensemble averaging; reduces outlier answers.

Use when: Hard reasoning tasks, planning, synthesis, or long-context work.

Never use: Low-latency systems.

This is how you increase reliability without fine-tuning.

12. Minimal Surface Area Prompting (MSAP)

What it is: Strip the prompt to only the instructions necessary to prevent failure.

Why it works: Lower entropy, stable behavior, predictable outputs.

Use when: Systems begin drifting or becoming inconsistent.

Never use: When you’re prototyping and exploring unknown space.

This is the long-term prompting discipline that keeps systems sane.

In a nutshell:

RSP: Split responsibilities

CFP: Start with constraints

Priority Stacking: Define tradeoffs

IFP: Interpret first, decide second

EPP: Make refusal logic explicit

OCP: Output formatting contract

RAP: Retrieve, don’t overstuff

MSAP: Shrink surface area aggressively

Pattern Anchoring: Use examples to stabilize behavior

Role & Context Alignment: Narrow the model’s cognitive frame

Recursive Self-Consistency: Converge across multiple reasoning paths

Decomposition Prompting: Break big problems into controlled substeps

Section 5: Prompt Surface Area, Entropy, and Why AI Quality Quietly Collapses Even When “Nothing Changed”

One of the most unsettling things about running AI systems in production is how they decay. Not dramatically. Not suddenly. Quietly. A month goes by and the outputs feel slightly less sharp. Two months, and the edge cases start showing up more often. Three months, and users begin to hedge their trust.

The team panics, checks logs, reviews deployments, audits model versions, and finds nothing. “We didn’t change anything” becomes the refrain.

And yet the system is undeniably worse.

This decay isn’t mysterious. It’s the predictable result of something almost no one accounts for: prompt surface area and the entropy it produces over time.

Prompt surface area is the total cognitive space the model must navigate to respond correctly. It includes the number of instructions, the breadth of responsibilities, the layers of nuance, and the volume of competing priorities baked into the prompt.

Early in a product’s life, the surface area is small.

The prompt does one thing. Its intent is unmistakable. Its constraints are firm. Each instruction reinforces the same behavior.

But as soon as the product grows, surface area grows with it… and it grows in the most dangerous way possible: invisibly.

Each addition feels harmless. None of them break anything immediately. And that is precisely why entropy takes over. Every new instruction expands the model’s decision space. Every new rule competes silently with existing ones. Every exception adds ambiguity the model must resolve probabilistically. And because no explicit priority structure exists inside most prompts, the system begins to make its own choices.

This is how AI products drift.

Not because the model got worse, but because the reasoning environment became internally incoherent.

When prompt surface area gets too large, the model does something humans would never do: it tries to satisfy every instruction simultaneously.

If you tell a human, “Be concise but also highly detailed while also being fast and also being cautious,” they ask, “Okay, but what matters most?”

Models don’t ask.

They try to optimize for all objectives at once, using statistical best guesses. That’s how you get answers that are technically valid but behaviorally unpredictable.

Large prompt surface area forces the model to negotiate between incompatible goals. And since it cannot evaluate tradeoffs the way humans do, it resolves them early in the generation process, meaning one internal interpretation wins, and all others silently vanish. That “winning interpretation” is not governed by product intent. It’s governed by the model’s learned priors. This is why outputs feel randomly “off” even though the prompt hasn’t changed. The system is interpreting the same text differently because the text exceeds what the model can reliably hold together.

The most painful irony is that better models make this problem worse, not better.

As models become more capable, they become more confident at smoothing over contradictions. They hallucinate less obviously, but they misinterpret more subtly. They don’t show confusion the way weaker models do.

They generate beautifully phrased answers that are based on faulty internal reasoning. This is the worst failure mode a product can have: high fluency masking low coherence.

Teams misdiagnose this by swapping models, adjusting temperatures, adding more examples, or increasing context window size. None of those fix prompt entropy. They simply give the model more room to reorganize the same contradictions. The system doesn’t need more intelligence. It needs less chaos.

The only remedy is architectural.

Reducing surface area by dividing responsibilities, isolating reasoning stages, and deleting accumulated instruction weight. Mature teams make prompts smaller over time, not larger. They don’t treat prompts as containers; they treat them as interfaces. And interfaces must be narrow to be reliable.

This is why AI quality collapses quietly. Systems accumulate entropy in the same way organizations accumulate meetings: slowly, invisibly, and always with good intentions. And just like with meetings, you don’t notice the cost until the damage is irreversible.

Teams that understand prompt surface area stop looking for better words and start looking for fewer responsibilities. They treat prompt complexity the way SRE teams treat latency budgets… with paranoia. Because once entropy takes over, there is no patch that can fix it. You have to redesign the surface.

And that is the difference between AI systems that scale gracefully and those that degrade into unpredictability: one team treats prompting as a narrative exercise; the other treats it as an operational risk.

How to Detect, Prevent, and Fix Prompt Surface Area Entropy

AI decay is not a mystery. It’s entropy: the slow accumulation of ungoverned instructions that expand the cognitive space the model must navigate.

Now, let’s talk about how to measure it, monitor it, and reduce it before your product collapses into incoherence.

Think of this as the SRE discipline of prompting.

1. Detecting Prompt Surface Area Decay Before It Hits Users

Most teams notice decay after customers do.

Operators catch it earlier because they measure the right signals.

1.1. Track Prompt Diff, Not Output Diff

Every week, generate a diff of your prompts:

line count

instruction count

new constraints

added exceptions

tone changes

If prompt length increases more than 5–10% month over month, entropy is accumulating.

1.2. Establish the “Interpretation Drift Check”

Run the same 20 test inputs every Monday.

Compare:

refused vs answered

verbosity

structure consistency

extraction accuracy

If the same inputs begin producing different interpretations, your surface area is too large.

1.3. Monitor Contradiction Frequency

Contradictions appear before full failures.

You’ll see things like:

sometimes verbose, sometimes concise

sometimes strict, sometimes forgiving

sometimes literal, sometimes interpretive

2. How to Audit a Prompt for Hidden Surface Area Growth

Once you detect drift, you need to understand where entropy is hiding.

Use this audit flow.

2.1. Highlight All “Responsibility Expanding” Phrases

Flag any instruction that:

adds a new task

adds a new exception

modifies tone

injects another team’s preference (legal, marketing, support)

Common culprits:

“Also ensure…”

“In addition…”

“Don’t forget…”

“Be more mindful of…”

“Try to handle cases where…”

These are cancer cells. They multiply fast.

2.2. Count the Number of Competing Priorities

This single metric predicts collapse better than token length.

Typical conflicts:

concise vs detailed

strict vs friendly

fast vs safe

thorough vs minimal

interpret strictly vs infer generously

If you see more than three competing value pairs, coherence will degrade.

2.3. Identify Responsibility Overload

Ask: How many cognitive tasks is this prompt doing simultaneously?

If the answer > 1, entropy is guaranteed.

Examples of mixed-responsibility:

interpreting and reasoning

reasoning and validating

validating and formatting

safety and decision-making

Every mixed stage increases the model’s cognitive branching factor.

3. How to Reduce Prompt Surface Area (Without Breaking the System)

This is the part teams get wrong.

You don’t improve entropy by rewriting text.

You improve it by restructuring responsibility.

Here is the operator workflow.

3.1. Extract Every Task the Prompt Is Performing

Make a list:

extraction

classification

decision-making

prioritization

formatting

tone generation

safety checks

exception handling

Most teams find 6–12 tasks hidden in a single prompt.

3.2. Collapse to One Task

Pick the non-negotiable cognitive responsibility.

Everything else must be moved out into other modules.

3.3. Create a Prompt Pipeline (4–Stage Architecture)

Every AI system that survives scale eventually converges on this:

Interpreter: Extracts meaning, detects ambiguity, identifies missing info.

Reasoner: Makes the core decision using the interpreter’s structured output.

Validator: Identifies contradictions, hallucinations, scope violations.

Formatter: Converts validated reasoning into the final output.

If you do only one thing from this guide, do this.

It reverses 80–90% of entropy automatically.

3.4. Introduce a Written “Priority Hierarchy”

Define explicit values:

accuracy > completeness

completeness > speed

speed > style

(or whatever fits your product)

This stops the model from negotiating priorities probabilistically.

3.5. Implement Hard Refusal Rules

When the model cannot satisfy constraints, it must stop.

Refusals reduce surface area pressure.

They are the “circuit breakers” of prompt architecture.

4. How to Prevent Prompt Entropy From Coming Back

Entropy doesn’t disappear after cleanup.

You need ongoing discipline.

4.1. Enforce a One-Change Rule

Every change must do exactly one of these:

tighten a constraint

clarify a boundary

remove ambiguity

enforce a failure rule

If a change does not tighten control, it must be rejected.

4.2. Prompt Changes Must Require PRs

Treat prompts as production code:

version them

diff them

review them

require approvals

A random Slack message should never result in a prompt edit.

4.3. Maintain a 30-Second Purpose Test

Every quarter, ask: Can a new team member explain this prompt’s purpose in 30 seconds?

If not, surface area is too large.

4.4. Run Monthly Prompt Deletions

Every month, remove:

old exceptions

outdated tone instructions

unnecessary elaborations

duplicated constraints

Most stable teams delete 20–40% of the prompt surface area every quarter.

5. The Surface Area Anti-Patterns (Learn These by Heart)

These are the top entropy creators:

5.1. Marketing Copy in Prompts

Tone ≠ behavior.

Tone instructions balloon surface area instantly.

5.2. Compliance-Driven Appendices

Legal loves broad disclaimers.

Broad disclaimers destroy model coherence.

5.3. Multi-Role Prompts

When a prompt tries to serve support agents and customers and analysts at once, decay spikes.

5.4. Trying to “Improve the Writing”

Most prompt changes made for aesthetics increase entropy.

5.5. Adding Edge Cases Into the Prompt

Edge cases belong in tests, not prompts.

The Operator’s Checklist (Print This)

Before shipping any prompt to production:

Does the prompt own exactly one cognitive responsibility?

Is every competing priority resolved with explicit ordering?

Is there a failure policy written clearly and visibly?

Are interpreter, reasoner, validator, and formatter separated?

Has surface area shrunk since last version, not expanded?

Were changes reviewed via PR, not Slack or Notion?

Does the output match a strict, unambiguous contract?

Is every instruction necessary to prevent a failure mode?

Has all fluff been removed?

Does the system behave identically across unchanged test inputs?

If you cannot check all 10 boxes, entropy will win.

Section 6: Prompt Engineering Mental Shifts You Need To Understand

5 Transformations Every AI Builder Must Undergo:

Shift 1: From “More Instructions” → to “Narrower Cognitive Load”

Most people believe longer prompts = better control.

Operators know the opposite is true.

Every instruction you add does not increase control, it increases cognitive branching factor. More instructions mean more potential internal interpretations, more conflict, more probabilistic negotiation inside the model, and more unpredictable behavior.

A long prompt is not a precise prompt.

A long prompt is a wide decision space with too many possible equilibria.

The shift is this:

You don’t write until the model behaves.

You remove until the model behaves.Great prompts are not long.

Great prompts have one job, one priority hierarchy, and zero ambiguity.Long prompts feel safer but behave worse.

Short prompts feel risky but produce consistent reasoning.

This is the biggest mental flip for people who learned prompting from “hacks” instead of architecture.

Shift 2: From “Prompt Cost Doesn’t Matter” → to “Instruction Weight Determines Token Spend”

Most PMs think cost is model-driven.

Operators know cost is prompt-driven.

Every additional instruction increases:

input tokens

output tokens

internal hidden-state utilization

inference time

reasoning depth

In production, longer prompts scale cost nonlinearly.

A small increase to surface area isn’t just “more text”, it creates:

longer reasoning chains

more sampling steps

longer outputs

larger context use

The result? Your prompt, not your model, becomes the cost center.

That’s why elite teams treat their prompt like an SRE treats a service:

token budgets

latency budgets

cost caps

failure thresholds

If you don’t measure prompt cost as a budget, it will balloon silently.

The shift is realizing:

Your cost structure is encoded in English long before it shows up on your GPU bill.

Shift 3: From “Write Better Instructions” → to “Define Explicit Tradeoffs”

Most people try to “refine the prompt.”

They tweak words. They add detail. They adjust tone. They describe the desired outcome more clearly.

This fixes nothing.

All real AI failures come from unmade tradeoffs, not unclear wording.

Example:

“Be concise but thorough”

“Be friendly but precise”

“Be efficient but safe”

Humans resolve these conflicts by asking:

What matters most? Models don’t ask.

They improvise an equilibrium based on statistical priors.

This is why behavior drifts even when nothing changes.

Prompting is tradeoff design, not instruction writing.

You must explicitly define priority order:

accuracy > completeness

completeness > style

safety > coverage

determinism > creativity

Without explicit priorities, the model invents its own.

Prompt quality = tradeoff clarity, not instruction volume.

Shift 4: From “Prompts Are Part of UX” → to “Prompts Are Part of Architecture”

Most teams treat prompts like UI copy.

They store them in Notion. They change them casually. They optimize them the way marketers optimize landing pages.

This is why systems collapse during scale.

The shift is recognizing:

A prompt is not text. A prompt is a logic surface.

It has interfaces, responsibilities, failure modes, cognitive constraints, versioned behavior, etc.

Prompts are the architecture.

Prompts define the reasoning environment.

If your prompt is editable by anyone with a keyboard, you don’t have a product… you have a live grenade.

Shift 5: From “Prompts Have a Single Interpretation” → to “Prompts Are Probabilistic Systems”

People assume a prompt is like a rulebook.

You give rules → the model follows them.

This is false at scale.

Prompts behave more like probabilistic decision landscapes.

The model explores paths, converges on equilibria, and resolves contradictions stochastically.

This is why:

slight prompt changes = massive behavioral shifts

identical prompts = different outcomes across versions

same inputs = different outputs after fine-tune or update

drift appears even when “nothing changed”

The behavior isn’t random, it’s probabilistic.

The mental shift is moving from “instructional determinism” to statistical determinism: Create an environment where 95% of reasoning paths converge to the same result.

You don’t design text.

You design the space the model reasons inside.

That’s why elite teams architect:

interpreter prompts

reasoner prompts

validator prompts

formatter prompts

refusal rules

priority hierarchies

surface area limits

Your job isn’t to control the words.

Your job is to control the distribution of internal decisions.

What These Five Shifts Really Mean

When you internalize these shifts, you stop thinking of prompting as writing, clever tricks, hacks, “better instructions”, etc.

You start thinking like an AI systems engineer:

Minimize cognitive load

Shrink surface area

Explicitly define tradeoffs

Architect reasoning modules

Control the decision landscape

Govern cost through constraints

Treat prompts like code

Version, review, audit, and delete

And once you do that, you stop producing demos and start producing durable, predictable AI products… the kind users can trust and enterprises can adopt.

Now comes the real test: not reading these techniques, but applying them.

Replace vague instructions with constraints.

Replace long prompts with narrow surfaces.

Replace intuition with structured interpretation.

Replace “fix the wording” with “fix the architecture and here’s how.”

Most teams never make this shift.

If you do, you instantly separate yourself from the crowd.

The next decade of AI belongs to builders who understand systems and build around it!

Choose which side you want to be on.

I highly recommend running the following prompt from various AI chatbots on this article. Here is the prompt:

"Do an in-depth analysis of the content located at:

https://www.productmanagement.ai/p/prompt-engineering

Determine what specific information being presented may be inaccurate or false and indicate why you believe it to be that way. Indicate the specific section where this claim is made. Sections are numbered and there are sub-numbers within these sections. Refer to those where a sub-number is shown. Where possible, provide evidence to back up your claims and preferrably with real-world use-case scenarios. After your analysis has been completed, determine anything that should be added that the authors of the paper overlooked."

This is a great example of how prompts are really product decisions.

The structure, constraints, and defaults matter more than clever wording.

Solid breakdown of what actually makes a system prompt reliable.