The AI Cost Optimisation Playbook Every Product Leader Will Need in 2026

The financial physics behind AI systems and the exact frameworks elite teams use to cut costs 40–90% while improving accuracy.

By Miqdad Jaffer, Product lead @OpenAI.

$29/month does NOT scale when your users start doing 10,000… or 100,000… or 1,000,000 AI actions.

There is a quiet financial crisis unfolding inside every AI team…

Not because people are careless or incompetent, but because AI systems behave in ways traditional product, engineering, and finance teams were never trained to anticipate.

Costs don’t rise steadily the way infrastructure or SaaS usage typically does; they jump, compound, and cascade through the system in unpredictable ways, creating a sense of “invisible leakage” that only becomes obvious once bills cross a painful threshold.

Most leaders feel this long before they understand it:

Inference bills creeping upward every month without clear attribution,

Latency spikes causing sudden drops in conversion,

Prototypes that worked wonderfully in development becoming too expensive to operate at scale,

Teams unable to downgrade models because too much of the system implicitly depends on a specific performance threshold,

CFOs question whether AI is a strategic advantage or an unmanageable cost center.

Yet underneath these symptoms lies a single truth:

AI costs do not scale with user growth.

They scale with system complexity and complexity expands exponentially unless intentionally constrained.

This is the first mental shift product leaders must internalize.

But here’s the uncomfortable truth: You can’t optimize AI costs if you don’t understand how AI actually works at the first-principles level.

You need to know:

how LLMs transform inputs into probabilistic behavior,

how context and retrieval shape system performance,

how latency, routing, and agents add invisible cost multipliers,

and why the same model can cost 5× more based on how you structure the system around it.

Without this foundation, every “cost optimization” becomes guesswork and guesswork is exactly how AI roadmaps collapse in production.

This is why inside our #1 AI Product Management Certification, we don’t teach prompts or hype.

We teach real technical mastery and the enterprise-grade system design needed to build AI products that scale without breaking.

You learn how to go from zero to building production-quality AI systems… with rigor, with confidence, and without the classic mistakes that kill 90% of AI initiatives.

If you’d love to join 3,000+ alumni learning directly from OpenAI’s Product Leader, you can enroll here with $500 off (limited): Click here.

You can also scroll down and read 750+ reviews from product builders across world class companies.

(Side note: prices increase in 2026.)

Now, let’s dive into the guide.

Section 1 — THE FUNDAMENTALS

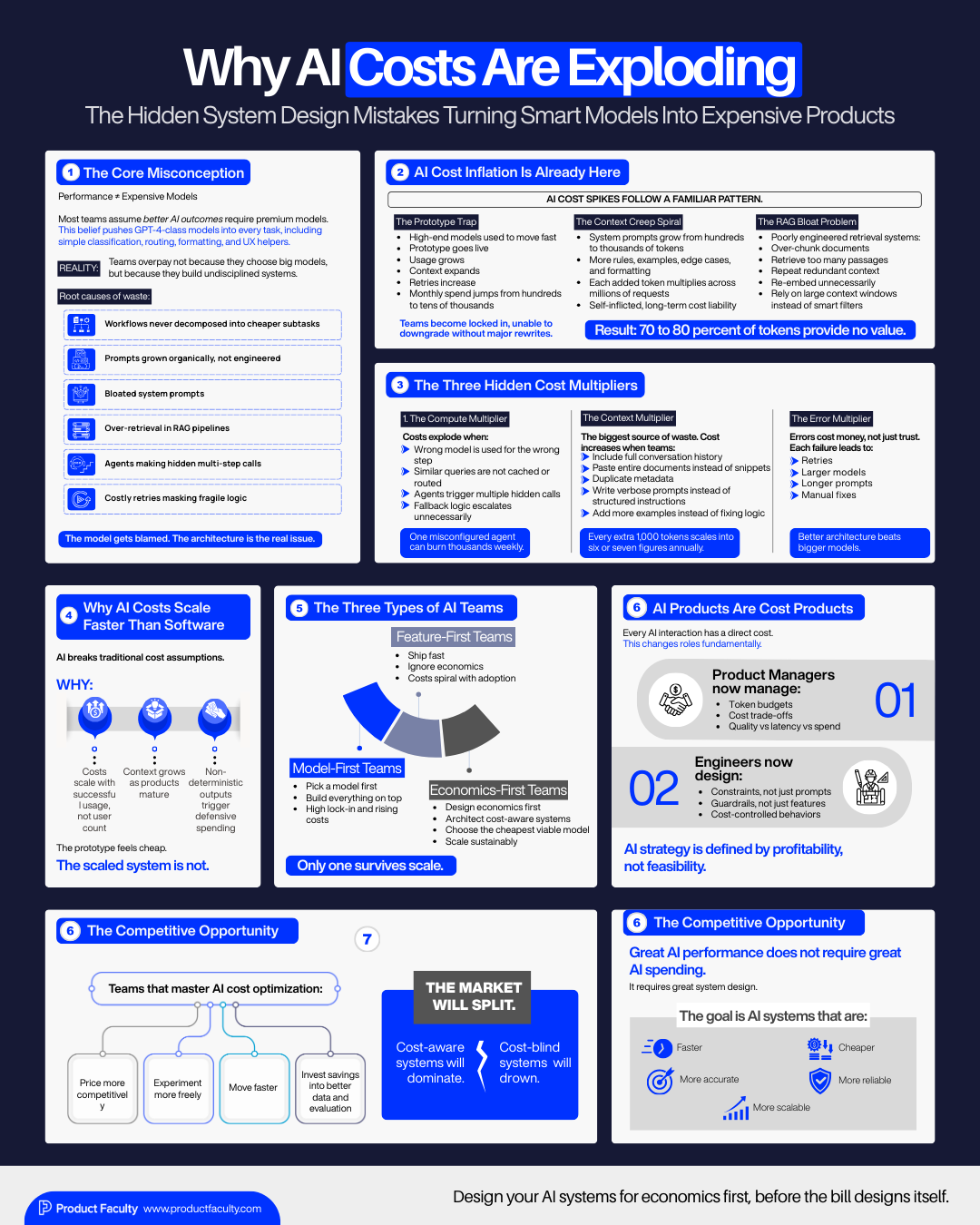

1.1. The Misconception That Fueled Today’s Cost Explosion

Many teams still operate under an intuitive but dangerously incomplete assumption:

“Higher performance requires more expensive models.”

This belief creates a gravitational pull toward using GPT-4-class models for everything: ideation, classification, routing, simple UX helpers, structured transformations, and even tasks that a lightweight open-source model could solve at a tenth of the cost.

The consequence is predictable: teams end up paying premium-model prices for commodity-level tasks, not because the task demands a premium model but because the system was never thoughtfully decomposed in the first place.

Teams don’t overpay because they choose big models.

Teams overpay because they choose undisciplined systems.

Most cost waste originates from:

workflows that were never decomposed into cheaper subtasks,

prompts that grew organically instead of being engineered,

RAG layers that retrieve far more context than necessary,

system prompts that ballooned with every new requirement,

agents that silently call the model multiple times per user action,

fallback chains that mask brittle logic with expensive retries.

The result is almost always the same: the model is blamed, but the architecture is the real problem.

1.2. AI Cost Inflation Isn’t on the Horizon, It’s Already Here

Teams usually discover AI cost inflation the same way people discover credit card debt: slowly, then all at once.

What begins as a harmless prototype using GPT-4 quickly becomes a production system with tens of thousands of daily calls, deeply intertwined logs, dependencies, and user expectations.

Two patterns show up across almost every company I advise:

The Prototype Trap

A team prototypes with a high-end model because it reduces friction and accelerates iteration. They launch. Users love it. Then, silently, the cost curve begins to swell:

the original $200 prototyping bill becomes a $20,000 operational bill,

latency worsens as context windows expand,

usage skyrockets because users rely on the feature more heavily,

retries increase due to edge-case failures,

and within months the team is “locked in” - unable to downgrade without rewriting half the system.

The Context Creep Spiral

Every iteration adds new requirements: tone constraints, safety rules, formatting templates, examples, exception handling, etc.

The system prompt grows from 400 tokens → 2,000 tokens → 4,000 tokens.

That extra context, multiplied across millions of requests, becomes a seven-figure liability… entirely self-inflicted, entirely avoidable.

The RAG Bloat Phenomenon

Retrieval-augmented generation entered the industry as a miracle solution, but poorly engineered RAG layers often account for more waste than the model itself.

Most RAG systems:

chunk documents too aggressively,

retrieve far too many passages,

repeat redundant context,

re-embed on every request,

and rely on large context windows rather than intelligent retrieval filters.

This results in an astonishing scenario: for many products, 70–80% of tokens are unnecessary.

1.3. The Three Hidden Multipliers Driving AI Costs

When I conduct enterprise cost audits, I rarely find a single catastrophic mistake. Instead, I find multiple small inefficiencies that compound: creating a multiplier effect that inflates burn far beyond what the team perceives.

There are three primary multipliers:

1) The Compute Multiplier

Every inference call represents a chain of events: prompt construction, encoding, network traversal, inference, decoding, and sometimes multiple tool calls.

Teams usually optimize one step (the model) while ignoring the others.

This leads to spiraling costs when the wrong model is used for the wrong step, similar queries are not routed or cached, etc.

A single misconfigured agent can quietly burn tens of thousands of dollars in a week.

2) The Context Multiplier

Teams unknowingly inflate cost when they include entire conversation histories rather than distilled memories, paste raw documents instead of selectively retrieved snippets, duplicate metadata, and bunch of other things!

Every additional 1,000 tokens, repeated across millions of calls per month, turns into six- or seven-figure annual waste.

3) The Error Multiplier

AI errors don’t just hurt UX; they burn money.

Every hallucination triggers retries and fallbacks to more expensive models.

Which ultimately means longer context windows for “stability.”

And manual correction work that inflates operational overhead.

Teams often believe they need more examples or a bigger model.

In reality, they need better architecture, confidence scoring, early-exit conditions, routing logic, structured output formats, and domain-specific guardrails.

1.4. Why AI Costs Rise Faster Than Any Technology Before It

Traditional software is inexpensive once built.

SaaS costs follow a neatly predictable curve.

Cloud infrastructure has economies of scale.

But AI systems violate all these assumptions because they bind cost to every single user interaction, not just marginal infrastructure.

Three dynamics make AI uniquely expensive:

Inference costs scale with user success, not user count. A feature that becomes popular automatically becomes expensive.

Context windows expand as products mature. More features → more instructions → more tokens → higher latency.

Every workflow relies on non-deterministic outputs. When accuracy dips, teams compensate with larger models and longer prompts.

This is why early wins in AI often mislead companies.

The prototype is cheap; the scaled system is not.

You’re optimising for what?

1.5. The Three Types of AI Teams (Only One Survives Scaling)

Across hundreds of companies, I’ve found only three operational archetypes:

1) Model-First Teams. They pick a model and build everything on top of it. This creates cost lock-in, inflexible pipelines, and technical debt.

2) Feature-First Teams. They build features before understanding the economics. Everything works beautifully until user demand pushes costs beyond control.

3) Economics-First Teams These are the elite performers. They reverse the order entirely: Design the economics → design the architecture → choose the cheapest viable model → then build the feature.

They scale sustainably because their system is cost-aware at its core, not patched retroactively after numbers begin to hurt.

1.6. The New Reality: AI Products Are Cost Products

This is the sentence most PMs need to hear:

An AI feature is never free. Every click, every query, every step in an agentic workflow costs money… not indirectly, but directly, immediately, and proportionally.

This changes the job of product teams dramatically.

You can consider your PMs as stewards of token budgets and architects of cost-efficient flows.

Your engineers are now:

Writing not just instructions but constraints,

Shaping not deterministic paths but probabilistic boundaries,

Creating not features but cost-controlled behaviors.

AI product strategy is no longer defined by feasibility alone; it is defined by profitability.

1.7. The Opportunity Hidden Inside the Crisis

If this sounds daunting, here’s the good news: companies that master AI cost optimization unlock an advantage that compounds over years.

They can: offer competitive pricing, run more experiments, move faster without financial drag, reallocate savings into better data, better evaluation loops, and more strategic bets, and outperform competitors who simply “throw bigger models” at every problem.

In the next 24 months, we will see a clear separation in the market:

Companies that treat cost as architecture will dominate.

Companies that treat cost as an afterthought will drown.

1.8. The Core Thesis of This Deep Dive

Everything in this newsletter is built around one central idea:

Great AI performance does not require great AI spending.

It requires great system design.

We’re going to explore how to design AI systems that are simultaneously: faster, cheaper, more accurate, more reliable, and more scalable.

Won’t you love that? You’d.

So, let’s dive straight into it.

SECTION 2: The AI Cost Stack: Where Money Actually Burns

Almost every AI team believes they understand their costs, until they actually map them.

What they typically discover is that the model itself is only one slice of the total cost footprint. The real financial pressure comes from the layers surrounding the model: context handling, retrieval architecture, token expansion, agentic loops, error retries, and orchestration logic.

You don’t reduce AI cost by swapping GPT-5.1 for GPT-4.

You reduce AI cost by understanding the entire cost stack and controlling the compounding effects that teams rarely measure.

In this section, we’ll break down the six layers of the AI Cost Stack, explain how each one silently inflates spend, and give you mental models used by great companies.

2.1. Why Most Companies Misdiagnose AI Cost Problems

When a team sees rising inference bills, they instinctively reach for three reactions:

“Maybe we need a smaller model.”

“Maybe we need to call the model less.”

“Maybe we need better caching.”

All three are helpful but incomplete.

The real cause is rarely a single factor. It is almost always the interaction between multiple layers, where inefficiencies compound. Think of it like compound interest — small percentages at each layer create massive downstream increases at scale.

For example:

A bloated system prompt (Layer 2) raises token count.

Poor retrieval logic (Layer 3) adds unnecessary documents to the context.

An overly complex agent (Layer 4) makes multiple calls per task.

A retry loop (Layer 6) doubles or triples cost for failed runs.

When these combine, a “simple $0.04 call” becomes a “$0.40 workflow,” which at enterprise scale becomes a “$4M annual liability.”

This is why we must understand the full stack.

THE SIX-LAYER AI COST STACK

LAYER 1 — Model Costs (The Visible Cost)

What you think you’re paying for… but only 10–20% of total cost in mature systems.

This is the most obvious cost:

the per-token rate of GPT-4o, Claude, Gemini, or your chosen model,

the cost of running open-source models on your own infra,

or the hybrid approach of mixing on-prem and API models.

Most teams believe this layer is where optimization happens.

It is actually where optimization begins. Model pricing is transparent, predictable, and easy to measure, but focusing only here is like trying to lose weight by buying smaller plates.

What truly matters at this layer is:

using the right model for the right task,

decomposing tasks so cheaper models handle the bulk of work,

routing intelligently (cascaded inference),

avoiding premium models unless absolutely necessary,

and running open-source models where latency and control matter more than incremental accuracy.

But again, this is only Layer 1.

The real money burns elsewhere.

LAYER 2 — Token Costs (The Silent Multiplier)

Where 30–60% of cost leaks: unnoticed and unmanaged.

Token cost is where AI systems truly reveal how undisciplined they are. Even if your model price stays constant, token usage can triple due to product decisions that seem harmless in isolation.

There are three categories of token inflation:

1. Input Token Inflation

This comes from:

long system prompts,

verbose instructions,

unnecessary metadata,

overly large retrieval chunks,

full conversation histories repeated in every call.

Teams often discover that each request includes 3–10× more tokens than necessary.

2. Output Token Inflation

Poorly designed prompts lead to:

long rambling responses,

repetitive phrasing,

unnecessary reasoning steps (“think step-by-step”),

verbose safety disclaimers.

3. Hidden Token Inflation

This is what most teams never measure formatting examples, demonstration prompts, few-shot learning examples, chain-of-thought prompts (sometimes >2,000 tokens), invisible intermediate prompts used inside orchestration layers, etc.

Token waste compounds silently, and because tokens are the unit of billing, this layer is one of the most powerful levers for cost reduction.

This is why the best AI teams in the world obsess over context compression and structured prompting.

LAYER 3 — Retrieval Costs (Where Architecture Decides Your Fate)

The difference between a $50,000 annual bill and a $500,000 one.

RAG (retrieval-augmented generation) is extraordinary when engineered well and catastrophic when engineered poorly. The issue is simple:

Most RAG systems retrieve too much, too often, with too little intelligence.

Common mistakes:

Chunk sizes too large → retrieval returns full documents.

Chunk sizes too small → retrieval returns too many small chunks.

No metadata filtering → irrelevant documents flood the context window.

No semantic re-ranking → the model must evaluate 10 irrelevant passages.

No quality scoring → hallucinations increase, leading to retries.

No caching → the same embeddings are produced repeatedly.

Every unnecessary chunk retrieved becomes hundreds or thousands of tokens multiplied across millions of calls.

LAYER 4 — Orchestration & Execution Costs (The Agent Tax)

The layer responsible for 3–15× cost spikes inside agentic workflows.

As companies shift from single-step prompts to multi-step agent systems, they unknowingly introduce a new class of cost multipliers:

1. Multi-step reasoning loops

Agents often call the model repeatedly to: reflect, plan, evaluate, etc.

A “simple” agent workflow might call the model 6–12 times per user request.

2. Tool calls with large contexts

Many tool calls include full prompts, previous steps, etc.

This expands tokens for every step.

3. Overly autonomous agents

Agents without guardrails, confidence bounds, or termination conditions can create runaway loops. One misconfiguration can quietly burn $10,000–$50,000 in a weekend.

4. Poor decomposition

If the task isn’t broken down well, the agent compensates by “thinking more,” which means “spending more.”

This is why the most advanced AI teams build micro-agents: small, single-purpose agents that execute with predictability, minimal tokens, and strict guardrails.

LAYER 5 — Latency Costs (The UX Tax That Becomes an Infra Tax)

Slow systems are expensive systems.

Latency isn’t just a UX problem, it’s an economic problem.

When inference is slow:

more users drop off mid-session (reduced usage = wasted resources),

models retry due to timeout failures (extra cost),

teams compensate by using larger models (higher cost),

products require heavier caching and infra (more cost).

Latency grows with: larger models, larger context windows, etc.

Optimizing latency reduces cost because it forces architectural discipline.

LAYER 6 — Failure & Retry Costs (The Hidden 15–35% of Your Bill)

Where hallucinations become dollars… and sometimes millions.

AI systems fail frequently, and every failure has a cost signature:

1. Model retries: If the output is low-confidence or malformed, the system calls the model again.

2. Fallback to larger models: A common anti-pattern:

Try GPT-3.5 → fail

Retry GPT-3.5 → fail

Escalate to GPT-4 → succeed

Cost = 3× what it needed to be.

3. Human-in-the-loop correction. This is slow, expensive, and often forces the system to overcorrect by expanding instructions to “prevent future errors,” which increases tokens even more.

4. Error cascades in agents. One incorrect step leads to 5–10 subsequent steps trying to fix it. Retries can easily account for 15–35% of total cost, yet almost no teams measure retry inflation as a separate KPI.

The best teams treat “retry cost” as a first-class optimization target.

SECTION 3 — The Four Pillars of AI Cost Optimization

If Section 2 showed where money burns, this section explains how to stop it without: sacrificing accuracy, user experience, or business value.

Most AI teams try to reduce costs tactically: swap models, shorten prompts, add caching, or throttle usage. While these tactics help, they rarely change the long-term economics.

To fundamentally reduce AI cost ( and improve performance) you must redesign the system around four core pillars. These pillars work together, compounding like interest: each one reduces cost on its own, but when applied simultaneously, they reshape the economics of the entire product.

These are the same principles used inside Fortune 50 organizations, high-volume AI products, and agentic systems that process tens of millions of queries per day.

Let’s dive into each pillar deeply.

PILLAR 1 — Context Compression

Reduce 50–90% of tokens without reducing meaning or model quality.

Context is the gravity that shapes AI cost.

If you don’t control context, you don’t control cost.

Simple as that.

Most teams think of prompt length as a writing problem. In reality, it is an architecture problem. One that decides throughput, latency, accuracy, and scalability.

Context compression is not about “cutting things” or making prompts shorter; it is about restructuring information so the model receives only what is necessary for the task, no more and no less.

Here’s how world-class teams achieve this:

1. Hierarchical Context Design

Rather than stuffing everything into one giant prompt, information is layered:

Global rules (rarely change)

Session-level memory (lightweight distilled summaries)

Query-specific context (retrieved on demand)

Structured inputs (schema instead of prose)

This ensures the model sees only the most relevant 2–8% of available information.

2. Structured System Prompts

Long narrative instructions (“Write in this tone… Follow these guidelines… Don’t do X… Make sure to do Y…”) inflate tokens dramatically.

Instead, elite teams rewrite instructions as structured schemas, e.g.:

{

“tone”: “concise, professional”,

“safety”: “no medical advice”,

“format”: [”summary”, “insights”, “actions”],

“rules”: [”no hallucination”, “cite sources”]

}

A 1,200-token narrative compresses to <200 structured tokens with zero loss in performance.

3. Sparse Retrieval (Instead of Dense Retrieval)

Most RAG systems over-retrieve because they rely on embeddings without metadata filtering.

Sparse retrieval improves relevance and drastically reduces context size by:

filtering by metadata before embedding search,

retrieving by semantic intent and structural constraints,

using domain heuristics to prune noisy results.

This often reduces context by 70–90%, increasing accuracy at the same time.

4. Programmatic Summarization

Instead of dumping large docs into context, teams generate ultra-short summaries, distilled insights, etc.

Summaries cost tokens once but save tokens forever.

5. Transformational Compression

This is an advanced technique where instead of “giving the model all the text,” you convert the text into a structure the model understands more efficiently.

Examples:

turning long paragraphs into JSON objects,

converting transcripts into fact tables,

converting tasks into plans.

This transforms 3,000–6,000 tokens of narrative into 150–300 tokens of useful structure.

Impact: Context compression typically reduces cost by 50–90% with higher accuracy because the model receives distilled, relevant, and structured information instead of noise.

PILLAR 2 — Model Right-Sizing

Use the smallest model that meets the quality threshold, not the largest model you can afford.

Most teams use the biggest available model not because they need it but because:

they never decomposed the task into smaller units,

they didn’t test performance thresholds,

they didn’t build a cascading inference system,

they’re afraid of failures and compensate with brute force.

Model right-sizing isn’t about “downgrading the model.” It’s about designing systems where bigger models are used sparingly and intentionally.

Here’s how world-class teams execute this:

1. Decompose the Task

You rarely need GPT-4o for the entire workflow.

Break tasks into substeps:

classification → cheap

extraction → cheap

transformation → cheap

summarization → mid-tier

reasoning → mid/high-tier

generative creativity → high-tier

Most tasks can be handled by Llama, Mixtral, or mid-tier proprietary models.

2. Cascaded Inference

Think of this as a “triage system” for model calls:

cheap model answers first

if confidence is low → escalate to mid-tier

if still low → escalate to premium model

This alone can reduce cost by 70–90%.

3. Model Specialization

Use:

one model for extraction

another for classification

another for reasoning

a dedicated model for code

a fast model for routing

Specialization improves accuracy and reduces cost simultaneously.

4. Use Open-Source Models When Possible

Open-source is not about replacing premium models; it’s about reducing cost for: deterministic tasks, formatting tasks, transformations, etc.

Open-source = control + predictability + cost stability.

PILLAR 3 — Retrieval Efficiency

Design RAG architectures that retrieve precisely what the model needs!

RAG is the biggest cost sink in the industry today.

Poorly engineered retrieval layers cause irrelevant context and higher costs.

Retrieval efficiency is not about reducing retrieval, it is about retrieving intelligently.

Here’s the playbook:

1. Optimal Chunking

Most teams slice documents arbitrarily, which results in:

Too many chunks… too few chunks… chunks that are too large… chunks that contain multiple topics.

Elite teams design chunking around: semantic boundaries, domain heuristics, user intent patterns, content structure.

Better chunks → fewer retrieved → fewer tokens → higher accuracy.

2. Hybrid Retrieval (Sparse + Dense + Metadata)

Combine:

keyword search (sparse),

embedding similarity (dense),

metadata filters (structure).

This reduces noise and ensures only high-signal chunks get through.

3. Re-ranking and Deduplication

Before sending context to the model:

score chunks by semantic relevance,

remove duplicates,

remove near-matches,

prune redundant snippets.

Most companies can cut retrieved context by 70% simply through re-ranking.

4. Local Embeddings and Caching

Instead of embedding documents for every user request: embed once, cache intelligently, store metadata, etc.

This alone saves tens of thousands in embedding API costs.

5. Intent-Based Retrieval

Use a routing model to determine which retrieval pipeline to activate.

Example:

legal queries → legal embeddings

pricing queries → pricing corpus

troubleshooting queries → technical knowledge base

This cuts retrieval load dramatically.

PILLAR 4 — Execution Efficiency

Cut inference cost by optimizing pipelines, reducing waste, and eliminating unnecessary calls.

Execution efficiency is where most cost savings become visible, because it improves latency and reduces cost at the same time.

There are several major categories:

1. Caching

Cache classification outputs, summaries, retrieval results, embeddings, and structured transformations.

Caching transforms frequently used outputs into one-time costs.

2. Batching

Batching reduces cost by:

minimizing repeated network calls,

parallelizing similar requests,

reducing memory overhead.

Great for document processing, multi-query agents, and async workflows.

3. Early Exit Conditions

Build logic like:

“Stop once answer confidence > X”

“Terminate after irrelevant loop detected”

“Abort after 3 steps”

This prevents runaway agent loops.

4. Eliminating Redundant Calls

Most AI systems unintentionally repeat logic twice.

Add guardrails and intermediary checks to avoid unnecessary duplicate calls.

6. Routing Architecture

Use small fast models for basic tasks.

Use big models only when needed.

SECTION 4 — The 10X Cost Framework

Cost optimization is not a one-time effort; it is an evolving discipline, and the companies who master it do so through a repeatable framework.

The “10X Cost Framework” is a method that aligns product teams, engineering teams, and business leaders around a single, unified principle:

Every AI system must justify its cost through measurable value, predictable behavior, and optimized execution… at every step of the pipeline.

The framework is called the 10X Cost Flywheel because once implemented, it becomes self-reinforcing:

better architecture reduces cost,

reduced cost enables more experimentation,

more experimentation improves product quality,

better quality improves adoption,

improved adoption provides richer data,

richer data enables cheaper inference,

and so the system becomes cheaper and better over time.

Below, we break down the seven core components of the flywheel.

1. Precision First: Define the Minimum Acceptable Quality Before Anything Else

Most AI teams start from the question:

“How do we make this as good as possible?”

World-class teams start from the opposite question:

“What is the minimum acceptable accuracy/quality needed to deliver the business outcome?”

This mindset unlocks dramatic cost savings because once the minimum threshold is clear, everything else becomes a constrained optimization problem.

For example:

You may discover that 80% accuracy is enough for routing, and you don’t need GPT-4-level reasoning.

You may learn that 50% shorter outputs still satisfy users.

You may realize that deterministic formatting saves more time downstream than improved reasoning.

Precision-first thinking prevents teams from over-solving problems that don’t require high-end models.

It shifts the question from: “How can we get GPT-4-like quality?” to “What is the cheapest model that achieves the required quality?”

That single shift saves millions.

2. Cascaded Inference: Use the Right Model at the Right Time

This is the most powerful cost-reduction technique in the industry. Instead of sending every query to the expensive model, queries flow through a cascading set of decision gates:

Cheap model handles the bulk of queries

Mid-tier model handles edge cases

Premium model handles complex reasoning only

Fallback model handles critical failures

This approach reduces cost by 70–90%, and more importantly, it increases reliability because each layer is tuned for a specific purpose.

In practical terms:

60–80% of queries can be answered by Llama/Mixtral mid-tier models.

A further 10–25% require medium reasoning (GPT-3.5 class models).

Only 2–10% require GPT-5-level thinking.

Cascaded inference is the difference between paying for a Ferrari to run local errands versus using a scooter for most tasks and saving the Ferrari for the highway.

3. Early Exits & Guardrails: Terminate Low-Value Computation Immediately

One of the hidden truths about AI systems is that models waste enormous compute on tasks that should never have reached them.

Effective systems include guardrails that:

validate the user query,

identify irrelevant requests,

stop runaway loops,

detect when the model has already reached a solution,

eliminate unnecessary retries.

Examples:

If the model already provides a confident answer, don’t run additional agent steps.

If retrieval returns low relevance, abort rather than hallucinate.

If the query matches a cached answer, return it without inference.

If inputs fail schema validation, reject early.

Early exits alone often reduce cost by 20–40%.

4. Agent Decomposition: Smaller Agents Are Cheaper, Faster, and More Accurate

Most people build AI agents like monoliths, one giant flow that does everything.

This leads to: ballooning context, agent loops calling expensive models repeatedly, runaway costs, and unpredictable latency.

Top-tier AI teams do the opposite:

They decompose agents into micro-agents, each responsible for a narrow function:

one agent handles classification,

another agent handles retrieval,

another agent handles fact extraction,

another agent performs reasoning,

another agent formats final output.

Each micro-agent uses the cheapest model suitable for its task.

This decomposition:

reduces token usage,

increases accuracy (specialization reduces errors),

improves debugging,

adds predictable cost ceilings,

eliminates agent loops where the model “thinks” aimlessly.

5. Structured Outputs: Force Predictability to Reduce Downstream Cost

Unstructured output is one of the least understood cost multipliers.

When models answer in free-form text:

downstream systems must parse the content,

errors force retries,

agent loops spend time correcting mistakes,

formatting unpredictability grows token usage,

failures cascade unpredictably.

By contrast, structured outputs (JSON, key-value pairs, XML-like schemas) enforce:

tighter reasoning pathways,

dramatically fewer hallucinations,

predictable downstream processing,

less need for retries or corrective steps.

Structured outputs reduce cost because they reduce variance… and variance is the enemy of cost efficiency.

Even generating two structured sentences instead of ten free-form paragraphs reduces output tokens by 80–90%.

6. Feedback Loop Optimization: Improve the System Iteratively to Reduce Future Spend

Here’s a counterintuitive truth: The biggest cost savings do not come from reducing today’s cost, they come from preventing tomorrow’s cost.

High-scale AI teams create feedback loops where the system continuously learns:

which queries cause the most retries,

which agent steps generate unnecessary calls,

which instructions inflate tokens excessively,

which retrieval chunks are consistently irrelevant,

which model escalations are avoidable,

which user flows create inefficiency.

They then fix the underlying issue: compress the prompt, prune the retrieval pipeline, adjust routing rules, add guardrails, etc.

Over time, this feedback loop compounds, and the system becomes cheaper to operate, more accurate, and faster to respond.

Every mature AI platform eventually becomes “self-optimizing” because the organization builds a culture of continuous performance tuning.

7. Decide Through Economics, Not Curiosity:

A New Operational Mindset for AI Teams

Most AI teams choose models or architectures based on “vibes.”

High-performing AI teams choose models and architectures based on a single lens:

What is the cheapest pathway to achieve the required business outcome?

This mindset forces clarity:

It becomes obvious when a GPT-4 call is unnecessary.

It becomes obvious when a retrieval chunk is oversized.

It becomes obvious when an agent step is redundant.

It becomes obvious when a fallback route is too expensive.

It becomes obvious when an output is longer than needed.

Economic thinking drives engineering discipline and engineering discipline drives cost efficiency.

Once implemented, the system reinforces itself:

Define minimum acceptable precision → choose the cheapest viable model.

Cascaded inference → 80% of traffic handled by cheap models.

Early exits → stop expensive tasks before they happen.

Agent decomposition → small steps + specialized tasks = lower tokens.

Structured outputs → fewer retries and lower variance.

Retrieval optimization → smaller context windows, higher accuracy.

Feedback loops → the system gets better, cheaper, and faster over time.

SECTION 5 — The Token Diet: Reducing Token Usage Without Reducing Quality

Constantly flowing through your system, carrying both value and overhead with every inference. And yet, tokens are the least understood and least measured cost vector in the entire AI industry.

Teams obsess over which model to use, over which agent architecture to deploy, over which embeddings library to pick… but they rarely examine the literal text that the model reads and writes — despite the fact that tokens are the only thing the model charges you for.

This means something both simple and profound:

Every additional word you send to a model is money leaving your account.

Every unnecessary paragraph you include is compounding cost.

Every verbose output you tolerate is a self-inflicted tax.

Yet most teams treat token usage like a natural byproduct of AI, not something to intentionally design.

The world-class teams — the ones who run tens of millions of AI calls per day — treat token management like a discipline. They sculpt prompts, compress context, optimize outputs, and design schemas that transmit the maximum amount of meaning in the minimum amount of text.

This is the principle behind The Token Diet: a systematic framework for reducing 50–90% of token usage without sacrificing clarity, performance, or correctness.

Let’s break down the strategies.

5.1 — Why Token Reduction Is the Highest Leverage Cost Lever

Tokens are not just a cost, they are a multiplier.

For every token you add to the system:

latency increases,

cost increases,

hallucination risk increases,

retries become more likely,

context windows fill faster,

orchestration complexity expands.

Meanwhile, every token you remove:

accelerates inference,

improves quality by reducing noise,

shrinks prompts to their semantic core,

enables smaller models,

reduces context overflow,

supports better user experience,

dramatically lowers cost.

A reduction of 1,000 tokens in a system processing 20 million monthly requests is often equivalent to hundreds of thousands of dollars saved per year.

5.2 — Six Classes of Token Waste (and How to Eliminate Them)

AI systems typically suffer from six categories of token waste. Each must be treated differently because each arises from a different part of the pipeline.

Let’s examine the six categories.

CATEGORY 1 — Prompt Bloat (System + Instruction Tokens)

Your system prompt is likely 3–10× larger than necessary.

Teams often start with a simple instruction, and over months, slowly accumulate: tone guidelines, safety instructions, and other related things.

Suddenly the prompt is no longer 200 tokens — it’s 2,000–4,000.

The model now spends a majority of its compute simply reading your instructions every time.

Solutions:

Convert narrative instructions into structured schemas (10× more efficient).

Create modular prompts using reusable “prompt blocks.”

Move static instructions out of the runtime prompt into configuration layers.

Use hierarchical prompting (global rules + task rules + context).

Replace verbose language with declarative constraints.

Example: Instead of: “Please ensure that your writing is clear, concise, professional, and avoids making assumptions unless explicitly stated…”

Use:

“tone”:

“concise, professional”,

“rule”: “no assumptions”.

Structured tokens compress 10–20 lines into a handful of key-value pairs.

CATEGORY 2 — Context Overload

The model is reading far more than it needs.

Most teams send long histories, full documents - you name it!

Solutions:

Use condensed session memory (150–200 tokens instead of 2,000).

Extract only relevant parts of retrieval chunks.

Use context summarization before sending content to the LLM.

Split user queries into structured components.

Use delta prompts (send what changed, not the whole conversation).

Introduce “memory abstraction layers” that compress context historically.

CATEGORY 3 — Output Bloat

LLMs over-explain, over-elaborate, and produce unnecessary verbosity.

Most models default to step-by-step reasoning, self-referential caveats, etc.

This wastes tokens and creates slow, costly, often redundant responses.

Solutions:

Force structured outputs (JSON, bullet forms, tables).

Remove chain-of-thought unless absolutely necessary.

Use output-length constraints (“max 2 sentences per field”).

Introduce output compression guards.

Use a smaller, faster model to compress the premium model’s output.

CATEGORY 4 — Example Inflation (Few-Shot Bloat)

Many teams include:

several examples for formatting,

demonstration prompts for reasoning,

edge-case examples,

narrative explanations.

This quickly becomes hundreds or thousands of tokens.

Solutions:

Switch from few-shot prompting to schema prompting.

Use zero-shot with structure (models now handle this extremely well).

Use synthetic training instead of including examples in the runtime prompt.

Move examples into retrieval — not system prompts.

CATEGORY 5 — Hidden Tokens (Pre + Post Processing)

There are tokens in your system that you don’t even realize you’re paying for like tool call scaffolding, agent reflection steps, evaluation prompts, etc.

These are invisible to most teams.

Solutions:

Audit tool calls (they often have hidden prompts).

Replace verbose agent reflections with structured reasoning fields.

Enforce a “token budget” for every agent step.

Minimize diagnostic verbosity in production.

CATEGORY 6 — Multi-Step Process Inflation

Agents call models multiple times per request. Each step multiplies token usage.

Solutions:

Use micro-agents (each step uses a minimal prompt).

Introduce caching between steps.

Combine compatible steps into single calls.

Use stateful memory to avoid repeating context at each step.

5.3 — Context Compression Techniques Used by Elite Teams

The best AI organizations reduce token usage so aggressively that the model sees only the 5–10% of information that matters.

Here are advanced techniques they use.

Technique 1 — Structural Prompting

Convert instructions into structured schemas:

{

“goal”: “...”,

“tone”: “...”,

“rules”: [”...”, “...”],

“format”: {”summary”: “”, “insights”: “”, “actions”: “”}

}

This compresses 300–400 tokens into 30–60.

Technique 2 — Hierarchical Context Layers

Separate context into:

global rules (rarely change),

project-level instructions,

conversation memory,

task-specific inputs.

Send only the minimum required layers to the LLM.

Technique 3 — Semantic Compression

Instead of sending entire chunks, use a lightweight model to compress them:

Input: 900 tokens

Output: 90 tokens

Accuracy: higher

Cost: dramatically lower

Latency: significantly faster

Technique 4 — Relevance Scoring

Only pass text where:

semantic score > threshold,

metadata matches user intent,

redundancy score < threshold.

This eliminates 70% of tokens in many systems.

Technique 5 — Delta Prompts

Instead of resending the entire conversation:

Only send what changed since the last turn.

This is especially useful in agents and chat interfaces.

Technique 6 — Memory Compression (Stateful Summaries)

Use short, evolving summaries:

{

“session_summary”: “...”,

“key_facts”: [”...”, “...”],

“pending_goals”: [”...”]

}

Replaces thousands of tokens with a few dozen.

5.4 — Output Compression: Do Not Let Models Ramble

Most outputs can be generated in 20% of the tokens, 10% of the time and with 2–3x the clarity…

…if you instruct the model correctly.

Output compression templates:

“Answer in 3 bullet points, max 8 words each.”

“Return JSON with fields: ‘result’, ‘confidence’, ‘next_step’.”

“Produce a 1-sentence summary + 3 action items.”

Contextual compression examples:

Remove disclaimers

No chain-of-thought

No introductions

No transitions

No filler phrases

This improves UX while slashing cost.

5.5 — The Token Diet in Action: A Real Example

A global enterprise used a 2,500-token system prompt for an internal agent.

After applying the Token Diet:

system prompt reduced to 340 tokens

average input reduced by 1,200 tokens

output reduced by 300 tokens

retries dropped due to reduced hallucination

latency improved by 55%

total monthly cost dropped by 78%

No model changes were made.No architecture changes were made.

Only token discipline.

5.6 — The Principle That Connects Everything in This Section

If there is one idea you take from the Token Diet, let it be this:

More text does not produce better answers.

Better structure produces better answers.

When you compress, prune, and structure, you create clarity, predictability, and efficiency.

And these qualities reduce cost and improve accuracy at the same time.

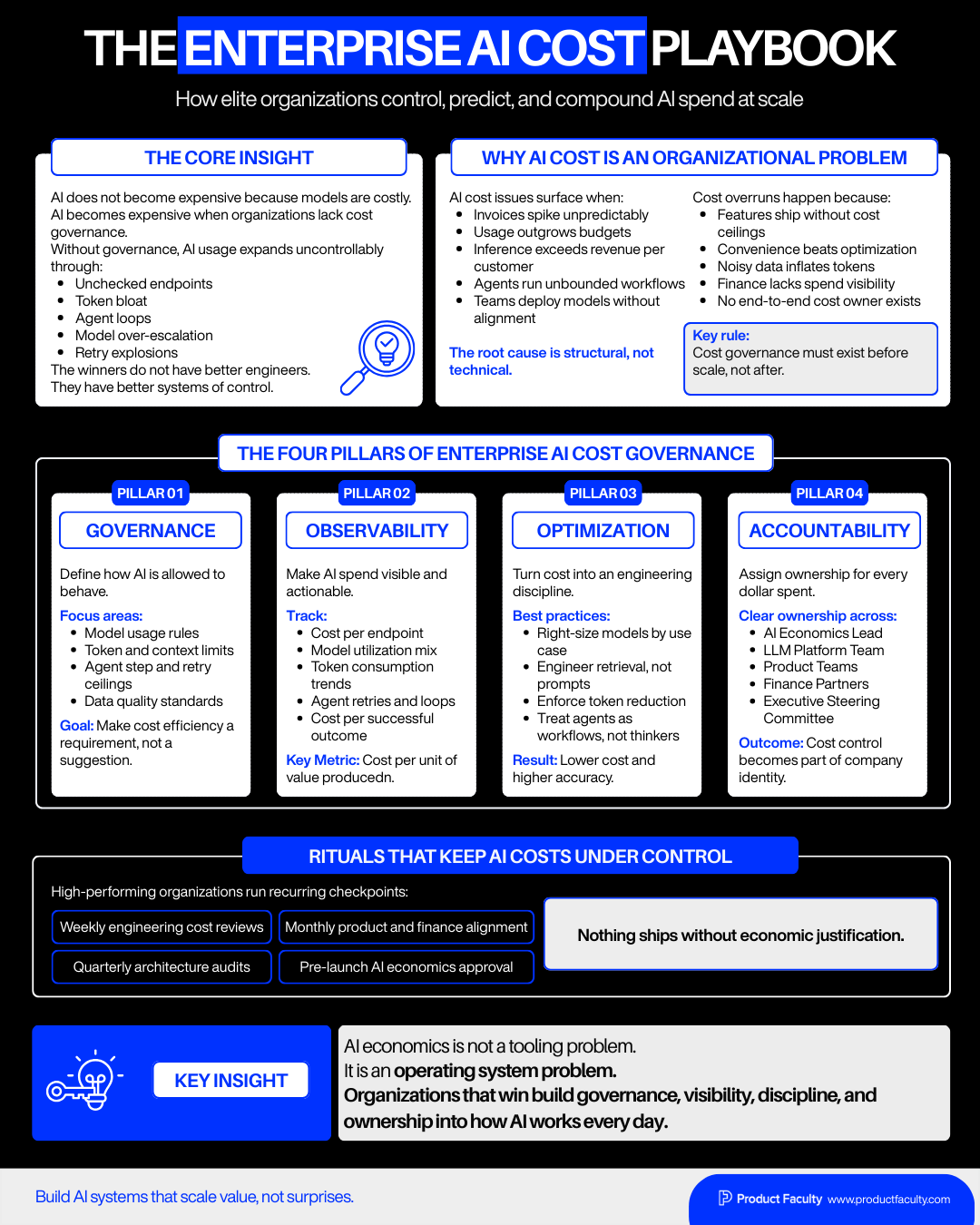

SECTION 6 — The Enterprise AI Cost Playbook

How world-class organizations engineer predictable, controlled, and efficient AI spending that compounds over time.

AI systems do not become expensive because models are inherently costly. They become expensive because organizations fail to implement a governance layer that aligns architecture, operations, finance, and product strategy around the economic realities of AI-driven computation.

In the absence of governance, AI grows like an unchecked organism — spawning new endpoints, expanding context windows, accumulating prompt bloat, creating agent loops, triggering runaway retries, and escalating to larger models without oversight.

Organizations that treat AI as “just another software feature” quickly discover that AI behaves nothing like traditional software. It is probabilistic, resource-intensive, compute-driven, and economically sensitive to decisions that seem innocuous at small scale but catastrophic at scale.

The companies that succeed do not win because they have the best engineers.

They win because they have the best systems of control.

This section explains how they build those systems.

6.1 — Why AI Cost Problems Are Organizational Problems, Not Technical Problems

Most executives first notice AI cost when:

invoices spike unpredictably,

usage scale outpaces budgeting,

inference costs exceed revenue per customer,

agents run unbounded workflows,

or different teams run models without alignment.

By the time cost becomes visible, the underlying issue is rarely technical.

It is structural.

AI cost overruns emerge because:

product teams ship features without cost ceilings…

engineering teams choose convenience over optimization…

data teams feed noisy inputs into models…

finance lacks the observability tools to forecast spend…

no one owns cost accountability end-to-end…

and there is no standardized review process for new model endpoints.

The single biggest illusion in enterprise AI is the belief that “engineers will optimize cost later.”

Later never arrives.

Cost governance must exist before scale, not after.

6.2 — The Four Pillars of Enterprise AI Cost Governance

Elite organizations operate around four pillars:

Governance (Policies & Guardrails) — Define how AI is used.

Observability (Dashboards & Monitoring) — Make costs visible and actionable.

Optimization (Architecture & Engineering) — Continuously reduce cost drivers.

Accountability (Rituals & Ownership) — Assign responsibility for every dollar spent.

Together, these pillars create an operating system that stabilizes cost regardless of how much usage grows.

Let’s explore them in detail.

PILLAR 1 — GOVERNANCE: Define How AI Is Allowed to Behave

Organizations need explicit, enforceable policies that constrain AI usage before it reaches production.

1. Model Governance Rules

These rules define:

which models are allowed for which use cases,

which tasks require premium models,

when teams must default to cheaper models,

maximum model escalation limits,

Example: “Any endpoint exceeding 2,500 tokens or using GPT-4 requires architectural review.”

2. Prompt and Context Governance

Rules around maximum system prompt length, maximum context window usage, formatting requirements, etc

Example: “No endpoint may retrieve more than 5 chunks without explicit approval.”

3. Agent Governance

Define step limits, tool-call limits, retry thresholds, micro-agent decomposition standards, fallback logic requirements.

4. Data Governance

AI cost is highly sensitive to input quality.

Policies must enforce data cleaning standards including noise reduction requirements, semantic chunking standards, and metadata tagging, etc.

Governance is about shaping incentives.

It ensures cost efficiency is a requirement, not a “nice-to-have.”

PILLAR 2 — OBSERVABILITY: Make AI Spend Transparent

Organizations cannot optimize what they cannot see.

AI cost needs the same observability discipline as cloud infrastructure.

The minimum dashboard suite includes:

A. Cost Per Endpoint

This shows which APIs or product features consume the most spend.

Track cost per request, cost per 1,000 tokens, and total monthly cost per endpoint.

B. Model Utilization Dashboard

Shows distribution across small models, mid-tier models, premium models, and specialized models.

Goal: reduce usage of expensive models as a percentage of total inference.

C. Token Consumption Dashboard

Track tokens for:

prompt tokens,

completion tokens,

retrieval input size,

system prompts over time,

growth in prompt complexity.

Token inflation is often a silent cost killer.

D. Agent Diagnostics

Monitoring number of steps per agent, number of retries, loop frequency, fallback escalation counts, failure-to-success ratios.

This identifies agent workflows that require immediate redesign.

E. Cost per Successful Outcome

The gold-standard KPI.

It answers: “How much does it cost us to produce one unit of value?”

This shifts the organization from vanity metrics (number of calls) to economic metrics (value per dollar spent).

PILLAR 3 — OPTIMIZATION: Create an Engineering Culture of Efficiency

This pillar turns cost from an afterthought into an engineering discipline.

Elite organizations institutionalize optimization in four ways:

Optimization Practice 1 — Right-Size Every Model

Teams must justify:

why they use a premium model,

why token windows are large,

why retrieval is broad,

why context is uncompressed.

This turns model choice into a strategic decision, not a default.

Optimization Practice 2 — Retrieval Engineering as a Core Competency

Retrieval is the hidden engine of AI cost.

Organizations invest in chunking strategy, metadata schemas, hybrid search.

This reduces cost while improving accuracy.

Optimization Practice 3 — Token Diet Enforcement

Every prompt, model, and endpoint is reviewed for token waste:

verbose system instructions,

redundant examples,

excessive context,

over-elaborate outputs,

uncontrolled chain-of-thought.

Optimization Practice 4 — Agent Engineering Discipline

The organization treats agents as controlled workflows, not autonomous thinkers.

Core standards include micro-agents, hard ceilings on reasoning, and constrained schema-based planning.

This prevents runaway inference loops.

PILLAR 4 — ACCOUNTABILITY: Assign Ownership for Every Dollar Spent

No optimization survives without accountability.

Companies must assign explicit ownership for AI economics.

Key roles include:

1. AI Economics Lead

Owns the cost model, dashboards, and financial governance.

2. LLM Platform Team

Responsible for routing logic, optimization frameworks, model hosting, etc.

3. Product Teams

Accountable for cost per user, cost per workflow, and cost per outcome.

4. Finance Partners

Forecast AI spend and compare against revenue projections.

5. Executive Steering Committee

Sets strategic boundaries:

which capabilities justify premium cost,

which require efficiency-first architecture.

This ensures AI cost governance is not optional — it is part of organizational identity.

6.3 — The Organizational Rituals That Keep AI Costs Under Control

Great AI organizations create rituals — recurring meetings and checkpoints — that enforce discipline.

1. Weekly Cost Review with Engineering

Review spikes, anomalies, costliest endpoints, agent loops, and token consumption trends.

2. Monthly Product–Finance Alignment

Evaluate cost-per-customer, unit economics by segment, AI gross margin, cost dilution over time, etc.

3. Quarterly Architecture Review

Revisit model selection, routing logic, retrieval stack, context pipelines, token budgets.

4. Pre-Launch AI Economics Check

Before launching any AI feature check: project cost-per-request, expected load, model choice maturity, fallback logic, guardrails.

Nothing ships without economic justification.

These rituals prevent surprises.

The New Discipline of AI Cost Architecture

When you zoom out across everything we’ve covered: the layered cost stack, the architectural chokepoints, the retrieval economics, the orchestration flaws, the agent cost explosions, the forecasting model, the routing strategies, the token diet, the guardrails… a single truth emerges:

AI cost is no longer an accident.

It is a discipline.

And the companies that treat it as a discipline will dominate the next decade.

Most teams believe they have a “model problem”.

But as you’ve seen across this deep dive, they really have:

a retrieval design problem,

a context bloat problem,

a routing immaturity problem,

an orchestration inefficiency problem,

a lack of reasoning constraints problem,

and an absence of economic thinking problem.

The model is simply a mirror reflecting the quality of your architecture.

The companies that scale AI profitably aren’t the ones with the cheapest model or the biggest GPU cluster.

They are the ones who understand the financial physics of AI systems.

Noise compounds. Tokens accumulate. Agents recurse.

And once you understand these dynamics, cost stops being something you hope to control and becomes something you engineer.

This is the turning point.

You stop thinking in terms of: “How much does GPT-5 cost?” and start thinking in terms of:

“How do we design a workflow that makes GPT unnecessary 85% of the time?”

You stop asking: “Why did our bill spike last month?” and start asking: “Which upstream decisions created downstream token inflation?”

You stop trying to cut cost by reducing quality and start learning how to reduce cost by improving architecture: the paradox that only expert teams understand.

Because here is the deeper truth:

Cost optimization is not the opposite of performance.

Cost optimization enables performance.

Cheap systems are fragile.

Efficient systems scale.

That’s why the best AI teams in the world converge on the same mindset:

They design systems, not features.

They optimize flows, not invoices.

They forecast behavior, not spend.

They architect for constraint, not experimentation.

And in doing so, they build AI products that are fast, precise, predictable, and affordable.

This entire deep dive was designed to give you the same lens.

Because the next generation of AI products will not be won by whoever has the biggest model budget… but by whoever has the best cost architecture.

The companies that internalize these principles will ship faster, scale cheaper, retain margin, out-innovate competitors, and build AI systems that survive beyond hype cycles.

The ones who ignore them will drown in their own inference bills.

This deep dive ends here, but your real leverage begins now.

Once you see AI systems through the lens of cost architecture, nothing about how you design, build, or scale AI will ever be the same.

And that’s the point.

Thanks for Reading The Product Faculty’s AI Newsletter.

What else topics you’d like us to write deep dives on?

Feel free to comment.

The cascaded inference piece is brillaint tbh. We ran into this exact problem where every query was hitting GPT-4 for tasks that could've been handled by way cheaper models. The breakdown of the six-layer cost stack really clarifies where the money actually goes, especialy the retrieval inefficiency part. In my experience, RAG systems are the most underestimated cost multiplier, like retrieving 10 chunks when 2 would do the job creates this silent burn that nobody notices until the bill comes. The idea that cheaper systems are fragile but efficient systems scale is kinda the whole point here.

This playbook highlights a truth many teams miss: AI cost isn’t just about model pricing — it’s about how the entire system is structured and how inefficiencies can compound unnoticed. Optimization isn’t a hardware problem or a “just use a smaller model” trick — it’s an architecture discipline that spans context, retrieval, agent loops, error retries, and token management, and only by understanding those interactions can you build systems that are both performant and economic at scale.