OpenAI’s Product Leader Shares 3-Layer Distribution Framework To Win Mind & Market Share in the AI World

Features get cloned. Models commoditize. But distribution compounds. This is your definitive guide to AI distribution: from GTM wedges to PLG loops to moat flywheels.

The Forgotten Battlefield: Why Distribution Is the Only Moat Left

Every product wave has its myth.

In the early days of SaaS, the myth was that “features win markets.” Build a product with enough capability, and adoption would follow.

For mobile, the myth was that “design wins”: beautiful apps rise above the noise.

In the AI era, the myth is already clear: many believe that “models win.”

Whoever has the best model will win the market.

That belief is not just wrong. It’s dangerous.

Because, as we’ve learned in our previous newsletter deep dives, models commoditize. Every 90 days, the next release from OpenAI, Anthropic, or Google wipes out the advantage of the one before it. The cost curve shifts, capabilities improve, and suddenly the moat you thought you had disappears overnight.

In AI, your features will be cloned, your models will be overtaken, and your “unique capability” will be commoditized faster than in any other wave of technology.

We spent several hours discussing this with our guest.

The only thing that endures, the only battlefield that can’t be taken away by an API update, is distribution.

But here’s where most PMs and product leaders trip up: they treat distribution as a marketing problem. Something you figure out after the product is built.

“Let’s launch on Product Hunt.”

“Let’s run some paid ads.”

“Let’s sign a few influencer deals.”

That mindset is fatal in AI. Distribution is not something you tack on. Distribution is the product now.

It is the set of design choices, wedges, loops, and moats that determine not just how users show up, but whether every new user compounds value or erodes your economics.

Get it wrong, and you risk burning money and becoming irrelevant.

Think about Perplexity.

On the surface, it’s “just another LLM-powered search.” But the brilliance wasn’t just in their retrieval-augmented generation. It was in how they positioned distribution: a wedge into the information workflow with transparent citations. That choice made it shareable, trustworthy, and viral.

Their distribution engine wasn’t ads. It was users themselves, citing Perplexity answers as sources in Slack threads, blog posts, and research decks. Distribution was baked into the product.

Or take Midjourney.

They could have launched as a standalone site like every other AI image generator. Instead, they built inside Discord.

That wasn’t a UX accident. It was a distribution wedge: every image created was public by default, every prompt was social, and every user became a node of viral growth.

Or consider Figma AI.

They didn’t hold a flashy AI launch day. They quietly tucked AI into the exact moments where designers already struggled: mockups, auto-layout, copy tweaks. The distribution wasn’t about reaching new users; it was about embedding deeper into the workflows of their existing user base.

That subtle distribution choice meant their AI didn’t need a campaign to spread; it was instantly useful inside a workflow millions already lived in.

Remember: distribution is the real battlefield. Not who has the flashiest demo. Not who fine-tuned the model best.

The winner is the one who designs distribution so well it compounds faster than commoditization can catch up.

We’ll unpack it all today with our guest, Miqdad Jaffer, Product Lead at OpenAI. This might be one of the best, if not the best, guest posts we’ve had in this newsletter.


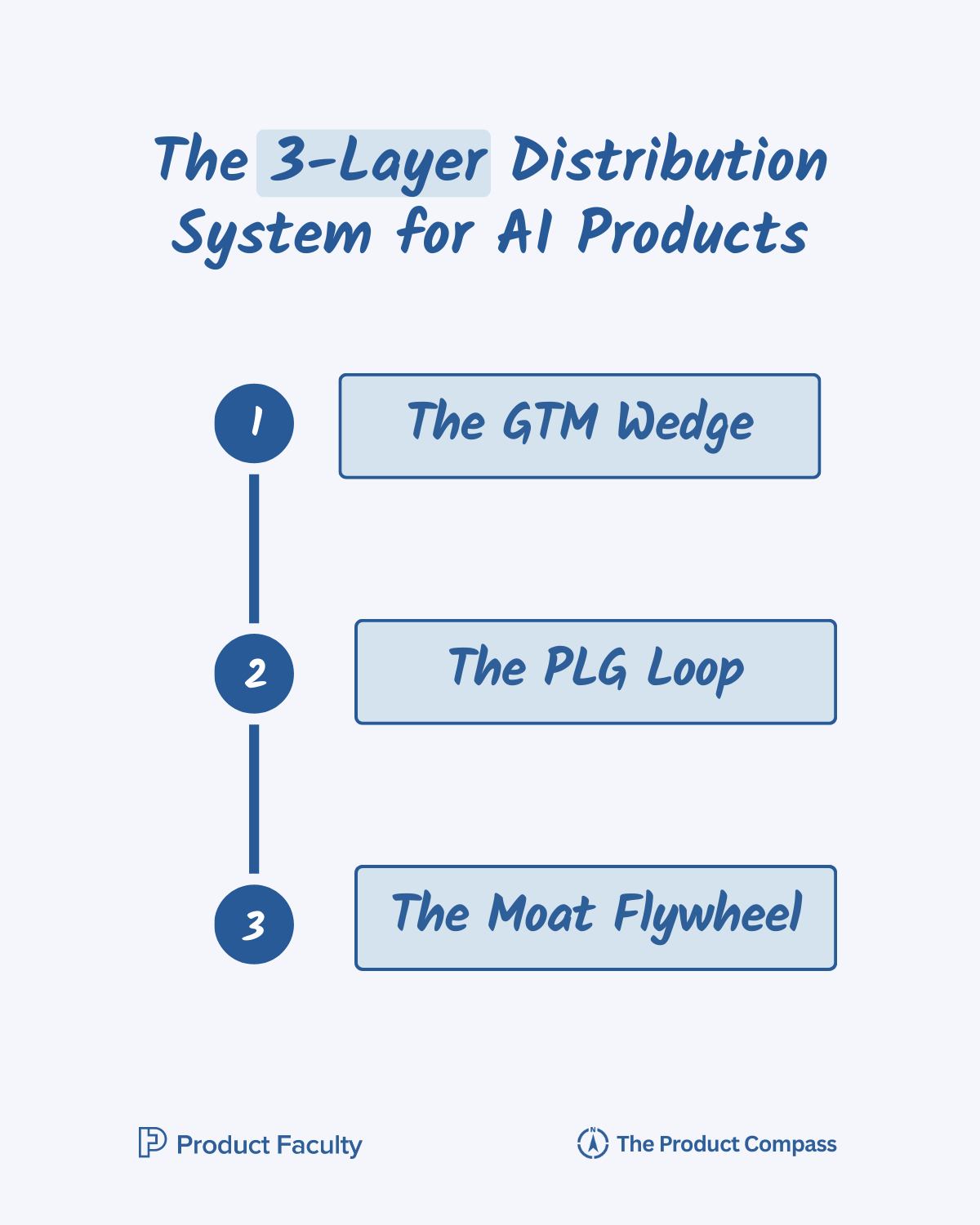

The 3-Layer Distribution System for AI Products

Distribution is not a single act. It’s a system with three layers:

Layer 1: The GTM Wedge: How you enter. The precise vector that gets you into the workflow or conversation without being crushed by giants or drowned in noise.

Layer 2: The PLG Loop: How you compound. The viral, collaborative, or data-driven feedback loops that ensure every new user doesn’t just show up, but makes the product stronger, cheaper, or more valuable for the next.

Layer 3: The Moat Flywheel: How you defend. The structural lock-ins (data, workflow, or trust) that ensure competitors can’t simply clone your wedge and ride your loops.

Most PMs get stuck on a single layer. Some obsess over the GTM wedge (“we just need a killer launch”). Others fixate on the PLG loop (“let’s engineer a viral hook”). A few jump straight to the moat (“we’ll build a data flywheel eventually”).

The truth: you need all three.

Without the wedge, no one notices you.

Without the loop, you bleed cash with every new user.

Without the moat, your users churn the moment a cheaper clone shows up.

The companies that define decades are the ones that deliberately design all three layers.

Next, we cover:

I. Layer 1: Why the GTM Wedge Matters More in AI Than in SaaS

II. Layer 2: The 7 PLG Loops for AI Products

III. Layer 3: The Three Defensible Moats in AI Distribution

IV. 6 Laws of AI Distribution Based on 6 Case Studies

V. The 7-Step Distribution Strategy Playbook for AI PMs

VI. 9 Advanced Distribution Tactics (What Billion-Dollar Founders Do Differently)

VII. Conclusion

Let’s dive in.

I. Layer 1: Why the GTM Wedge Matters More in AI Than in SaaS

In traditional SaaS, you could afford to launch broad. If you built a project management tool, you could pitch “better collaboration” and still carve out space, because marginal costs trended toward zero, and even lightweight differentiation like integrations or UI design gave you breathing room. AI doesn’t give you that luxury.

Here’s why:

Costs punish vague wedges. Every time a user queries your AI, you pay for it. If your wedge isn’t tightly defined, you’ll attract casual, low-value users who burn GPU minutes without compounding into retention, data, or referrals. You’ll literally bleed money by the click.

Commoditization collapses broad positioning. “AI writing” sounds exciting until OpenAI drops a better base model for free. Suddenly, your broad wedge dissolves overnight because your story wasn’t anchored in a defensible doorway.

Speed compresses entry windows. In SaaS, you had years to refine your wedge before a competitor caught up. In AI, that window is measured in months, sometimes weeks. The wedge must hit hard and immediately anchor you into a workflow or community before clones flood the market.

In other words: your wedge is not just your entry, it’s your survival mechanism.

Advanced Characteristics of a Strong Wedge

When you evaluate wedges for AI products, you need to look for five deeper traits beyond the basics:

Asymmetry of Pain vs Cost. A great wedge solves a pain point that feels disproportionately big to the user, but is disproportionately cheap for you to deliver. Meeting notes look like a small wedge, but the pain (hours wasted writing summaries) is massive, while the solution (record → transcribe → summarize) can be run cheaply with smaller models. That asymmetry is gold.

Proof on First Use. You don’t get 30 days of trial in AI. Users need to see value in 30 seconds. A wedge must deliver a “wow” immediately, not in the fourth week of onboarding. That’s why “AI notes” works but “AI project productivity” doesn’t. The latter is too abstract to validate in a single shot.

Obvious Storytelling Handle. A wedge needs a story so crisp it spreads itself. “AI legal contract reviewer” is sticky. “AI enterprise workflow optimizer” is vague. The sharper the language, the easier the wedge travels through word-of-mouth, Slack threads, and LinkedIn posts.

Expansion Optionality. The wedge must be narrow to land, but broad enough to expand later. Grammarly started with spelling corrections (narrow, painful, obvious) but expanded into tone adjustments, rewriting, and now generative writing. The wedge was tight, but the expansion surface was huge.

Resistance to Immediate Displacement. Ask yourself: if OpenAI launched this as a free button tomorrow, would we still have a reason to exist? If yes, you’ve got a wedge. If not, you’re just a demo.
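The first trait, asymmetry of pain vs cost, is easy to estimate before you build anything. Below is a minimal Python sketch. Every input (minutes saved, the user’s hourly value, tokens per use, the per-token price) is a hypothetical placeholder, not a measured figure for any real product or model.

```python
from dataclasses import dataclass


@dataclass
class WedgeEconomics:
    """Hypothetical inputs for one wedge; replace with your own estimates."""
    minutes_saved_per_use: float      # user time the AI removes per use
    user_hourly_value: float          # what an hour of the user's time is worth ($)
    tokens_per_use: int               # input + output tokens consumed per use
    price_per_million_tokens: float   # blended model price ($ per 1M tokens)

    def value_per_use(self) -> float:
        return self.minutes_saved_per_use * self.user_hourly_value / 60

    def cost_per_use(self) -> float:
        return self.tokens_per_use / 1_000_000 * self.price_per_million_tokens

    def asymmetry(self) -> float:
        """Perceived value delivered per dollar of inference cost."""
        return self.value_per_use() / self.cost_per_use()


# Illustrative "meeting notes" wedge (all numbers assumed): 20 minutes saved
# for a $60/hour user, ~15k tokens per meeting on a small model at $0.50 per 1M tokens.
notes = WedgeEconomics(20, 60.0, 15_000, 0.50)
print(f"value ${notes.value_per_use():.2f} vs cost ${notes.cost_per_use():.4f}")
print(f"asymmetry: {notes.asymmetry():,.0f}x")
```

A ratio in the thousands leaves room to absorb model price changes and still charge a small fraction of the value delivered; a ratio in the single digits leaves almost none.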

Wedge Examples

To make this more concrete, let me highlight wedges that don’t usually get discussed but perfectly illustrate the principle:

Perplexity’s “Citations by Default”. Their wedge wasn’t “better search.” It was trustworthy answers with citations. That tiny design decision created a defensible wedge into researchers, analysts, and power users who needed credibility. Google or OpenAI could have done it, but they didn’t. That was the doorway.

Copy.ai’s “Marketing-Specific Templates”. Instead of going broad like Jasper, Copy.ai leaned into one wedge: marketers who wanted templates for blog posts, ads, and emails. Not just “AI writing” but “AI writing for marketing use cases.” That wedge created an entry into teams where ROI was measurable.

Runway’s “Editor-First Tools”. Runway didn’t wedge with “AI video.” They targeted professional editors and filmmakers with tools like background removal and timeline automation. Those problems were painful, frequent, and measurable. They weren’t trying to win the entire creative market at launch, just the pros who had real budgets and clear workflows.

None of these wedges was about showing “AI magic.” Each was about picking a very precise doorway into a very specific user base and anchoring there before competitors could react.

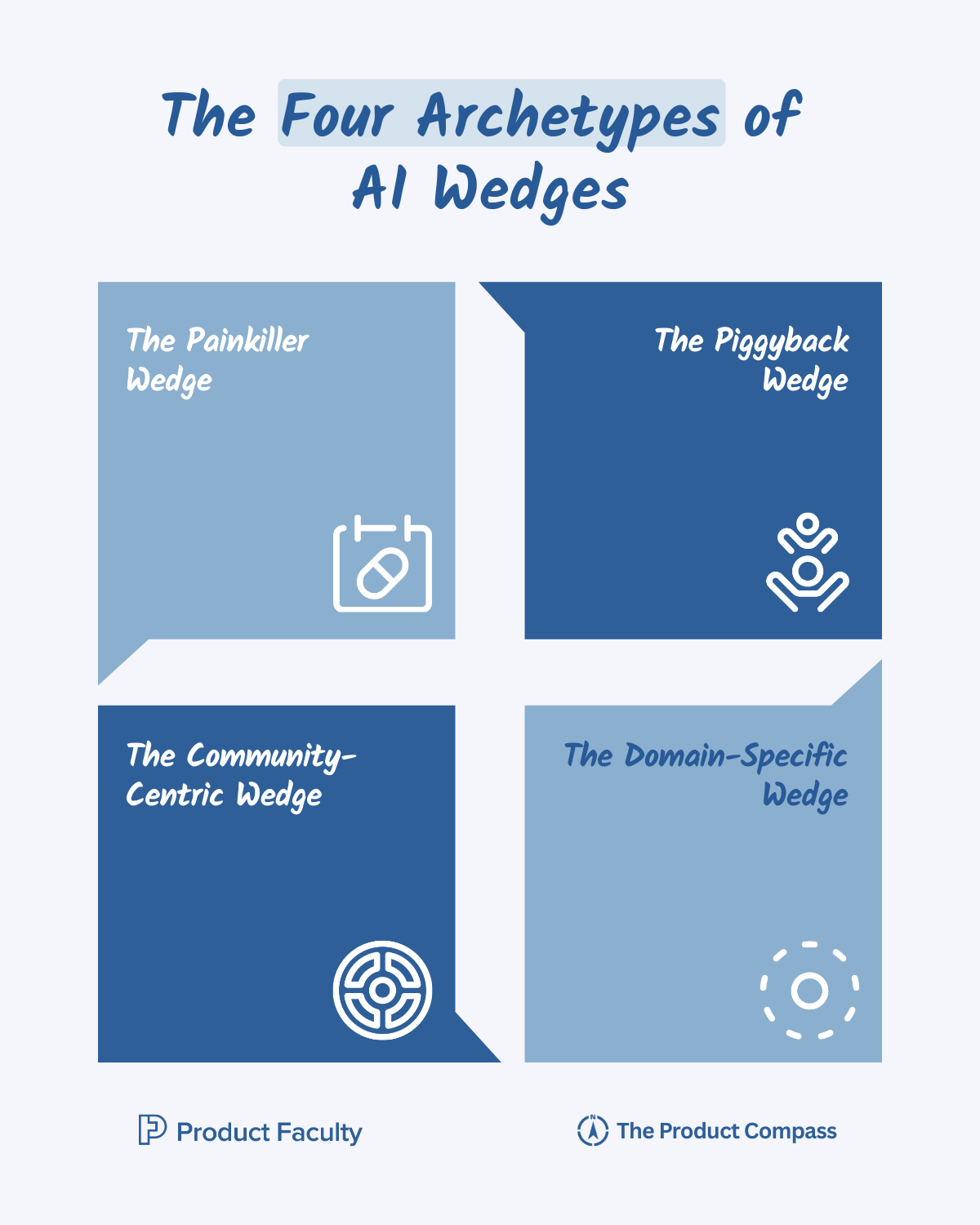

The Four Archetypes of AI Wedges

Over years of watching AI products launch, scale, and collapse, I’ve noticed a recurring pattern: the ones that survive almost always enter through one of four archetypal wedges. These aren’t “categories” in the abstract sense; they’re practical distribution doorways that make or break early adoption.

If your product didn’t take off, nine times out of ten it’s because you picked a wedge that was too broad, too expensive, or too easily cloned. Get the wedge wrong, and no amount of downstream GTM or PLG magic can save you. Get it right, and you buy yourself the most precious commodity in AI: time to expand before the giants notice.

Archetype 1: The Painkiller Wedge

The most obvious, and often the most effective, is the painkiller wedge. This is when you attack one repetitive, universally hated task and remove it so completely that users feel immediate, almost physical relief.

Granola is the classic example: it didn’t brand itself as “AI productivity,” which would have been too vague and too crowded. Instead, it picked one specific job every knowledge worker loathes: writing meeting notes. The pain was high-frequency (every day), high-friction (always distracting from the real meeting), and high-visibility (everyone knows when notes are missing or late). By solving this with near-instant accuracy, Granola didn’t need a big marketing budget; its users evangelized it because the relief was obvious on first use.

The power of a painkiller wedge is that you rarely need to educate users. They already understand the pain and immediately recognize the value. But the danger is commoditization: if the wedge is too shallow, competitors can copy it overnight. That means you must either move quickly to stack moats (data, distribution, trust) or design the wedge in a way that naturally expands into adjacent workflows before clones catch up.

Archetype 2: The Workflow Piggyback Wedge

The second archetype is the workflow piggyback wedge. Instead of convincing users to adopt something new, you ride the momentum of tools and habits they already have. This works because users don’t want to “learn AI.” They want to keep doing their job, but faster and easier.

Figma AI nailed this by quietly slipping into the design flow with auto-layouts, copy tweaks, and mockup generation. Designers didn’t have to leave the canvas, didn’t have to open another tab, and didn’t even need to change their mental model. AI was simply there, augmenting familiar steps. Adoption felt frictionless because it piggybacked on muscle memory.

The brilliance of workflow piggybacking is that it feels invisible. The risk, however, is platform dependency. If you’re a plugin or extension, you’re always one API change away from irrelevance, or worse, from the host platform simply building your wedge natively. The way to mitigate this is to use piggybacking as a short-term wedge but expand into standalone surfaces as soon as you prove traction.

Archetype 3: The Domain-Specific Wedge

The third, and in my opinion one of the most underrated, is the domain-specific wedge. This is where you go deep into a vertical where general-purpose AI is unreliable, and you build trust by delivering precision where others fail.

Harvey is the poster child here. Instead of building yet another “AI legal assistant,” they attacked one high-value, high-risk job: contract review in mergers and acquisitions. It’s repetitive, it’s expensive, and it’s riddled with nuance that general LLMs consistently miss. By going deep into that narrow but lucrative vertical, Harvey built credibility with top firms and gained access to proprietary workflows and datasets that strengthened their moat.

The reason this wedge works is simple: in most industries, generic AI is not good enough. It hallucinates, misses context, or fails compliance. Domain-specific wedges win because they encode expertise that general-purpose models cannot replicate. But the trade-off is scale: the narrower the wedge, the harder it is to grow beyond the initial vertical unless you’ve planned your expansion path from day one.

Archetype 4: The Community-Centric Wedge

Finally, there’s the community-centric wedge, which is less about solving an individual pain point than about turning the product into a cultural engine. This wedge works when outputs are inherently visible, remixable, and social, so every new user attracts the next wave of users.

Midjourney exemplifies this. By forcing prompts and outputs into public Discord channels, they transformed individual usage into collective spectacle. Every generated image wasn’t just an output, it was a marketing asset that lived in the community. The network effects compounded: users learned by watching others, experimented with new styles, and competed for recognition. Midjourney didn’t spend millions on ads; the community was both the product and the distribution channel.

The upside of community wedges is explosive virality. The downside is fragility: without strong curation, communities collapse into spam, and without clear incentives, creators drift away. To make this wedge sustainable, you must treat the community as a first-class product surface, not an afterthought.

Why Most Wedges Fail

If wedges are so powerful, why do most AI products still flop?

Because PMs confuse “feature novelty” with “distribution entry.”

Here are the three killers I’ve seen firsthand:

Going Broad Instead of Sharp. “We do AI design” sounds big, but it’s actually weak. Compare that to “We fix auto-layout pain in Figma.” The sharper wedge wins because it cuts deeper and faster.

Chasing Demos, Not Distribution. A demo can go viral on Twitter, but it doesn’t embed in workflows. Jasper chased viral demos (“AI can write anything!”) while Copy.ai anchored itself in marketing templates. Guess which one lasted longer.

Ignoring Cost in the Wedge. A wedge that costs $5 per query might impress in a demo, but it’s not sustainable at 10,000 users. The wedge must be cheap enough to validate without bankrupting you. This is why I tell teams: model cost-per-wedge before you launch, not after.
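Modeling cost-per-wedge can start as a back-of-envelope function like the one below. It is a sketch under stated assumptions: queries per user, the paying share, and the price are hypothetical, and a real model would also account for caching, model routing, and retention.

```python
def monthly_wedge_economics(users, queries_per_user, cost_per_query,
                            paying_share, price_per_month):
    """Rough monthly economics of a wedge. All inputs are assumptions."""
    total_queries = users * queries_per_user
    inference_cost = total_queries * cost_per_query
    revenue = users * paying_share * price_per_month
    return {
        "inference_cost": inference_cost,
        "revenue": revenue,
        "margin": revenue - inference_cost,
        # Highest per-query cost at which revenue still covers inference.
        "break_even_cost_per_query": revenue / total_queries,
    }


# The $5-per-query demo at 10,000 users, assuming 20 queries per user per
# month and 5% of users paying $20/month (hypothetical numbers).
demo = monthly_wedge_economics(10_000, 20, 5.00, 0.05, 20.0)
# The same wedge, hypothetically re-scoped to a cheaper model at $0.02 per query.
lean = monthly_wedge_economics(10_000, 20, 0.02, 0.05, 20.0)

for name, result in [("demo", demo), ("lean", lean)]:
    print(name, {k: round(v, 2) for k, v in result.items()})
```

Under these assumptions the demo burns roughly $1M a month against about $10K in revenue, and break-even sits near 5 cents per query. That threshold, not the demo, is the real design constraint.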

The Wedge Finder Canvas

Workflow Mapping → What’s the full workflow the user runs today? Write every step.

Friction Heatmap → Where are the high-frequency, high-pain moments? Circle them.

Obviousness Test → Which of those could deliver value in <30 seconds? Mark those.

Defensibility Stress-Test → Which circled points survive the “OpenAI test”: if OpenAI built your exact feature and made it free, would you still be able to win? Keep only those.

Narrative Handle → Write the 3–5 word story you’d put on a slide. If it takes a sentence, it’s too vague.

Do this exercise, and you’ll walk out with one wedge that is actually distribution-worthy.
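If you want to run the canvas over a long list of candidate steps, its filters translate directly into code. This Python sketch is hypothetical: the frequency and pain thresholds are placeholders you would set from your own interviews, and the OpenAI test stays a human judgment, recorded here as a boolean.

```python
from dataclasses import dataclass


@dataclass
class WorkflowStep:
    name: str
    uses_per_week: float         # frequency of the step
    pain: int                    # 1 (mild) to 5 (hated), from user interviews
    seconds_to_value: float      # how fast the AI output proves itself
    survives_openai_test: bool   # your honest judgment, not a computed fact
    handle: str                  # the slide-ready story


def find_wedges(steps, min_uses=3, min_pain=4, max_seconds=30):
    """Apply the canvas filters in order; `steps` is your workflow map."""
    # Friction heatmap: keep high-frequency, high-pain steps.
    hot = [s for s in steps if s.uses_per_week >= min_uses and s.pain >= min_pain]
    # Obviousness test: value in under 30 seconds.
    obvious = [s for s in hot if s.seconds_to_value < max_seconds]
    # Defensibility stress-test.
    defensible = [s for s in obvious if s.survives_openai_test]
    # Narrative handle: 3-5 words, or it's too vague.
    return [s for s in defensible if 3 <= len(s.handle.split()) <= 5]


steps = [
    WorkflowStep("Schedule meeting", 10, 2, 5, False, "AI meeting scheduler"),
    WorkflowStep("Write meeting notes", 8, 5, 20, True, "Meeting notes, written for you"),
    WorkflowStep("Quarterly planning", 0.1, 5, 3600, True,
                 "AI enterprise workflow optimizer for planning teams"),
    WorkflowStep("Summarize email thread", 15, 4, 10, False, "AI email summaries"),
]
print([s.name for s in find_wedges(steps)])
```

Ordering the filters this way mirrors the canvas: cheap, objective cuts first, and the judgment-heavy defensibility call only on the few steps that remain.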

So far, we’ve covered:

I. Layer 1: Why the GTM Wedge Matters More in AI Than in SaaS

Next, you’ll learn:

II. Layer 2: The 7 PLG Loops for AI Products

III. Layer 3: The Three Defensible Moats in AI Distribution

IV. 6 Laws of AI Distribution Based on 6 Case Studies

V. The 7-Step Distribution Strategy Playbook for AI PMs

VI. 9 Advanced Distribution Tactics

VII. Conclusion: The Distribution Mindset Shift

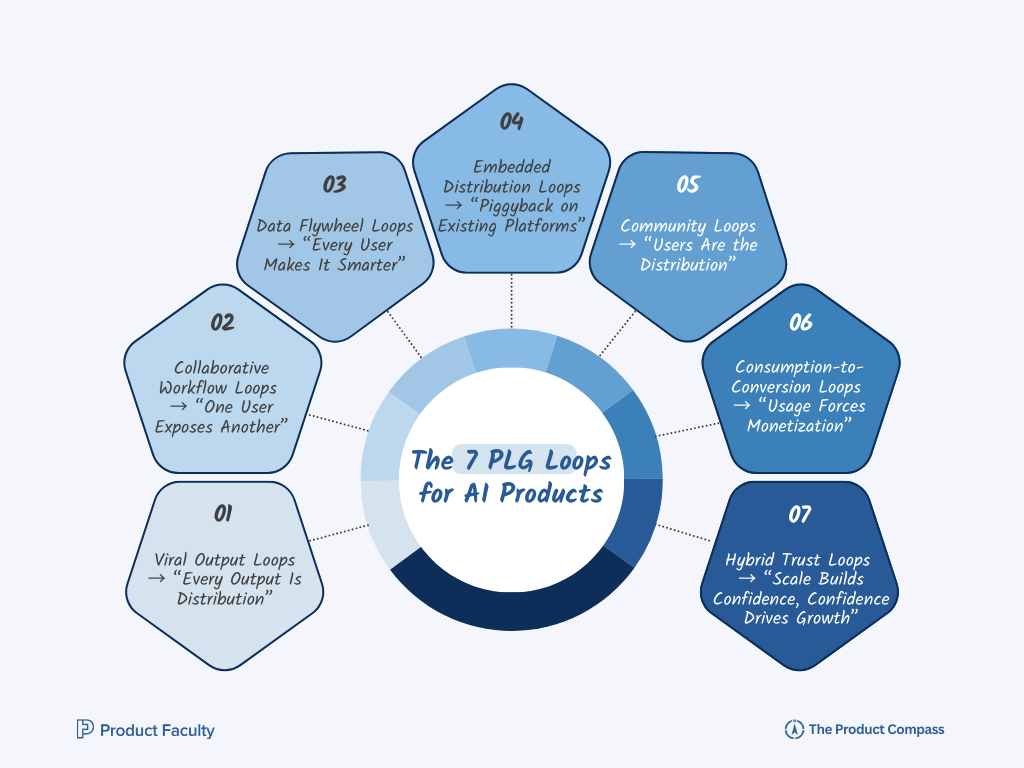

II. Layer 2: The 7 PLG Loops for AI Products

In SaaS, PLG usually meant free trials, referral bonuses, or the classic “invite your team” mechanic. But AI changes the game, because every query, every output, and every workflow can generate more distribution if it’s designed as a loop.

Here’s the shift: in AI, PLG isn’t just about virality, it’s about compounding adoption.

A single user generating value should create visibility, data, or incentives that pull the next user in, without marketing spend. Done right, your product doesn’t just retain; it recruits, educates, and sells itself.

Over the last few years, working with AI founders and product leaders, I’ve found seven distinct loops that consistently drive this kind of compounding growth. Each one is different, but all share the same DNA: usage → creates value → attracts new usage → strengthens the moat.

Let’s break them down with examples and a playbook you can follow:

1. Viral Output Loops → “Every Output Is Distribution”

In SaaS, virality was about users sending invites. In AI, virality lives in the outputs. Because outputs are artifacts (images, summaries, videos, answers), they naturally travel across ecosystems. If you design outputs to carry your brand, every piece of usage becomes a distribution node.

Examples:

Midjourney: Early on, every image generated in Discord carried not just beauty but metadata: prompts, channels, credits. Users didn’t just share art; they shared the Midjourney experience. Every screenshot was free marketing.

Perplexity: By surfacing citations alongside answers, they created a natural backlink loop. Bloggers, students, and analysts quoting Perplexity answers inevitably linked back to the engine.

Runway: AI-generated video edits became viral clips on TikTok, each one a showcase of Runway’s creative tools, not just the user’s skills.

Design Playbook

Bake in Branding → watermarks, citations, or subtle signature styles. The goal isn’t intrusive ads but recognizable provenance.

Default to Shareability → outputs should be one-click shareable across Slack, Twitter, LinkedIn. Don’t bury sharing inside an “Export” menu.

Make Remixing Easy → let recipients tweak the artifact (edit prompt, adjust output). Each remix is a new distribution node.

Turn Outputs Into Funnels → every artifact links back to the origin (“Made with X”).
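“Turn Outputs Into Funnels” mostly comes down to making every exported artifact carry a link back with attribution. Here is a minimal Python sketch using only the standard library; the domain, the product name, and the parameter names (`ref` and the `utm_*` tags) are hypothetical conventions, not any specific product’s scheme.

```python
from urllib.parse import urlencode


def share_link(artifact_id: str, sharer_id: str, channel: str,
               base_url: str = "https://example.com/a") -> str:
    """Public link to an AI output that records who shared it and where."""
    query = urlencode({
        "ref": sharer_id,          # credits the sharer for any signups
        "utm_source": channel,     # e.g. "slack", "linkedin"
        "utm_medium": "share",
        "utm_campaign": "made_with",
    })
    return f"{base_url}/{artifact_id}?{query}"


def attribution_footer(product: str, link: str) -> str:
    """The 'Made with X' line appended to every exported artifact."""
    return f"Made with {product}. Remix it: {link}"


link = share_link("img_123", "u_42", "slack")
print(attribution_footer("ExampleAI", link))
```

The page behind that link could open the artifact with its prompt pre-filled, so a recipient’s first click is a remix rather than a signup wall.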

Hidden Pitfalls

Poor-quality outputs kill the loop. One screenshot of a bad hallucination shared online does more damage to trust than ten good outputs can repair.

If outputs look “generic GPT,” they won’t carry brand recall. The loop dies if users can’t distinguish you from others.

2. Collaborative Workflow Loops → “One User Exposes Another”

The strongest loops happen where work is shared. In AI, embedding into collaboration means one person’s use reveals AI’s value to others automatically. Adoption spreads laterally inside teams without marketing.

Great examples:

Figma AI: When one designer uses auto-layout or AI copy tweaks, teammates inside the same file see it happen. Curiosity → trial → adoption.

Notion AI: A doc summarized by AI doesn’t just help the author, it helps every collaborator who opens the doc. They experience AI benefits without ever clicking a button.

GrammarlyGO: In a marketing team, if one person’s emails become noticeably clearer with AI, managers and peers pressure others to adopt it for consistency.

Design Playbook

Target Shared Surfaces → docs, boards, repos, tickets. Places where visibility is inherent.

Expose AI Actions Transparently → “This section was AI-summarized.” Curiosity is your invite mechanic.

Seed the First Use Case → AI adoption spreads faster when framed as “helping the team” (better notes, faster drafts) than as “helping you personally.”

Make Switching Costs Team-Wide → once some adopt, workflows get locked in (e.g., formatting, styles, templates).

Advanced Moves

Cross-Team Loops → design so adoption in one team forces adoption in adjacent ones (e.g., AI reporting in finance spreads to ops → exec dashboards).

Usage Nudges → highlight in-product when a teammate used AI successfully (“X summarized this doc with AI in 2 mins”). Subtle social proof drives curiosity.
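A usage nudge like “X summarized this doc with AI in 2 mins” needs only an event log and a check for who has already adopted. The sketch below is hypothetical: the event shape and the rule of skipping people who already use AI are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional


@dataclass
class AIEvent:
    user: str
    action: str          # e.g. "summarized"
    doc_id: str
    duration: timedelta  # how long the AI-assisted task took


def nudge_for(viewer: str, doc_id: str, events: list,
              ai_users: set) -> Optional[str]:
    """Social-proof nudge for a teammate who hasn't tried the AI feature yet."""
    if viewer in ai_users:
        return None  # already adopted: don't nag
    for e in events:
        if e.doc_id == doc_id and e.user != viewer:
            minutes = max(1, round(e.duration.total_seconds() / 60))
            return f"{e.user} {e.action} this doc with AI in {minutes} min"
    return None  # nobody has used AI on this doc yet


events = [AIEvent("Priya", "summarized", "doc-7", timedelta(minutes=2))]
print(nudge_for("Sam", "doc-7", events, ai_users={"Priya"}))
```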

3. Data Flywheel Loops → “Every User Makes It Smarter”

The holy grail: each user’s action strengthens the product itself. Unlike viral outputs or workflow exposure, this loop compounds defensibility. The product doesn’t just spread, it gets harder to copy.

Case Studies

Duolingo: Every student mistake became structured learning data, refining the tutor model. Over time, this moat became insurmountable.

GitHub Copilot: Every accepted or rejected code suggestion created a feedback signal. Millions of such micro-signals tuned Copilot to developer norms.

Harvey: Every lawyer’s contract edit created supervised training data for future M&A reviews.

Design Playbook

Instrument Feedback Loops → capture signals like accept/reject, edit/no-edit, completion rates. These are gold.

Make Feedback Invisible → don’t ask users to label data; design workflows where natural behavior is the feedback.

Reward Corrections → acknowledge when user fixes improve the model. Makes them feel like co-builders.

Aggregate Into Moats → structured data → better models → better UX → more users → more data.
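“Make Feedback Invisible” can be as simple as comparing what the AI suggested with what the user actually kept. This Python sketch uses the standard library’s `difflib.SequenceMatcher`; the 0.6 similarity cutoff between “edited” and “rejected” is an arbitrary assumption you would tune on your own data.

```python
import difflib
import time


def classify_outcome(suggestion: str, final_text: str,
                     edit_threshold: float = 0.6) -> str:
    """Infer feedback from natural behavior instead of asking users to label."""
    if final_text == suggestion:
        return "accepted"
    similarity = difflib.SequenceMatcher(None, suggestion, final_text).ratio()
    return "edited" if similarity >= edit_threshold else "rejected"


def feedback_event(user_id: str, suggestion: str, final_text: str) -> dict:
    """One structured signal, ready to log alongside the interaction."""
    return {
        "ts": time.time(),
        "user": user_id,
        "suggestion": suggestion,
        "final": final_text,
        "outcome": classify_outcome(suggestion, final_text),
    }


def acceptance_rate(events: list) -> float:
    """Share of suggestions kept verbatim; worth tracking per release."""
    if not events:
        return 0.0
    return sum(e["outcome"] == "accepted" for e in events) / len(events)


log = [
    feedback_event("dev1", "return a + b", "return a + b"),
    feedback_event("dev2", "return a + b", "return a + b + c"),
    feedback_event("dev3", "return a + b", "raise NotImplementedError"),
]
print([e["outcome"] for e in log], acceptance_rate(log))
```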

Hidden Pitfalls

Privacy & Consent → mishandle sensitive data, and you break trust permanently.

Data Pollution → without quality filters, bad signals amplify model errors at scale.

Advanced Moves

Cross-User Generalization → Copilot’s breakthrough wasn’t one user’s edits; it was learning patterns across millions.

Vertical Moats → Harvey leveraged a high-value vertical (law) where corrections are expensive to replicate.

4. Embedded Distribution Loops → “Piggyback on Existing Platforms”

Instead of building new habits, insert yourself into old ones. This loop compounds by riding platforms that already own distribution.

Case Studies

Notion AI: Leveraged Notion’s 20M+ users by flipping a switch → distribution overnight.

SlackGPT: Injected AI into daily communication. No new app, no new habit, just augmentation.

Adobe Firefly: Embedding into Creative Cloud gave Firefly a privileged surface: millions of creatives encountered it by default.

Design Playbook

Find Daily Surfaces → where do users already spend 3+ hours/day? That’s your wedge for embedding.

Make AI Invisible → augment workflows subtly, not disruptively.

Bundle With Existing Plans → upsell existing customers rather than chasing new ones.

Leverage Distribution Power → partner with platforms where embedding = instant scale.

5. Community Loops → “Users Are the Distribution”

The product itself becomes a collective stage. Adoption compounds because users don’t just use it individually; they create visibility for others.

Design Playbook

Create Public Surfaces → a gallery, a leaderboard, a hub where outputs are discoverable.

Reward Contributions → badges, exposure, remixability.

Make Learning Social → design so users learn faster together than alone.

Curate Quality → community loops die in spam unless you gate or moderate.

6. Consumption-to-Conversion Loops → “Usage Forces Monetization”

Adoption compels upgrade because free usage is capped. Unlike SaaS free trials, this loop works because AI’s costs scale directly with usage.

Case Studies

ChatGPT: GPT-3.5 for free; GPT-4 gated. Users who tasted quality naturally upgraded.

Midjourney: Free GPU minutes hooked users, but heavy creators hit walls fast → conversion.

Canva AI: Free credits drove experimentation, but serious designers upgraded once limits hit.

Design Playbook

Give Enough to Hook → the first taste must prove value.

Align Paywall With Value → place the paywall right after the “aha!” moment, never before it.

Tier Thoughtfully → light users stay free; power users self-select into paid tiers.

Communicate Costs Honestly → frame limits as resource fairness, not greed.

Hidden Pitfalls

Over-Stingy Free Tier → users never experience value, churn early.

Over-Generous Free Tier → viral adoption bleeds cash.
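The two pitfalls pull in opposite directions, which makes the free-tier cap a tuning problem you can rough out on paper. This Python sketch makes two loud assumptions: that conversion only happens after a user reaches the “aha!” moment, and (pessimistically) that every free user burns the full cap. The sample of queries-to-aha and all prices are hypothetical.

```python
def free_tier_outcome(cap, queries_to_aha, free_users, cost_per_query,
                      conversion_after_aha, price):
    """Monthly (cost, revenue) for a free tier with a hard query cap."""
    # Assumption: only users whose "aha!" arrives within the cap can convert.
    share_reaching_aha = sum(q <= cap for q in queries_to_aha) / len(queries_to_aha)
    # Assumption (worst case for cost): every free user uses the whole cap.
    cost = free_users * cap * cost_per_query
    revenue = free_users * share_reaching_aha * conversion_after_aha * price
    return cost, revenue


# Hypothetical sample: queries each of ten test users needed before the "aha!".
sample = [1, 3, 4, 5, 6, 8, 9, 12, 15, 30]

for cap in (2, 10, 100):
    cost, revenue = free_tier_outcome(cap, sample, free_users=10_000,
                                      cost_per_query=0.01,
                                      conversion_after_aha=0.05, price=20.0)
    print(f"cap={cap:>3}: cost ${cost:,.0f}, revenue ${revenue:,.0f}, "
          f"net ${revenue - cost:,.0f}")
```

With these made-up inputs, a cap of 2 is over-stingy (most users never reach value), a cap of 100 is over-generous (cost eats all the revenue), and the middle cap comes out ahead.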

Advanced Moves

Upgrade Nudges → personalize paywalls: “You’ve saved 12 hours this week. Unlock unlimited AI help for $20.”

Credits as Currency → turn usage caps into a gamified resource (such as tokens or GPU minutes).
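Credits-as-currency and personalized upgrade nudges fit in one small ledger. The Python sketch below is hypothetical: the credit prices, the minutes-saved estimate per task, and the $20 price simply mirror the upgrade-nudge example in the text; they are not any product’s real pricing.

```python
from dataclasses import dataclass, field


@dataclass
class CreditAccount:
    """Hypothetical credit ledger for a capped free tier."""
    balance: int
    minutes_saved_this_week: float = 0.0
    history: list = field(default_factory=list)

    def spend(self, credits: int, minutes_saved: float) -> bool:
        """Deduct credits for one AI task; False means show the paywall."""
        if credits > self.balance:
            return False
        self.balance -= credits
        self.minutes_saved_this_week += minutes_saved
        self.history.append((credits, minutes_saved))
        return True

    def upgrade_nudge(self, price: int = 20) -> str:
        """Personalized paywall copy built from the user's own value."""
        hours = self.minutes_saved_this_week / 60
        return (f"You've saved {hours:.0f} hours this week. "
                f"Unlock unlimited AI help for ${price}.")


acct = CreditAccount(balance=50)
for _ in range(24):  # 24 tasks at 2 credits each, ~30 minutes saved per task
    acct.spend(credits=2, minutes_saved=30)
if not acct.spend(credits=5, minutes_saved=30):  # only 2 credits left
    print(acct.upgrade_nudge())
```

Building the nudge from the user’s own history keeps the paywall anchored to value they have already felt, which is the point of aligning the paywall with the “aha!” moment.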

7. Hybrid Trust Loops → “Scale Builds Confidence, Confidence Drives Growth”

Unlike SaaS, where scale = reliability, in AI scale = suspicion (hallucinations, bias). But if you design trust loops, more adoption → stronger trust → more adoption.

Case Studies

Perplexity: Citing sources didn’t just build trust; it made outputs inherently defensible.

Anthropic: Positioned as “safety-first” → enterprises amplified adoption because every new client improved Anthropic’s reputation.

Grammarly: Accuracy improved with scale, and trust snowballed as “it just works.”

Design Playbook

Instrument Reliability → publish metrics on accuracy, latency, uptime.

Surface Transparency → citations, confidence scores, model disclaimers.

Reward Safe Use → highlight when users choose safe/transparent outputs.

Narrate Trust Publicly → make safety part of your market story.
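“Instrument Reliability” starts with computing the numbers before you publish them. Here is a minimal Python sketch over a batch of graded evaluation records. The record fields are assumptions, and the request success rate stands in as a rough proxy for uptime rather than a true availability measure.

```python
import statistics
from dataclasses import dataclass


@dataclass
class EvalRecord:
    correct: bool        # graded against a reference answer
    latency_ms: float
    succeeded: bool      # request completed without an error


def reliability_report(records: list) -> dict:
    """Accuracy, p95 latency, and success rate for a public trust page."""
    served = [r for r in records if r.succeeded]
    if len(served) < 2:
        raise ValueError("need at least two successful requests")
    accuracy = sum(r.correct for r in served) / len(served)
    # n=20 gives 19 cut points; the last is the 95th percentile.
    p95 = statistics.quantiles([r.latency_ms for r in served], n=20,
                               method="inclusive")[-1]
    return {
        "accuracy": round(accuracy, 3),
        "p95_latency_ms": round(p95, 1),
        "success_rate": round(len(served) / len(records), 3),  # rough uptime proxy
    }


records = [
    EvalRecord(True, 120.0, True),
    EvalRecord(True, 180.0, True),
    EvalRecord(False, 950.0, True),
    EvalRecord(False, 0.0, False),
]
print(reliability_report(records))
```

The `inclusive` method keeps the percentile inside the observed range, which matters for the small samples an early-stage product actually has.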

Hidden Pitfalls

One Big Failure = Collapse → CNET’s scandal over AI-generated finance articles destroyed its credibility in weeks.

Overpromising → if you frame AI as flawless, any error kills you.

Advanced Moves

Trust-as-a-Moat → enterprises don’t buy “best model”; they buy “most trustworthy.”

Compound via Scale → the bigger your customer base, the stronger your trust positioning (“10M users rely on this safely”).

III. Layer 3: The Three Defensible Moats in AI Distribution

Over the years, I’ve seen dozens of supposed “moats” pitched: UX polish, brand, partnerships, even “better prompts.” Most don’t hold.

When you strip away the noise, there are really only three moats that compound distribution in AI:

Moat 1: Data Moat Playbook

In AI, models are rented, but data is owned. Everyone can call GPT-5 tomorrow, but no one else can train on the unique traces, interactions, and signals generated by your users. A data moat makes every query, click, or correction an investment in your defensibility.

How to build it:

Instrument every interaction from day one. Don’t wait until you have thousands of users. From your first beta, log every prompt, correction, acceptance, rejection, or outcome. These aren’t “analytics,” they’re the raw material of your moat. Example: GitHub Copilot doesn’t just count completions; it measures when developers accept vs. edit suggestions.

Design for structured signals, not noise. Raw outputs aren’t useful. You need structured data pipelines: labeled feedback, user corrections, error states. Build scaffolding that converts stochastic outputs into clean, reusable training signals. Example: Grammarly forces outputs into structured suggestions (tone, clarity, correctness), which creates usable labels at scale.

Create feedback loops that improve product quality. Close the loop so that new data isn’t just stored; it actively improves the product. This makes users feel the benefit of their own interactions, which increases willingness to contribute more signals. Example: Replit’s Ghostwriter improves code suggestions with community corrections, creating a virtuous cycle.

Prioritize data you can own. Don’t try to collect everything. Focus on data competitors cannot get: proprietary workflows, domain-specific corrections, contextual traces. Public web data is worthless for defensibility.
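To make “structured signals” and “data you can own” concrete, here is a hypothetical Python sketch that turns raw interaction logs into labeled training examples. The field names, the consent flag, and the per-user cap (a crude guard against one account polluting the set) are all assumptions; a real pipeline would add deduplication and quality review.

```python
from dataclasses import dataclass


@dataclass
class RawEvent:
    user: str
    prompt: str
    output: str        # what the AI produced
    final: str         # what the user actually kept (their correction)
    outcome: str       # "accepted" | "edited" | "rejected"
    source: str        # "product" (your workflow) or "public_web"
    consented: bool    # user or org allowed use for training


def to_training_examples(events, max_per_user=100):
    """Filter raw logs down to owned, consented, labeled examples."""
    examples, per_user = [], {}
    for e in events:
        if not e.consented or e.source != "product":
            continue  # unusable, or data competitors can get too
        if e.outcome == "rejected" or not e.final.strip():
            continue  # this sketch keeps only positive targets
        if per_user.get(e.user, 0) >= max_per_user:
            continue  # cap any single user's influence on the set
        per_user[e.user] = per_user.get(e.user, 0) + 1
        # The user's final text, not the raw output, becomes the target.
        examples.append({"prompt": e.prompt, "target": e.final, "label": e.outcome})
    return examples


logs = [
    RawEvent("a", "Summarize", "draft", "draft", "accepted", "product", True),
    RawEvent("b", "Summarize", "draft", "better draft", "edited", "product", True),
    RawEvent("c", "Summarize", "draft", "", "rejected", "product", True),
    RawEvent("d", "Summarize", "draft", "draft", "accepted", "public_web", True),
    RawEvent("e", "Summarize", "draft", "draft", "accepted", "product", False),
]
print(to_training_examples(logs))
```

Rejected outputs are dropped here for simplicity; they could instead be paired with the accepted alternative as preference data.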

Moat 2: Workflow Moat → The Expansion Ladder

If you become the default operating system for a job to be done, you don’t just own adoption, you own retention. Users stop thinking of you as a tool and start thinking of you as the place where work happens. AI becomes invisible, and leaving becomes unthinkable.

How to build it:

Map the full workflow, not just the feature. Don’t stop at the AI novelty. Understand the end-to-end process your user is trying to accomplish. Where does the workflow begin? Where does it end? Anchor yourself in that flow. Example: Slack didn’t wedge into “chat.” It became the system of record for team communication, the place where work starts and ends.

Insert AI into the highest-friction points. Pick the step in the workflow where the pain is most acute and frequent. Make AI invisible there. If you can remove 30% of the pain from one step, you can expand to others later.

Integrate natively with existing tools. Meet users where they already live. Build integrations so that switching between tools feels seamless, but slowly shift gravity toward your product as the hub. Example: Notion AI didn’t ask users to “try a new AI notes app.” It simply appeared inside the doc where they were already working.

Expand sideways once anchored. Once you own one high-friction step, expand into adjacent steps. This is how you turn a wedge into an OS. Example: Figma AI started with auto-layout tweaks, then expanded into text, mockups, and prototyping.

Here’s your checklist:

Are you solving a step users do daily, not monthly?

Do you reduce friction without changing habits?

Can you expand laterally into adjacent steps?

Moat 3: Trust Moat Playbook

In AI, hallucinations, bias, and privacy risks erode adoption faster than poor UX. Trust is not a “soft” moat; it’s often the deciding factor for enterprise buyers and the stickiest retention driver for consumers. If people don’t trust your AI, they won’t depend on it. If they do trust it, they’ll forgive imperfections and embed you into mission-critical workflows.

How to build it:

Design guardrails into the product, not as patches. Don’t wait until after launch to think about safety. Bake in transparency (citations, confidence scores, guardrails) from the first version. Example: Perplexity won trust not because answers were perfect, but because citations made the limitations visible.

Communicate uncertainty, don’t hide it. Users trust honesty more than false confidence. Expose confidence levels, flag limitations, and surface alternative answers when appropriate.

Align with compliance and governance early. Enterprises care less about features and more about risk. Build audit logs, data controls, and privacy guarantees into your core infra, not as optional add-ons. Example: Anthropic’s “Constitutional AI” positioning made trust their brand moat, winning enterprise customers despite smaller scale.

Turn trust into social proof. Every successful deployment should become a story: case studies, testimonials, certifications. Trust doesn’t just retain, it attracts. Example: Harvey used credibility with a few elite law firms to onboard many more.

But watch out for two traps: overpromising accuracy, and treating enterprise compliance as something for “later.” By the time you get to it, competitors who prioritized it will already be embedded.
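“Communicate uncertainty, don’t hide it” is ultimately a rendering decision. This Python sketch assumes you already have a calibrated confidence score and a list of source URLs for each answer (producing either is out of scope); the 0.5 and 0.8 thresholds are arbitrary placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Answer:
    text: str
    confidence: float                 # 0-1, from your own calibration method
    citations: list = field(default_factory=list)
    alternatives: list = field(default_factory=list)


def render(answer: Answer, low: float = 0.5, high: float = 0.8) -> str:
    """Show sources, confidence, and alternatives instead of false certainty."""
    lines = [answer.text]
    if answer.citations:
        lines += [f"[{i}] {url}" for i, url in enumerate(answer.citations, 1)]
    else:
        lines.append("No sources found: verify before relying on this answer.")
    if answer.confidence < low:
        lines.append("Low confidence.")
        lines += [f"Alternative: {alt}" for alt in answer.alternatives]
    elif answer.confidence < high:
        lines.append("Medium confidence: double-check key details.")
    return "\n".join(lines)


print(render(Answer("The notice period is 30 days.", 0.42,
                    citations=["https://example.com/contract#s4"],
                    alternatives=["The notice period may be 60 days for renewals."])))
```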

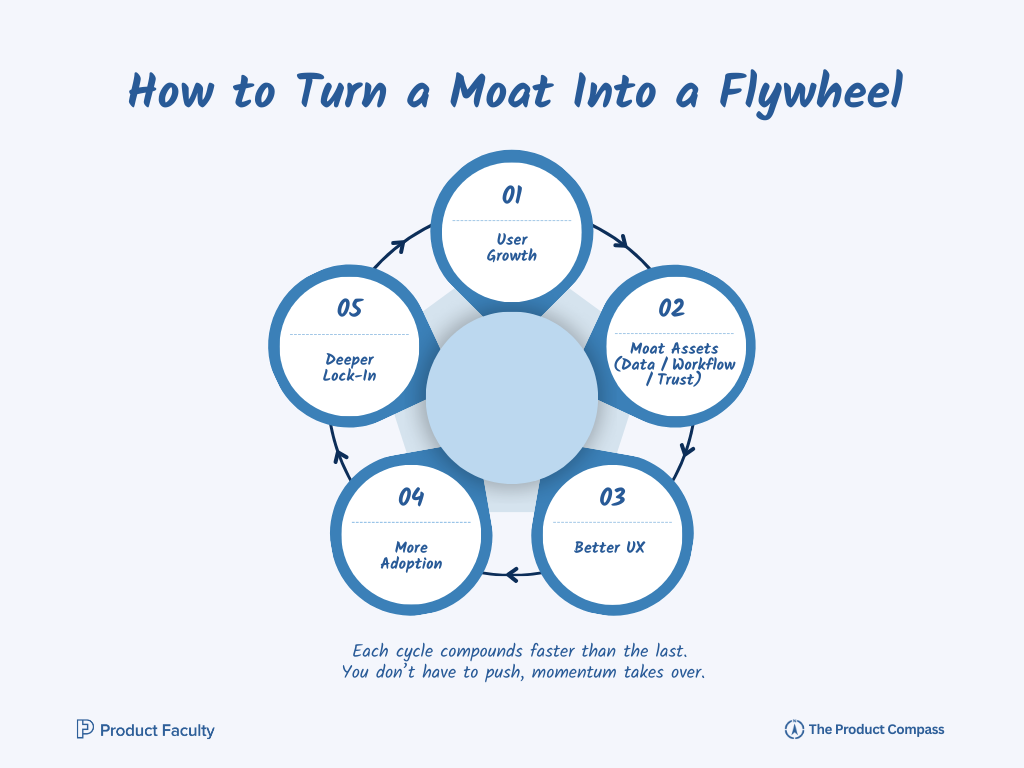

Moat Flywheel Playbook → The Compounding Loop

A moat by itself protects you. A moat turned into a flywheel grows you. The key is designing feedback loops where every new user strengthens the moat, which then makes the product better, which then attracts more users.

The Moat Equation: User Growth → Moat Assets (Data / Workflow / Trust) → Better UX → More Adoption → Deeper Lock-In

More users = more feedback signals (data moat).

More usage = deeper reliance on your platform (workflow moat).

More deployments = stronger proof of reliability (trust moat).

All three together = distribution that compounds.

In simple steps:

Start: User growth is the ignition point; without new users, the loop never spins. Early adoption gives you the raw fuel.

Generate: Each user interaction should create moat assets, whether structured data, deeper workflow reliance, or trust signals. The quality of this generation step defines the loop’s strength.

Improve: Feed those assets back into the system so UX visibly improves. Users must see the product get smarter, faster, or safer over time.

Retain: Better experiences drive retention, which strengthens your position as the default choice. Retention is what converts growth into compounding value.

Attract: Retained users attract new ones through sharing, referrals, or visible proof of value. This is where growth becomes self-reinforcing.

Spin: Over time, each cycle compounds faster than the last. A true flywheel is one you don’t have to push; momentum takes over.

The test of a flywheel: if growth stops tomorrow, does your moat still get stronger? If yes, you’ve built a compounding system.
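The flywheel test can be expressed as a toy simulation. This is an illustrative model, not a forecast: every parameter (assets created per user, how fast assets go stale, how much a mature moat lifts retention, the referral rate) is a hypothetical placeholder. The check cuts outside acquisition to zero and asks whether moat assets still rise every cycle.

```python
from dataclasses import dataclass


@dataclass
class Flywheel:
    """Toy Start → Generate → Improve → Retain → Attract loop. All defaults are hypothetical."""
    assets_per_user: float = 1.0    # Generate: moat assets (data, workflows, trust signals) per user per cycle
    asset_decay: float = 0.05       # share of moat assets that go stale each cycle
    base_retention: float = 0.70    # retention with no moat at all
    max_lift: float = 0.25          # extra retention a fully mature moat can add
    half_saturation: float = 500.0  # assets needed to reach half of max_lift
    referral_rate: float = 0.10     # Attract: new users per retained user per cycle

    def step(self, users: float, assets: float, acquired: float) -> tuple[float, float]:
        # Generate: active users add assets while some existing assets decay.
        assets = assets * (1 - self.asset_decay) + self.assets_per_user * users
        # Improve: more assets -> better UX -> higher retention, with diminishing returns.
        retention = self.base_retention + self.max_lift * assets / (assets + self.half_saturation)
        # Retain, then Attract: retained users refer new ones; `acquired` is outside growth.
        retained = users * retention
        users = retained + self.referral_rate * retained + acquired
        return users, assets


def moat_grows_without_acquisition(fw: Flywheel, users: float, assets: float, cycles: int = 12) -> bool:
    """The flywheel test: if outside growth stops, does the moat still get stronger every cycle?"""
    for _ in range(cycles):
        users, new_assets = fw.step(users, assets, acquired=0.0)
        if new_assets <= assets:
            return False
        assets = new_assets
    return True
```

With the defaults, starting from 1,000 users and 2,000 units of assets, the moat keeps strengthening after acquisition stops. A loop whose users generate few assets that go stale quickly (`Flywheel(assets_per_user=0.01, asset_decay=0.3)`) fails the same test from the same starting point.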

IV. 6 Laws of AI Distribution Based on 6 Case Studies

Case Study 1: Perplexity → Retrieval as a Distribution Wedge

Most people look at Perplexity and see a product innovation: “they added retrieval to LLMs.” That’s the wrong frame. Retrieval wasn’t just about improving answers; it was a distribution bet. By surfacing citations, sources, and verifiable snippets, Perplexity made its outputs indexable and shareable on the open web. Instead of being trapped inside a closed chat interface like most AI tools, its content became crawlable, searchable, and linkable.

This meant two things. First, they piggybacked on SEO: Google search traffic could point into Perplexity results because answers had URLs, references, and persistent structure. Second, the trust they built wasn’t just UX; it became a viral distribution mechanism. When a user shares a Perplexity answer, the citations make it credible to others. Every shared answer is a mini-ad that reinforces the brand as “the AI you can trust.”

Law 1: Technical scaffolding choices can be distribution wedges. Choosing retrieval wasn’t just accuracy engineering; it was a deliberate move to turn outputs into distribution channels. Most PMs would frame this as an ML trade-off. The deeper view is that architecture shapes discoverability.

Case Study 2: Runway → Betting on Professionals, Not Consumers

At first glance, video generation seems like a mass-consumer play. The obvious strategy would be “be the TikTok of AI video”: go broad, chase virality, and hope casual creators drive adoption. Runway went in the opposite direction. They doubled down on professional creators: editors, filmmakers, and designers with high-stakes production needs.

That choice narrowed their audience but massively improved their distribution quality. Professionals don’t just consume tools; they institutionalize them. If a Hollywood studio or agency adopts Runway, the product doesn’t spread one user at a time; it gets embedded into entire production workflows, contracts, and budgets. One studio win can be worth 10,000 hobbyists.

Runway’s distribution wasn’t about “growth hacking” users. It was about concentrating on a segment where adoption creates structural lock-in: training programs, industry standards, and word-of-mouth inside professional networks. By targeting pros, they didn’t just build credibility; they made distribution compound without paid marketing spend.

Law 2: Sometimes shrinking your market expands your defensibility. Consumer growth is noisy and shallow. Professional growth is slower but far harder to dislodge.

Case Study 3: GitHub Copilot → Piggybacking on IDEs as Distribution

If you describe Copilot as “AI code generation,” you’re missing the distribution genius. The real move wasn’t just shipping an AI assistant; it was embedding it directly inside the IDEs (VS Code, JetBrains, etc.) where developers already live 8–10 hours a day.

Instead of asking developers to open a separate app, learn a new workflow, or adopt another SaaS dashboard, GitHub piggybacked on the one environment devs cannot avoid. The wedge wasn’t code generation; it was location. The IDE became the distribution channel.

This is why adoption spread so quickly: not because AI code gen was novel (many startups launched similar tools), but because Copilot rode the rails of existing developer distribution. It collapsed onboarding friction to zero.

Law 3: The most powerful distribution channel is the one where your user already spends their entire day. Don’t make them come to you; go to them.

Case Study 4: Anthropic → Trust as a Distribution Channel

Anthropic didn’t outcompete on raw scale, model size, or speed. Their contrarian bet was to make “safety” not just an internal philosophy but their entire distribution wedge. Positioning themselves as the “safety-first” AI company reframed them from being “another model provider” to being the only credible choice for enterprises in regulated industries.

Trust became the vector that opened doors: procurement approvals, legal sign-offs, and enterprise deals that would never clear with vendors who didn’t foreground safety.

What looked like a slower, cautious approach was in fact a distribution strategy. Instead of competing on features or models, Anthropic built its go-to-market on risk-mitigation. In industries where adoption is blocked not by lack of demand but by fear, this turned “trust” into the growth engine.

Law 4: Your positioning can itself be a distribution channel. In markets with regulatory or reputational barriers, trust accelerates adoption faster than features ever could.

Case Study 5: Clay → Relationship Graph as Distribution

Clay didn’t launch as “another AI CRM.” Instead, they reframed their wedge as relationship intelligence. By syncing data across email, calendars, LinkedIn, and more, they built a constantly updating graph of your relationships. The genius wasn’t the AI summaries; it was that Clay turned passive contacts into an active, living asset.

This design became its distribution engine. Every time a user enriched their graph, they pulled in more connections, more context, and more reasons to stay. In B2B, where introductions and referrals drive growth, Clay’s users effectively became its distribution muscle. Every time they shared a contact or insight, the product advertised itself. They’re also nailing their influencer program.

Law 5: Distribution can come from data exhaust. If every new interaction a user makes creates a shareable, viral asset, your product grows without formal GTM spend.

Case Study 6: Cluely → Community-Driven AI Workflows

Cluely, built by Roy, is the best case study of weaponizing virality as distribution in AI. Unlike Clay, which leaned into professional credibility, Cluely went the other direction: rage marketing.

Instead of polished launches, they engineered controversy. Cluely’s distribution was native to TikTok, Instagram, and Twitter: tens of thousands of micro-accounts pumping out viral clips of the tool in action. Many of these weren’t ads but “cheat content”: students, workers, and creators showing how Cluely let them bypass effort. The more people criticized it as “cheating,” the more awareness spread. Now they’re expanding into more user workflows and marketing angles.

Law 6: Virality doesn’t always come from delight. Sometimes distribution grows faster when it taps into rage, controversy, and cultural flashpoints. Cluely didn’t avoid being called a “cheating app.” It scaled because of it.

In a nutshell…

Distribution in AI is not “launch campaigns + PLG loops.” It’s the architecture, segment choice, integration depth, positioning narrative, community engineering, and cultural triggers that turn a feature into a movement.

A PM or product leader should run their product through all six laws before launch:

If your outputs aren’t shareable → you miss compounding growth.

If you’re chasing consumers without pros → you miss institutional scale.

If you’re outside workflows → you fight adoption friction.

If your positioning doesn’t remove fear → you stall at procurement.

If you don’t engineer GTM → you rely on luck.

If you avoid controversy → you miss cultural virality.

Winners won’t master all six at once, but they’ll deliberately choose 1–2 to dominate and design the rest to reinforce them.

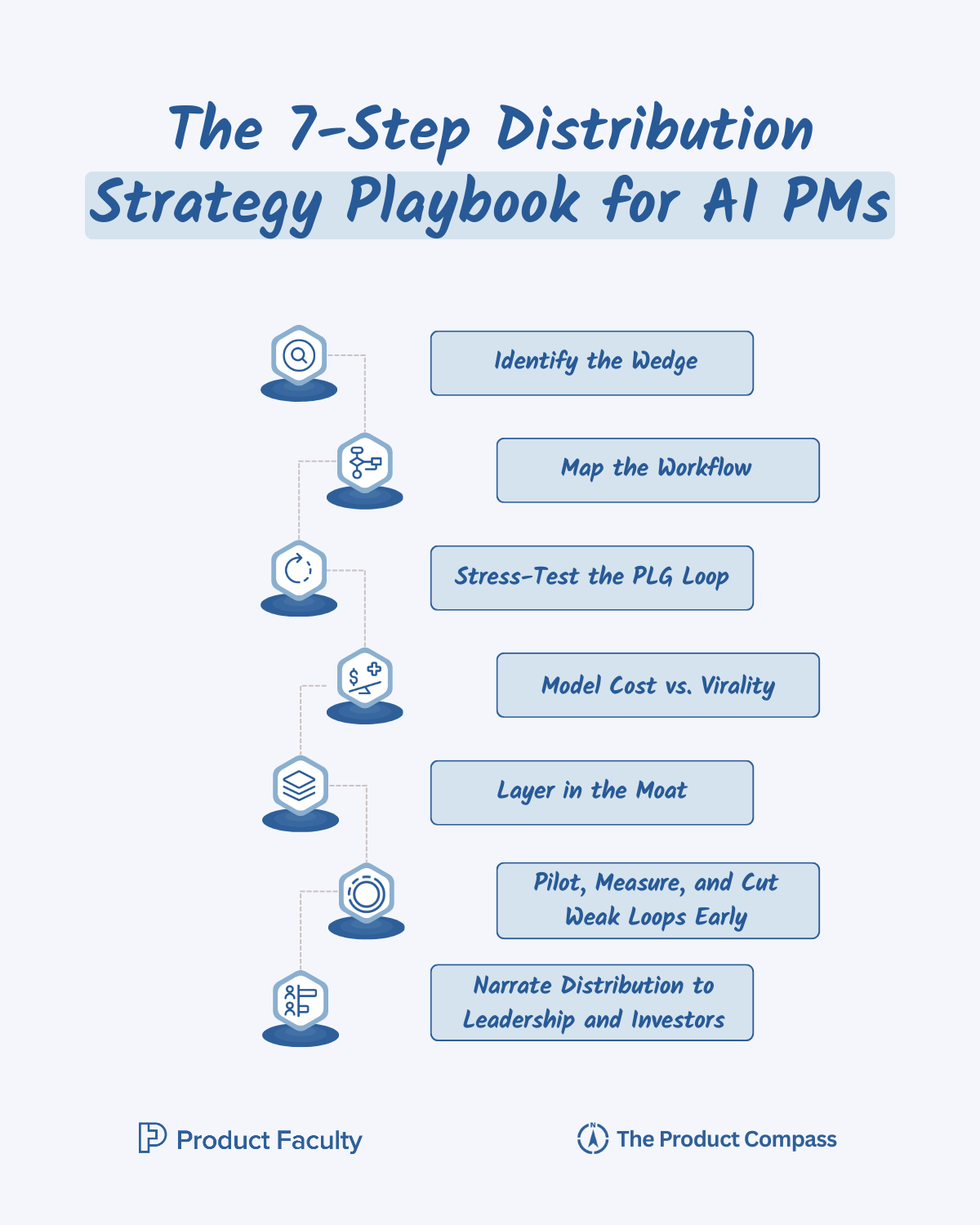

V. The 7-Step Distribution Strategy Playbook for AI PMs

Distribution has to be designed into the product from day one, because costs, virality, trust, and workflows are all interdependent.

Here’s a practical 7-step playbook you can run inside your org tomorrow:

Step 1: Identify the Wedge

Your wedge is not your product vision, and it’s definitely not your feature set. It’s the specific, painful, defensible entry point that forces the door open. Think of it as the scalpel, not the sledgehammer.

Ask yourself: Where do users feel the most frequent, hated pain in their workflow? Then ask: Can we deliver relief in under 30 seconds? If yes, that’s your wedge. If not, it’s not sharp enough.

The key is defensibility: if your wedge can be cloned by OpenAI or a weekend hacker, it’s not a wedge. It’s a demo.

A wedge must live in a unique context: a workflow step, a compliance bottleneck, or a cultural dynamic that competitors can’t instantly replicate.

Step 2: Map the Workflow

Once you’ve found the wedge, zoom out and draw the full user journey. Step by step, how does a user currently accomplish the task your product touches?

Your goal here is to find the piggyback points: the exact tools, habits, or platforms where your product can embed itself invisibly. Instead of asking users to form new behaviors, your job is to hijack existing ones.

Practical step: literally whiteboard the workflow and mark every integration surface (IDEs, CRMs, docs, emails, Slack, etc.). Your wedge should fit into one of those surfaces like a puzzle piece. If it requires inventing a new behavior, adoption friction will kill it.

Step 3: Stress-Test the PLG Loop

PLG (product-led growth) is not magic. Most PMs confuse “users like it” with “a distribution loop exists.” The real test: does usage naturally create more users?

Ask three questions:

Does one user’s output get shared with others by default?

Does every new account pull in data or contacts that attract more accounts?

Does using the product create artifacts (reports, invites, templates) that act as distribution nodes?
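One standard way to put a number on these three questions is the viral coefficient (k-factor): how many new users each user brings in through the loop. A minimal Python sketch, assuming you can measure invites (shares, exported reports, templates) per user and the share of recipients who sign up; it ignores churn and timing for simplicity.

```python
def viral_coefficient(invites_per_user: float, invite_conversion: float) -> float:
    """k-factor: new users each existing user brings in through one pass of the loop."""
    return invites_per_user * invite_conversion


def users_after(seed_users: float, k: float, cycles: int) -> float:
    """Cumulative users after `cycles` passes of the loop (no churn, an assumption)."""
    total = cohort = float(seed_users)
    for _ in range(cycles):
        cohort *= k  # each new cohort invites the next one
        total += cohort
    return total


# Hypothetical example: each user shares 4 reports and 20% of recipients sign up.
k = viral_coefficient(invites_per_user=4, invite_conversion=0.20)
print(f"k = {k:.2f}, users after 10 cycles from 1,000 seeds: {users_after(1000, k, 10):,.0f}")
```

With k below 1 (here 0.8), the loop amplifies your other channels but converges (toward 5,000 users from these 1,000 seeds) instead of sustaining itself; with k above 1, each cohort is larger than the last.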

Step 4: Model Cost vs. Virality

Here’s the trap: virality without economics destroys AI products. Every viral loop burns inference costs, and if margins collapse, growth becomes a liability.

You need to model the cost curve before scaling. For each loop, calculate: average usage per user × inference cost = burn per user. Then run that at 10x scale. If you can’t sustain it, you don’t have a loop; you have a time bomb.

The distribution play isn’t just “how do we grow fastest?” but “how do we grow without dying?” Virality is only valuable if the economics bend in your favor.
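The burn-per-user arithmetic in Step 4 fits in a few lines. A minimal Python sketch, assuming monthly averages for usage, blended inference cost, and revenue per user (free users count as zero revenue), plus a monthly subsidy you are willing to absorb; all numbers in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LoopEconomics:
    """Monthly unit economics of one growth loop. All inputs are hypothetical placeholders."""
    queries_per_user: float  # average queries per user per month
    cost_per_query: float    # blended inference cost per query, USD
    revenue_per_user: float  # average revenue per user per month, USD (free users = 0)

    def burn_per_user(self) -> float:
        # average usage per user × inference cost = burn per user
        return self.queries_per_user * self.cost_per_query


def stress_test(econ: LoopEconomics, users: int, monthly_subsidy: float, scale: int = 10) -> dict:
    """Project the loop at `scale`× today's users and compare its losses to what you can absorb."""
    scaled = users * scale
    burn = scaled * econ.burn_per_user()
    margin = scaled * (econ.revenue_per_user - econ.burn_per_user())
    return {
        "users": scaled,
        "monthly_burn": burn,
        "monthly_margin": margin,
        "sustainable": margin >= -monthly_subsidy,  # False means a time bomb, not a loop
    }


econ = LoopEconomics(queries_per_user=40, cost_per_query=0.02, revenue_per_user=0.50)
print(stress_test(econ, users=20_000, monthly_subsidy=25_000, scale=1))  # today
print(stress_test(econ, users=20_000, monthly_subsidy=25_000))           # at 10x
```

In this made-up example each user burns $0.80 a month against $0.50 of revenue. At 20,000 users the $6,000 monthly loss fits inside the subsidy; at 10x it becomes a $60,000 loss on $160,000 of inference burn, and the same loop fails the test.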

Step 5: Layer in the Moat

Distribution without defensibility is leaky. That’s why your wedge and loops must eventually tie into a moat: data, workflow, or trust.

Data moat: does usage create unique, structured data that compounds over time?

Workflow moat: do you become the default OS of a workflow, making replacement painful?

Trust moat: do you remove risk (compliance, accuracy, governance) that competitors can’t?

If your loops grow but don’t compound into one of these moats, competitors will clone you and steal your distribution. Moats convert distribution into permanence.

Step 6: Pilot, Measure, and Cut Weak Loops Early

The biggest mistake PMs make is treating every growth experiment as sacred. In AI, weak loops bleed money faster than in SaaS. That’s why you need to pilot small, measure aggressively, and kill ruthlessly.

Set a two-week experiment cadence: test a wedge or loop with a small subset of users, measure activation, virality, and cost, then make a call. Don’t let “zombie loops” linger. Every week they stay alive, they drain attention and budget.

Healthy loops show exponential signals even in pilots: invitations, shares, usage expansion. If you’re rationalizing weak signals (“maybe it’ll work at scale”), you’re already behind.
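The keep-or-kill call at the end of a two-week pilot can be written down as an explicit rule so nobody rationalizes a zombie loop. A minimal Python sketch; the metric names, the week-over-week virality check as a proxy for “exponential signals,” and both thresholds are assumptions to replace with your own.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    """Two weeks of data from one loop pilot (hypothetical metrics)."""
    activation_rate: float          # share of exposed users who reach the core action
    k_week1: float                  # viral coefficient measured in week 1
    k_week2: float                  # viral coefficient measured in week 2
    cost_per_activated_user: float  # inference + incentives, USD


def decide(p: PilotResult, min_activation: float = 0.25, max_cost: float = 2.0) -> tuple[str, str]:
    """Return ("scale" | "kill", reason). There is deliberately no "wait and see" outcome."""
    if p.cost_per_activated_user > max_cost:
        return "kill", "cost per activated user is above the ceiling"
    if p.activation_rate < min_activation:
        return "kill", "activation is below the floor"
    if p.k_week2 <= p.k_week1:
        return "kill", "sharing is flat or shrinking week over week"
    return "scale", "activation, cost and virality are all moving the right way"


print(decide(PilotResult(activation_rate=0.40, k_week1=0.30, k_week2=0.45, cost_per_activated_user=1.20)))
```

Leaving out an “iterate” branch is the point: a loop either earns more budget or frees it up.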

Step 7: Narrate Distribution to Leadership and Investors

Finally, you have to sell distribution as a moat, not a feature. Most execs and investors still think in terms of features: “cool AI summary,” “nice chatbot.” Your job is to reframe the conversation: distribution is the product.

When narrating, emphasize:

Moat: “Every new user creates proprietary data that compounds.”

Economics: “Our cost per query goes down as adoption grows.”

Loops: “Every output is an invite, every invite is a growth node.”

You’re not asking for resources to “market a feature.” You’re showing that distribution is the engine that makes the company defensible, fundable, and scalable.

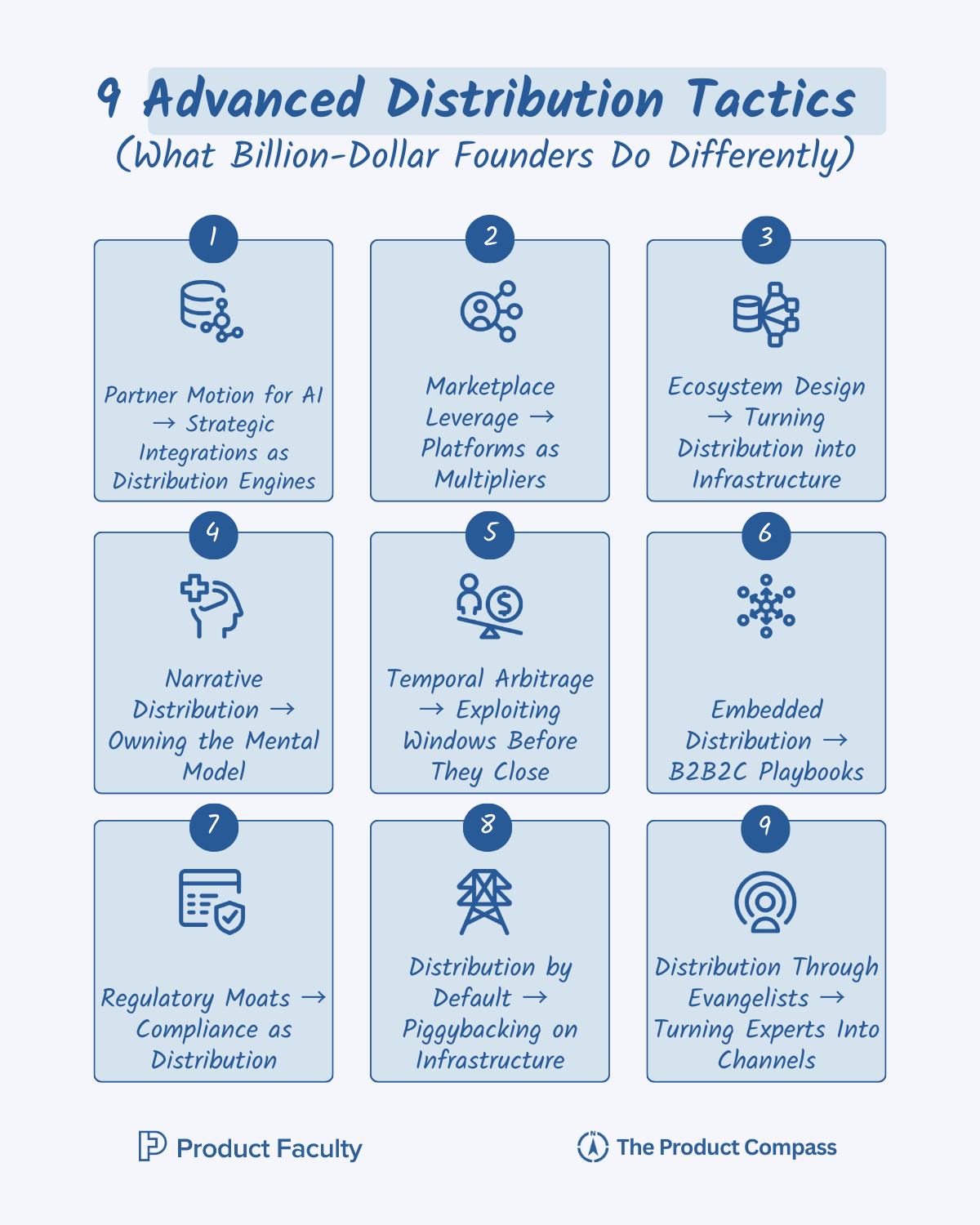

VI. 9 Advanced Distribution Tactics (What Billion-Dollar Founders Do Differently)

Most PMs stop at PLG loops and ads. But billion-dollar founders know that distribution isn’t just about channels; it’s about architecting leverage. They don’t ask, “How do we get more users?” They ask, “How do we design systems where growth compounds without us pushing?”

Here are 9 advanced distribution tactics I’ve seen consistently separate breakout AI companies from everyone else (with principles + playbook).

1. Partner Motion for AI → Strategic Integrations as Distribution Engines

In SaaS, integrations are often treated as features. In AI, they’re distribution multipliers. A strategic partner motion means embedding into ecosystems where your wedge instantly scales.

Principles:

Go where trust already exists: enterprise partners, incumbents, infra players.

Use integrations as distribution, not just functionality (Slack app → Slack marketplace visibility).

Prioritize asymmetric value: partners must feel you make their product more valuable.

Playbook:

Map the platforms your users spend time in (CRMs, IDEs, productivity suites).

Rank by distribution surface (marketplaces, email blasts, API ecosystems).

Build one flagship integration that partners want to promote.

Turn co-marketing into systemized loops (webinars, marketplace features, joint PR).

Measure adoption via the partner channel, not just usage.

2. Marketplace Leverage → Platforms as Multipliers

Marketplaces aren’t just distribution channels; they’re pre-built demand engines. Salesforce AppExchange, the Slack App Directory, and the Shopify App Store are all ecosystems where a listing means organic discovery.

Principles:

Marketplaces are SEO for workflows. You’re not fighting for attention; you’re slotting into intent.

Visibility compounds: more installs → higher ranking → more installs.

Reviews, ratings, and templates are themselves distribution assets.

Playbook:

Treat your marketplace listing like a landing page. Copy, screenshots, and onboarding flows must all be conversion-engineered.

Seed reviews early with real users. Social proof drives ranking.

Build “template packs” or “use cases” that increase surface area within the marketplace.

Monitor competitors weekly. Marketplaces move fast; winners actively defend their rankings.

3. Ecosystem Design → Turning Distribution into Infrastructure

The real billion-dollar distribution play isn’t acquisition. It’s ecosystem design. Instead of pulling users in one by one, you create infrastructure others build on.

Principles:

Your API, SDK, or dataset becomes the foundation for others’ products.

Ecosystem growth = your growth.

Moat shifts from “number of users” to “amount of dependency.”

Playbook:

Open APIs early, but throttle with tiers (free → paid).

Build evangelist programs for developers, consultants, and agencies.

Invest in documentation as a growth engine: clarity scales adoption.

Incentivize ecosystem creation (affiliate revenue, marketplace, data access).

Track “ecosystem GDP”: the number of products, workflows, or dollars created on top of you.
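“Open APIs early, but throttle with tiers” usually comes down to per-key rate limits that differ by plan. A minimal Python sketch using a token bucket per API key; the tier names and limits are hypothetical, and the injectable clock exists only so the behaviour is easy to test.

```python
import time
from dataclasses import dataclass

# Hypothetical tiers: sustained requests per minute and burst size.
TIERS = {
    "free": {"per_minute": 10, "burst": 5},
    "paid": {"per_minute": 600, "burst": 100},
}


@dataclass
class TokenBucket:
    rate_per_sec: float
    capacity: float
    tokens: float
    updated: float

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never above the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate_per_sec)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class TieredLimiter:
    def __init__(self, tiers=TIERS, clock=time.monotonic):
        self.tiers = tiers
        self.clock = clock
        self.buckets: dict[tuple[str, str], TokenBucket] = {}

    def allow(self, api_key: str, tier: str) -> bool:
        now = self.clock()
        key = (api_key, tier)  # an upgrade gets a fresh bucket with the new tier's limits
        if key not in self.buckets:
            cfg = self.tiers[tier]
            self.buckets[key] = TokenBucket(cfg["per_minute"] / 60, cfg["burst"], cfg["burst"], now)
        return self.buckets[key].allow(now)
```

The free tier stays generous enough to prototype on, while production traffic naturally pushes developers toward the paid tier.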

4. Narrative Distribution → Owning the Mental Model

Sometimes distribution is pure story. “Copilot for X.” “Operating System for Y.” “The Canva of Z.” These frames aren’t just clever taglines; they are distribution hacks that compress your GTM motion.

Principles:

People buy categories they understand faster than they buy categories they have to decode.

A crisp narrative reduces sales cycles, investor skepticism, and user confusion.

The best narratives travel: press, influencers, analysts spread them for free.

Playbook:

Write the one-line category claim before you launch a feature.

Anchor yourself against a giant (Copilot, OS, AWS). This borrows distribution credibility.

Stress-test internally: can every PM, engineer, and sales rep repeat the same frame?

Use narrative as a gate: if the tagline doesn’t immediately clarify your wedge + moat, don’t ship.

5. Temporal Arbitrage → Exploiting Windows Before They Close

AI markets move in compressed timeframes. What used to be a 5-year moat in SaaS is now a 5-month wedge in AI. The winners identify distribution channels or hacks that work for a short time and milk them before the window collapses.

Playbook:

Watch for algorithm shifts (e.g., TikTok suddenly promoting educational content).

Move fast to dominate before the channel saturates.

Have “channel kill-switches”: be ready to pivot when costs spike or virality drops.

Teach your org: distribution isn’t static; it’s opportunistic.
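A “channel kill-switch” works best when the trigger is decided before the channel gets exciting. A minimal Python sketch, assuming you track weekly CAC and shares per new user for each channel; the four-week baseline window and the 1.5x CAC spike and 50% virality drop thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChannelWeek:
    cac: float         # blended cost to acquire one user via this channel, USD
    share_rate: float  # shares or invites per new user from this channel


def should_kill(weeks: list[ChannelWeek], baseline_weeks: int = 4,
                cac_spike: float = 1.5, virality_drop: float = 0.5) -> bool:
    """Trip when the latest week's CAC spikes or sharing collapses versus the channel's early baseline."""
    if len(weeks) <= baseline_weeks:
        return False  # still inside the baseline window
    base = weeks[:baseline_weeks]
    base_cac = sum(w.cac for w in base) / baseline_weeks
    base_share = sum(w.share_rate for w in base) / baseline_weeks
    latest = weeks[-1]
    return latest.cac >= cac_spike * base_cac or latest.share_rate <= virality_drop * base_share
```

Measuring against the channel’s own early weeks, rather than a company-wide average, is what makes the switch trip when a window starts to close.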

6. Embedded Distribution → B2B2C Playbooks

Instead of selling directly to end users, you embed your AI into another company’s product, letting them carry you into thousands of accounts.

Playbook:

Identify adjacent SaaS tools where your feature could be white-labeled.

Negotiate a revenue share or “powered by” branding (distribution at zero CAC).

Make integration dead-simple (API, SDK, plug-and-play).

Prioritize partners with customer overlap but non-competing positioning.

7. Regulatory Moats → Compliance as Distribution

Most founders treat regulation as a blocker. The savviest founders treat it as a wedge. If you’re the only AI tool that passes a compliance threshold, you instantly unlock markets competitors can’t touch.

Playbook:

Pick one regulated vertical (finance, healthcare, defense).

Invest early in audits, certifications, and compliance workflows.

Make compliance visible in your marketing (“SOC 2-ready,” “HIPAA-aligned”).

Use compliance to strike enterprise deals competitors can’t even bid on.

8. Distribution by Default → Piggybacking on Infrastructure

This is the rarest but most powerful tactic: make your AI the default option inside an infrastructure layer (cloud, app store, hardware). Once you’re bundled, distribution becomes automatic.

Playbook:

Target infra players who need AI to remain competitive (cloud vendors, browser makers).

Offer your tech as a “default setting”: cheap or free to them, but sticky for you.

Negotiate placement in onboarding flows or menus (think “pre-installed”).

Optimize for volume over margin: defensibility comes from being unremovable.

9. Distribution Through Evangelists → Turning Experts Into Channels

This is not influencer marketing in the consumer sense, but expert evangelism in domains where trust is scarce.

Playbook:

Recruit 10–50 respected practitioners (lawyers, designers, engineers).

Give them privileged access, equity, or revenue share.

Encourage them to teach, publish, and showcase real use cases with your product.

Track “downstream adoption”: every evangelist should pull in dozens of accounts.
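Tracking “downstream adoption” mostly means attributing each new account to the evangelist who brought it in. A minimal Python sketch, assuming signups carry a hypothetical `referred_by` field (for example, from a referral link or code) and reading “dozens” as a target of 24 accounts per evangelist.

```python
from collections import Counter


def downstream_adoption(signups: list[dict]) -> Counter:
    """Count new accounts per evangelist, using a hypothetical `referred_by` field on each signup."""
    return Counter(s["referred_by"] for s in signups if s.get("referred_by"))


def below_target(counts: Counter, roster: list[str], target: int = 24) -> list[str]:
    """Evangelists on the roster who have not yet pulled in `target` accounts (24 is an assumption)."""
    return [name for name in roster if counts[name] < target]
```

Including the full roster in `below_target` matters: evangelists with zero attributed accounts never appear in the counts, so they would otherwise be invisible.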

VII. Conclusion: The Distribution Mindset Shift

Here’s the uncomfortable truth:

In AI, features don’t last.

Every summarizer, assistant, or copilot you launch can be copied in hours, built into ChatGPT in the next update, and forgotten within a year.

The only thing that really lasts is how your product spreads.

Distribution is no longer just a “go-to-market” job. It’s the core strategy of survival.

A wedge that solves such a painful problem it becomes the obvious way in.

A loop where every use brings in the next user, without ads.

A moat that gets stronger as more people use your product.

A story so clear that customers and investors repeat it for you.

That’s the mindset shift for AI PMs and product leaders:

Stop asking “What can AI do?”

Start asking “How will AI spread in a way no competitor can copy?”

Because in this wave, it’s not the smartest model that wins.

It’s not the flashiest feature that wins.

It’s the company that spreads faster, deeper, and more defensibly than the rest.

That’s the essence of AI distribution.

And it’s the difference between a wrapper that dies in 12 months and a company that shapes the next decade!

See you next week!