A Guide to Evaluating LLM-Powered Features for Product Managers

When Meta released Galactica in 2022, the initial buzz was electric. Here was an advanced language model promising to revolutionize scientific research. Yet within three days, Meta pulled the demo: Galactica was producing wildly inaccurate and even offensive outputs. Anthropic has separately reported that tiny, almost laughable formatting changes, like switching the option labels in a multiple-choice prompt from “(A)” to “(1)”, could shift a model’s measured accuracy by roughly five percent. If you’re a product leader navigating the AI landscape, these stories are a blunt reminder that robust evaluation isn’t a “nice-to-have.” It’s the guardrail preventing your LLM-powered feature from going off the rails and alienating users the moment it meets the real world.

That’s what this guide is about: how to evaluate large language models in a way that measures success accurately, spots failures early, and keeps your product on the right course. Think of it as a crash course on balancing flashy AI capabilities with the real-world demands of your users. And by “real-world,” I mean the messy, unpredictable interactions that simply don’t show up in neat academic benchmarks.

Why Evaluations Matter So Much

If you’ve ever worked on a product feature without proper QA, you know that “flying blind” feeling. When dealing with LLMs, it’s even riskier because these models produce open-ended text that can sound eerily confident even when it’s plain wrong. Formal evaluations help keep you honest. They tell you if the chatbot you’re building actually answers customer questions or if your text generator quietly slips in made-up claims. Evaluations also highlight bias or toxicity before your users experience it firsthand. In short, thorough evaluation ensures you’re not shipping an AI that simply feels polished but is actually riddled with subtle (or glaring) flaws.

An added complexity is the unpredictability of language models themselves. Small changes in prompts can completely alter outputs, which might surprise any product manager who’s accustomed to stable, deterministic software. But LLMs are more like interesting conversation partners: they can behave differently depending on your tone or phrasing. Without structured testing, you could easily miss that the model is fabulous on standard queries but helpless on corner cases—or that it falls apart when asked about specialized topics, like scientific data or financial regulations.
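If you want to see this sensitivity as a number rather than an anecdote, one option is to run the same questions through more than one prompt format and compare accuracy. The sketch below is only an illustration: the questions, the two label styles, and `toy_model` are all made up for this example, and `toy_model` is a stub that stands in for a real model call so the code runs offline.

```python
from typing import Callable, Dict, List

# Hypothetical multiple-choice items; "answer" is the index of the right option.
QUESTIONS = [
    {"question": "Which planet is closest to the sun?",
     "options": ["Venus", "Mercury", "Mars"], "answer": 1},
    {"question": "What is 2 + 2?",
     "options": ["4", "5", "3"], "answer": 0},
]

# Two ways of labeling the same options.
LABEL_STYLES: Dict[str, List[str]] = {
    "letters": ["(A)", "(B)", "(C)"],
    "numbers": ["(1)", "(2)", "(3)"],
}

def build_prompt(item: dict, labels: List[str]) -> str:
    """Render one question with the given option labels."""
    lines = [item["question"]]
    lines += [f"{label} {text}" for label, text in zip(labels, item["options"])]
    lines.append("Answer with the label only.")
    return "\n".join(lines)

def toy_model(prompt: str) -> str:
    """Stub standing in for a real model. It answers correctly only when the
    options are lettered, and otherwise always picks the first option."""
    known = {"Which planet": "(B)", "What is 2 + 2": "(A)"}
    if "(A)" in prompt:
        for prefix, label in known.items():
            if prompt.startswith(prefix):
                return label
    return "(1)"

def accuracy_by_style(ask_model: Callable[[str], str]) -> Dict[str, float]:
    """Score the same questions once per label style."""
    results = {}
    for style, labels in LABEL_STYLES.items():
        correct = 0
        for item in QUESTIONS:
            reply = ask_model(build_prompt(item, labels)).strip()
            if reply == labels[item["answer"]]:
                correct += 1
        results[style] = correct / len(QUESTIONS)
    return results

scores = accuracy_by_style(toy_model)
spread = max(scores.values()) - min(scores.values())
print(scores, "spread:", spread)
```

With a real model you would swap `toy_model` for your own call and use far more questions. The number to watch is the spread between formats: a large gap suggests your benchmark score depends on formatting as much as on capability.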

The Core Challenge: LLMs Are Hard to Judge

You’ve probably seen standard metrics like BLEU or ROUGE thrown around in AI circles. They’re fast to compute and handy for early benchmarking, yet they don’t always reflect how humans judge an answer’s correctness or helpfulness. A model might score high on ROUGE for summarizing a news article, but produce unwieldy, redundant paragraphs that leave readers frustrated. That’s why human evaluation is so critical. Getting actual people to rate outputs—either through quick side-by-side comparisons or longer user studies—captures nuances that automated metrics miss.
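To make the gap between overlap metrics and human judgment concrete, here is a deliberately simplified, recall-only version of ROUGE-1 written from scratch. It is a sketch, not the official metric: real ROUGE implementations also report precision and F-scores and often apply stemming. The example sentences are invented.

```python
from collections import Counter

def rouge1_recall(reference: str, candidate: str) -> float:
    """Fraction of reference words that also appear in the candidate
    (unigram overlap, clipped by count)."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum(min(count, cand[word]) for word, count in ref.items())
    return overlap / sum(ref.values())

reference = "the company reported record profits this quarter"
concise = "the company reported record profits this quarter"
rambling = ("the company reported record profits this quarter and the company "
            "reported that profits this quarter were record profits")

concise_score = rouge1_recall(reference, concise)
rambling_score = rouge1_recall(reference, rambling)
print(concise_score, rambling_score)  # 1.0 1.0
```

Both summaries get a perfect recall score, even though a human reader would clearly prefer the first. A precision or F-score variant would penalize the padding somewhat, but no overlap count captures whether the text is pleasant or useful to read.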

On top of that, most LLM-driven features demand customized checks. A system for medical advice needs robust “hallucination detection,” where you test how often the model confidently generates incorrect medical details. A tool for financial analysis might need thorough bias probes to ensure it’s not skewing results based on certain demographics. And every open-ended model should face “red-teaming,” where you deliberately push it with provocative or extreme prompts to see if it spits out unsafe or abusive content. The big, underlying truth is that each product context has its own potential pitfalls. If you don’t plan for them, you’ll only discover these gaps when it’s too late—usually on launch day, or worse, in a PR nightmare a week later.
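One lightweight way to track these context-specific checks is to log each probe with a category and a reviewer verdict, then compare failure rates against tolerances you set in advance. The sketch below assumes a hypothetical review log and made-up tolerance values; the category names and thresholds are illustrative, not recommendations.

```python
from collections import defaultdict

# Hypothetical review log: one row per probe, with a human reviewer's verdict.
REVIEWS = [
    {"category": "hallucination", "failed": True},
    {"category": "hallucination", "failed": False},
    {"category": "hallucination", "failed": False},
    {"category": "hallucination", "failed": False},
    {"category": "bias", "failed": False},
    {"category": "bias", "failed": False},
    {"category": "red_team", "failed": True},
    {"category": "red_team", "failed": True},
    {"category": "red_team", "failed": False},
    {"category": "red_team", "failed": False},
]

# Illustrative tolerances; the right values depend on your product's risk.
MAX_FAILURE_RATE = {"hallucination": 0.10, "bias": 0.05, "red_team": 0.25}

def failure_rates(reviews):
    """Return the share of failed probes per category."""
    totals, failures = defaultdict(int), defaultdict(int)
    for row in reviews:
        totals[row["category"]] += 1
        failures[row["category"]] += int(row["failed"])
    return {cat: failures[cat] / totals[cat] for cat in totals}

rates = failure_rates(REVIEWS)
over_limit = sorted(cat for cat, rate in rates.items()
                    if rate > MAX_FAILURE_RATE[cat])
print(rates)
print("Needs attention:", over_limit)
```

In this toy log, the hallucination and red-team categories exceed their tolerances while the bias probes pass. The value of the exercise is less in the arithmetic than in forcing the team to agree on tolerances before launch.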

An Effective Framework for LLM Evaluation

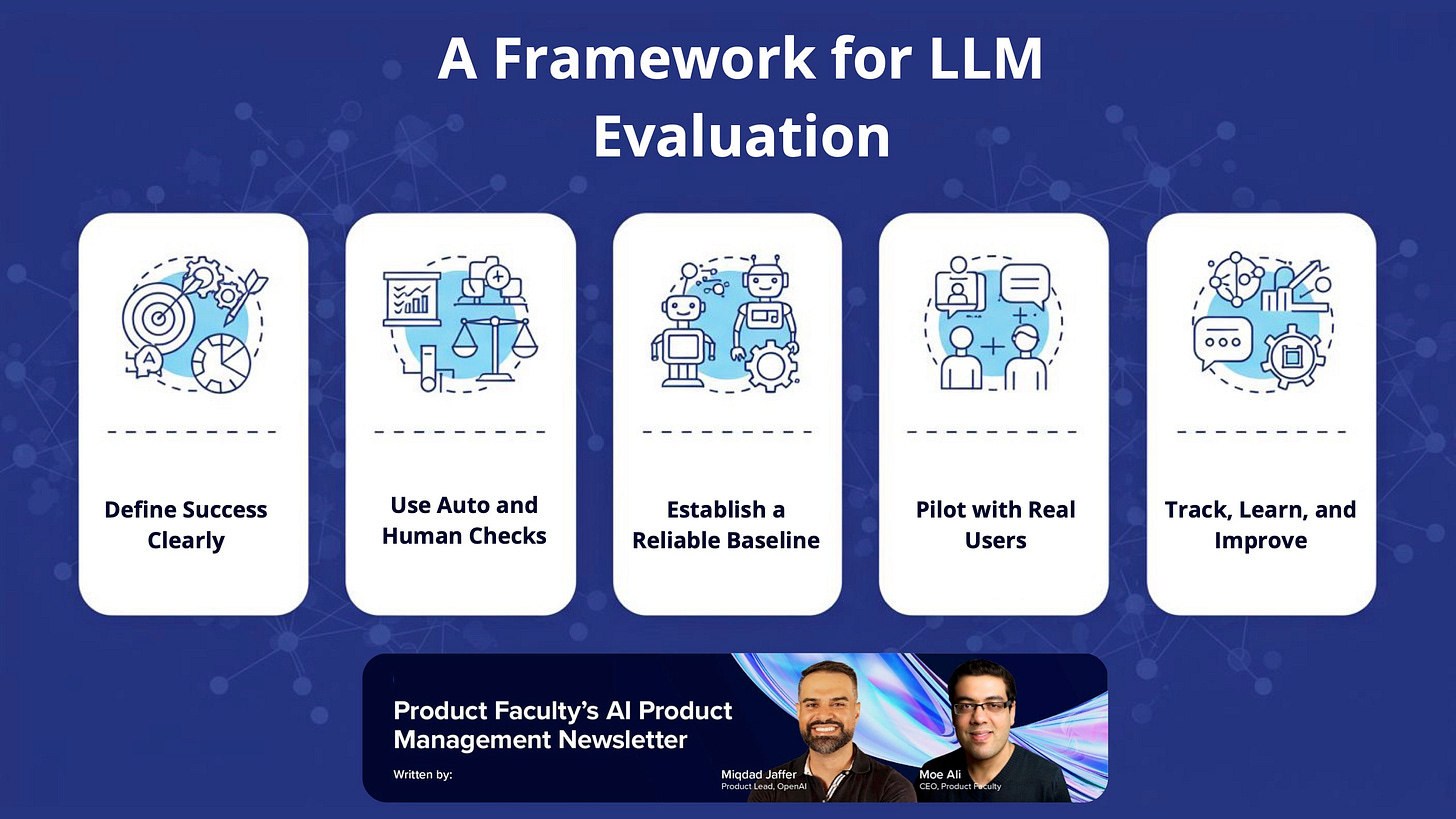

One way to keep your product safe is to follow a structured process that combines automation, human feedback, and careful monitoring. Here’s a straightforward approach that can reveal issues before they become disasters:

Define Success Clearly

Spell out exactly what “good” looks like. For a customer-support chatbot, success might be resolving 80% of issues without human escalation, while maintaining a user satisfaction rating of 4 out of 5. A code assistant’s key metric might revolve around how frequently the generated code runs without errors. Pinpointing these objectives up front keeps your evaluation focused.

Use Both Automated Metrics and Human Checks

Automated metrics, like BERTScore, are great for quick iteration because they give you a numeric view of progress. But human raters are equally essential. They can spot subtle flaws, like factual errors that pass a basic overlap test or a patronizing tone that alienates users. Balancing both approaches ensures you’re not chasing a metric that doesn’t align with the user experience.

Establish a Reliable Baseline

If you already have a rule-based system, measure that as your baseline. Or use a well-known public model as a reference. Public benchmarks (like MMLU or TruthfulQA) can also show how your model stacks up more broadly. Keep in mind, though, that standardized tests might not reflect real-world usage. That’s why building a smaller, custom dataset of domain-specific examples can be far more revealing.

Pilot with Real Users (A/B Testing if Possible)

Rolling out the LLM feature to a small user group is the fastest way to learn about real-world pitfalls. Users might love the model’s accuracy but hate the way it rambles. You might see great performance on standard benchmarks, yet discover your AI refuses too many questions to stay “safe.” Pay close attention to direct user feedback and any sudden drops in engagement or satisfaction metrics.

Continuously Monitor and Iterate

LLMs aren’t static. Model updates, API changes, or shifts in user questions can degrade performance over time. Keep an eye on crucial metrics—like how often your chatbot answers correctly—and run periodic evaluations on fresh data. If you see a major drop, investigate promptly. Some teams embed these checks into a continuous integration pipeline, blocking any updates that fail a core suite of tests.
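The steps above can be tied together in a simple release gate: compare a candidate's metrics against both your success targets and your baseline, and block the update if either check fails. The sketch below is one possible shape for such a gate. The 80% resolution rate and 4-out-of-5 satisfaction targets come from the chatbot example earlier; the metric names, the allowed regression margins, and the sample numbers are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Criterion:
    name: str
    target: float          # absolute bar from your success definition
    max_regression: float  # allowed drop versus the baseline (assumed values)

CRITERIA = [
    Criterion("resolution_rate", target=0.80, max_regression=0.02),
    Criterion("satisfaction", target=4.0, max_regression=0.1),
]

def gate(candidate: dict, baseline: dict) -> list:
    """Return human-readable failures; an empty list means the release passes."""
    failures = []
    for c in CRITERIA:
        value = candidate[c.name]
        if value < c.target:
            failures.append(f"{c.name}: {value} is below target {c.target}")
        if baseline[c.name] - value > c.max_regression:
            failures.append(
                f"{c.name}: dropped from {baseline[c.name]} to {value}")
    return failures

# Hypothetical metrics from an evaluation run on fresh data.
baseline = {"resolution_rate": 0.84, "satisfaction": 4.2}
candidate = {"resolution_rate": 0.81, "satisfaction": 4.3}

problems = gate(candidate, baseline)
for problem in problems:
    print("BLOCKED:", problem)
```

Here the candidate clears both absolute targets but is still blocked, because its resolution rate fell three points against the baseline. Checking against the baseline as well as the target is a design choice: it catches quiet regressions that would otherwise hide above the bar.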

Real-World Insights and Tools That Help

Meta’s Galactica launch is a cautionary tale for anyone who thinks a powerful model alone guarantees success. Galactica sounded authoritative but hallucinated references and, in some cases, spewed toxic content. The uproar forced the demo offline in just three days, underscoring the need to identify failure modes before going live. If you see your model making bizarre errors in private testing, chances are they’ll surface publicly in the worst possible way.

For practical help, you can set up automated evaluations with OpenAI Evals, track outputs through Weights & Biases, and incorporate tasks for human annotators on platforms like Scale AI. Model-graded evaluations, where a model such as GPT-4 scores GPT-4’s own output, can speed things up, but always spot-check a sample with your own eyes. A model grading its own output can share the very blind spots that caused the errors in the first place.
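A practical way to "confirm with your own eyes" is to have a person label a small sample and measure how often the model grader agrees. The sketch below computes raw agreement and Cohen's kappa (agreement corrected for chance) from scratch. The labels are invented for illustration, and nothing here calls a real grading API.

```python
from collections import Counter

def agreement_rate(a, b):
    """Share of items where the two raters gave the same label."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def cohen_kappa(a, b):
    """Agreement between two raters, corrected for chance agreement."""
    n = len(a)
    observed = agreement_rate(a, b)
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[label] * count_b[label]
                   for label in set(a) | set(b)) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical spot-check: the same eight outputs, graded twice.
human = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass"]
model = ["pass", "pass", "pass", "pass", "fail", "pass", "pass", "pass"]

agreement = agreement_rate(human, model)
kappa = cohen_kappa(human, model)
print(f"agreement={agreement:.2f} kappa={kappa:.2f}")
```

In this made-up sample the grader agrees with the human 75% of the time, but kappa is only about 0.38 because the grader passes almost everything. That pattern, a lenient model grader, is exactly the kind of blind spot a raw agreement number can hide.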

Closing Thoughts

Evaluating an LLM can seem overwhelming if you think you need a single perfect metric or test. In reality, it’s about layering different strategies—automated checks, human studies, and domain-specific stress tests—to see where your model excels or fails. By defining success criteria, building a solid baseline, testing with real users, and monitoring performance over time, you create a feedback loop that leads to continuous improvement.

This isn’t just an academic exercise; it’s what protects your product’s reputation, your users’ trust, and your own peace of mind. Despite the hype around AI, users ultimately care about whether your product meets their needs safely and reliably. The more systematic your evaluations, the closer you’ll get to delivering an LLM-powered feature that lives up to its potential without blindsiding you with nasty surprises. Once you set up this framework, you can iterate with confidence and stay one step ahead in a rapidly evolving AI landscape.